gist.github.com/josephrocca/...

You can paste the code here to turn it into a bookmarklet:

chriszarate.github.io/bookmarkleter/

(With great power comes great responsibility. Personally using it for extremely bad faith tribal brainrot.)

👋 I work on RL, world models, and generalization in decision-making. I'm perhaps most well known for my work on "TD-MPC2: Scalable, Robust World Models for Continuous Control" www.tdmpc2.com

Huginn-0125: Pretraining a Depth-Recurrent Model

Train a recurrent-depth model at scale on 4096 AMD GPUs on Frontier.

They release both transformer and SSM-hybrid models under an Apache 2.0 license.

They are releasing the code and weights for π0 as part of their experimental openpi repository.

Blog: www.pi.website/blog/openpi

Repo: github.com/Physical-Int...

Pi0 is the most advanced Vision Language Action model. It takes natural language commands as input and directly outputs autonomous behavior.

It was trained by @physical_int and ported to PyTorch by @m_olbap

👇🧵

Baichuan releases Baichuan-Omni-1.5

Open-source Omni-modal Foundation Model Supporting Text, Image, Video, and Audio Inputs as Well as Text and Audio Outputs.

Both the model ( huggingface.co/baichuan-inc... ) and the base model ( huggingface.co/baichuan-inc... ) are available.

Thanks to @searyanc.dev for landing the new --erasableSyntaxOnly tsconfig flag. Heading for TS 5.8 Beta next week 🎉

🔷 Guides users away from TS-only runtime features such as enum & namespace

🔷 Pairs nicely with Node's recent TypeScript support

github.com/microsoft/Ty...

🔋Eliminates the value function from PPO to save boatloads of compute

💰 Samples N completions per prompt to compute average rewards across a group

To use it, run:

pip install git+https://github.com/huggingface/trl.git
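
A rough sketch of the group-average idea described above: each completion's reward is compared against the mean reward of the other completions sampled for the same prompt, so no learned value function is needed as a baseline. The function name `group_relative_advantages` and the sample rewards are made up for illustration, and dividing by the group's standard deviation is an assumption borrowed from common formulations of this technique, not something the post states. This is not the TRL implementation.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Score each completion relative to its own group (hypothetical helper).

    `rewards` holds one scalar reward per completion sampled for a single prompt.
    """
    baseline = mean(rewards)   # group average stands in for a value function
    spread = pstdev(rewards)   # assumption: normalize by the group's std dev
    # eps avoids division by zero when every completion gets the same reward
    return [(r - baseline) / (spread + eps) for r in rewards]


# Illustrative rewards for N = 4 completions of one prompt (made-up numbers)
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)  # roughly [1.0, -1.0, -1.0, 1.0]
```

Completions that beat their group's average get a positive advantage and the rest get a negative one, which is the signal a policy-gradient update would then use.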

- INTELLECT-MATH, a frontier 7B parameter model for math reasoning that shows that the quality of your SFT initialization strongly impacts reinforcement learning.

Blog: www.primeintellect.ai/blog/intelle... Models: huggingface.co/PrimeIntelle...

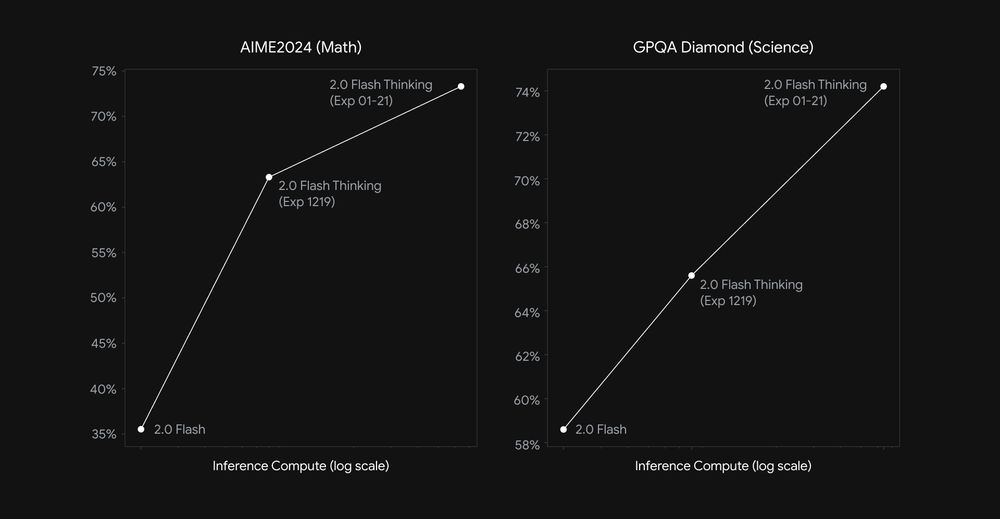

Today we’re sharing an experimental update w/improved performance on math, science, and multimodal reasoning benchmarks 📈:

• AIME: 73.3%

• GPQA: 74.2%

• MMMU: 75.4%

The open-weight tokenizer-free language model. Their 6.5B byte-level LM, EvaByte, matches modern tokenizer-based LMs with 5x less data & 2x faster decoding!

Project: github.com/bytedance/UI...

Desktop: github.com/bytedance/UI...

Browser: github.com/web-infra-de...

Models: huggingface.co/bytedance-re...

Paper: arxiv.org/abs/2501.12326

👉 npm i kokoro-js 👈

Link to demo (+ sample code) in 🧵

DeepSeek-R1 (Preview) Results. The model performs in the vicinity of o1-Medium, providing SOTA reasoning performance on LiveCodeBench.

huggingface.co/kyutai/heliu...

After 2 years of work, I'm excited to announce our newest paper, MatterGen, has been published in Nature!

www.nature.com/articles/s41...

We are also releasing all the training data, model weights, model code, and evaluation code on GitHub!

github.com/microsoft/ma...

TLAS/BLAS construction and traversal, for single and double precision BVHs, and including a brand new GPU demo: See the attached real-time footage, captured at 1280x720 on an NVIDIA 2070 laptop GPU.

#RTXoff

github.com/jbikker/tiny...

- Performance surpasses models like Llama3.1-8B and Qwen2.5-7B

- Capable of deep reasoning with system prompts

- Trained on only 4T high-quality tokens

huggingface.co/collections/...

🔖 Model collection: huggingface.co/collections/...

🔖 Notebook on how to use: colab.research.google.com/drive/1e8fcb...

🔖 Try it here: huggingface.co/spaces/hysts...

Goodbye WinterCG, welcome WinterTC!

deno.com/blog/wintertc
