chrishedges.substack.com

substack.com/@caitlinjohn...

substack.com/@arnaudbertr...

substack.com/@mate

link.springer.com/content/pdf/...

sites.bu.edu/steveg/files...

@mschrimpf.bsky.social @brendenlake.bsky.social @gretatuckute.bsky.social @tonyzador.bsky.social @garymarcus.bsky.social @neuranna.bsky.social @bayesianboy.bsky.social @cocoscilab.bsky.social @mpshanahan.bsky.social

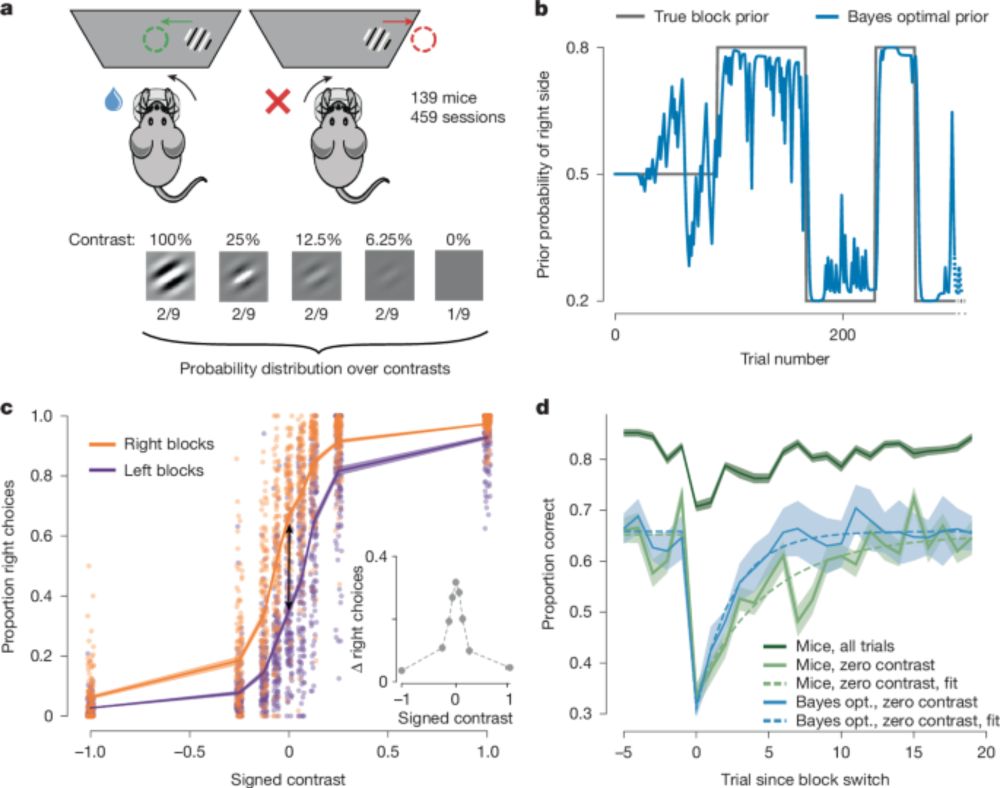

Two new papers from the #IBL looking at brain-wide activity:

www.nature.com/articles/s41...

www.nature.com/articles/s41...

#neuroscience 🧪