Understanding this balance enables better tuning of auto-labeling pipelines, prioritizing overall model effectiveness over superficial label cleanliness.

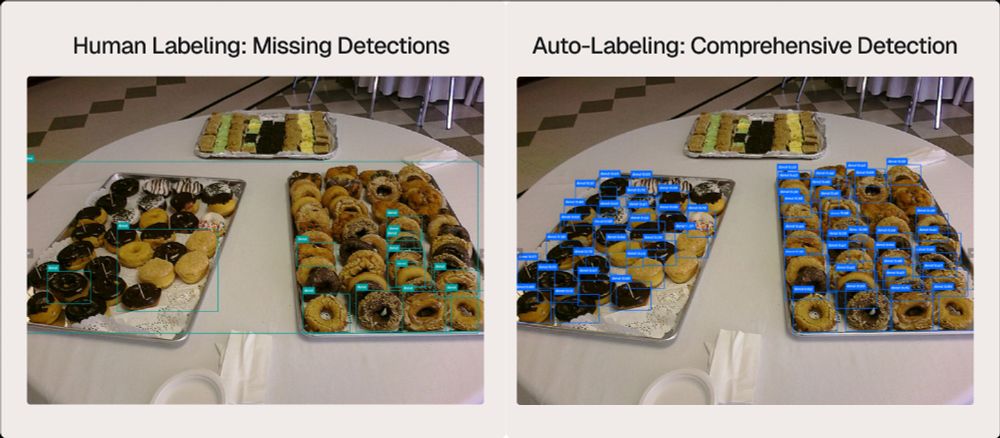

The image below compares a human-labeled image (left) with an auto-labeled one (right). Humans are clearly, umm, lazy here :)

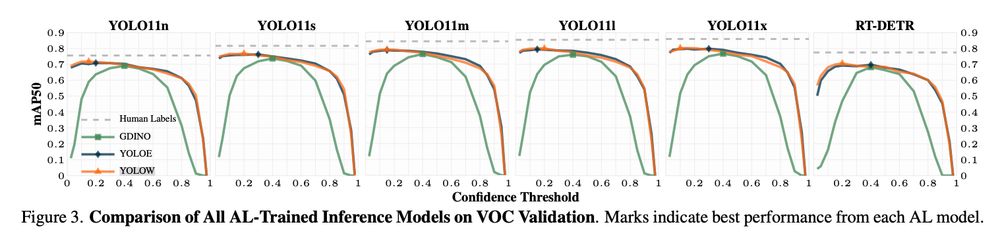

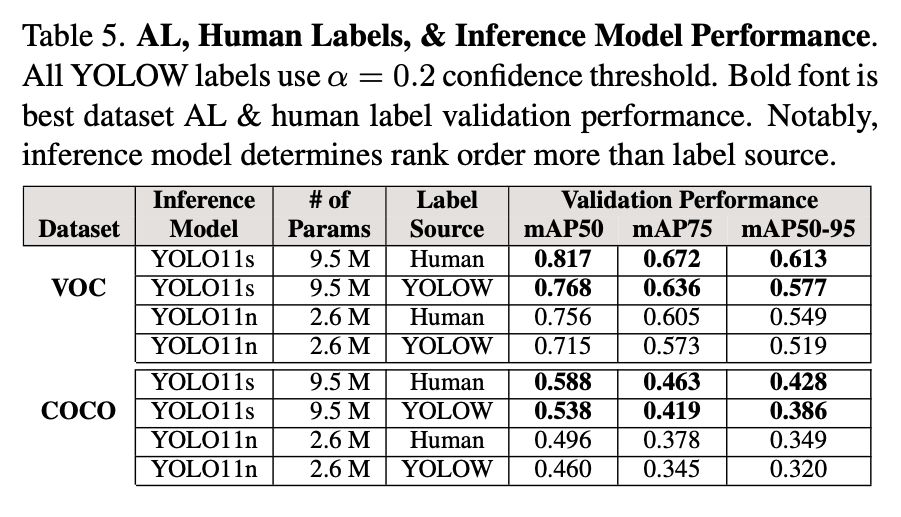

The mean average precision (mAP) of models trained on auto labels approached that of models trained on human labels.

On VOC, auto-labeled models achieved an mAP50 of 0.768, close to the 0.817 achieved with human-labeled data.
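
As a quick sanity check on the VOC numbers quoted here, the relative performance can be computed directly. This is only a minimal sketch: the helper name `relative_performance` is our own and does not come from the paper or the blog.

```python
def relative_performance(auto_map: float, human_map: float) -> float:
    """Return the auto-label mAP as a fraction of the human-label mAP."""
    if human_map <= 0:
        raise ValueError("human_map must be positive")
    return auto_map / human_map


# VOC mAP50 values quoted in the post (auto labels vs. human labels).
voc_ratio = relative_performance(0.768, 0.817)
print(f"Auto labels reach {voc_ratio:.1%} of the human-label mAP50 on VOC")
```

The ratio covers this one dataset only; other benchmarks in the study may land higher or lower.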

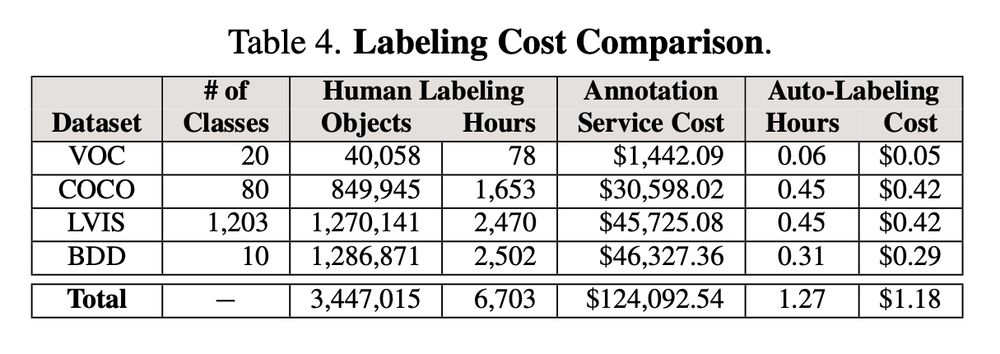

📊 Massive cost and time savings.

Verified Auto Labeling costs $1.18 and 1 hour of @NVIDIA L40S GPU time, vs. over $124,092 and 6,703 hours for human annotation.

Read our blog to dive deeper: link.voxel51.com/verified-auto-labeling-tw/
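
For readers who want to see the scale of those savings, the ratios follow from simple division. The sketch below uses only the four figures quoted in the post; the constant names are ours, and since the human cost is stated as "over" $124,092, the cost ratio is a lower bound.

```python
# Figures quoted in the post; names are illustrative only.
AUTO_COST_USD = 1.18
AUTO_HOURS = 1.0
HUMAN_COST_USD = 124_092.0  # stated as "over" this amount
HUMAN_HOURS = 6_703.0

cost_ratio = HUMAN_COST_USD / AUTO_COST_USD
time_ratio = HUMAN_HOURS / AUTO_HOURS

print(f"Cost: at least {cost_ratio:,.0f}x cheaper")
print(f"Time: about {time_ratio:,.0f}x faster")
```

Both ratios are in line with the "100,000x cheaper" and "5,000x faster" headline figures used elsewhere in the thread.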

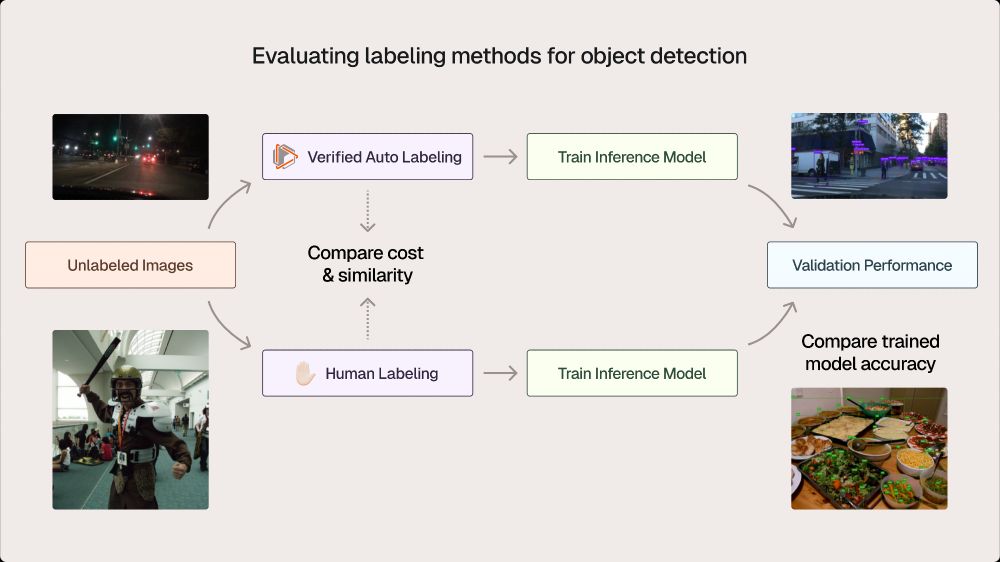

- Used off-the-shelf foundation models to label several benchmark datasets

- Evaluated these labels relative to the human-annotated ground truth
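
One common way to evaluate detection labels against ground truth is to match boxes by intersection-over-union (IoU) and count hits and misses. The sketch below assumes axis-aligned boxes, ignores class labels and confidence scores, and uses a greedy matcher with a 0.5 threshold. All names here are hypothetical, and this is not necessarily the protocol used in the paper.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_boxes(
    auto: List[Box], human: List[Box], threshold: float = 0.5
) -> Tuple[int, int, int]:
    """Greedily match auto boxes to human boxes; return (tp, fp, fn)."""
    unmatched = list(range(len(human)))
    tp = 0
    for box in auto:
        best_idx, best_iou = None, threshold
        for idx in unmatched:
            score = iou(box, human[idx])
            if score >= best_iou:
                best_idx, best_iou = idx, score
        if best_idx is not None:
            unmatched.remove(best_idx)  # each human box is matched at most once
            tp += 1
    return tp, len(auto) - tp, len(unmatched)


human_boxes = [(0, 0, 10, 10), (20, 20, 30, 30)]
auto_boxes = [(1, 1, 10, 10), (50, 50, 60, 60)]
tp, fp, fn = match_boxes(auto_boxes, human_boxes)
precision = tp / (tp + fp) if tp + fp else 0.0
recall = tp / (tp + fn) if tp + fn else 0.0
print(f"precision={precision:.2f} recall={recall:.2f}")
```

In this toy case one auto box overlaps a human box well, one is spurious, and one human box is missed, so precision and recall are both 0.5.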

The zeitgeist claims zero-shot labeling is here, but no one had measured it. We did: 95%+ of the performance of human labels, 100,000x cheaper and 5,000x faster.

arxiv.org/abs/2506.02359

What better way to start a new week than with a new contributed article in VentureBeat! Focus: the battle for open source AI and the serious risk that selective transparency poses.

venturebeat.com/ai/the-open-...

Making Visual AI a Reality. Every day. Grew to 50 team members, dozens of F500 customers, 3M open source installs...

Stay tuned for a wild 2025 from @voxel51.bsky.social

**New Paper Alert** VITRO: Vocabulary Inversion for Time-series Representation Optimization. To appear at ICASSP 2025. w/ Filipos Bellos and Nam Nguyen.

👉 Project Page w/ code: fil-mp.github.io/project_page/

👉 Paper Link: arxiv.org/abs/2412.17921

New Paper Alert!

Explainable Procedural Mistake Detection

With coauthors Shane Storks, Itamar Bar-Yossef, Yayuan Li, Zheyuan Zhang and Joyce Chai

Full Paper: arxiv.org/abs/2412.11927

State-of-the-art mislabel detection to fix the glass ceiling, save MLE time, and save money! Try it on your problem!

👉 Paper Link: arxiv.org/abs/2412.02596

👉 GitHub Repo: github.com/voxel51/reco...

New Paper Alert!

👉 Project Page: excitedbutter.github.io/project_page/

👉 Paper Link: arxiv.org/abs/2412.04189

👉 GitHub Repo: github.com/ExcitedButte...

New open source AI feature alert! Leaky splits can be the bane of ML models, giving a false sense of confidence, and a nasty surprise in production.

Blog medium.com/voxel51/on-l...

Code github.com/voxel51/fift...
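
To make the idea of a leaky split concrete, here is a minimal sketch that flags samples whose raw bytes appear in both the train and test sets. It is a deliberate simplification: it only catches byte-identical duplicates, whereas near-duplicates need a similarity-based check. The function name `find_exact_leaks` and the toy data are hypothetical, and this is not the implementation behind the linked feature.

```python
import hashlib
from typing import Dict, List, Tuple


def find_exact_leaks(
    train: Dict[str, bytes], test: Dict[str, bytes]
) -> List[Tuple[str, str]]:
    """Return (train_id, test_id) pairs whose raw bytes are identical."""
    train_hashes: Dict[str, str] = {}
    for sample_id, data in train.items():
        digest = hashlib.sha256(data).hexdigest()
        # Keep the first train sample seen for each distinct content hash.
        train_hashes.setdefault(digest, sample_id)

    leaks: List[Tuple[str, str]] = []
    for sample_id, data in test.items():
        digest = hashlib.sha256(data).hexdigest()
        if digest in train_hashes:
            leaks.append((train_hashes[digest], sample_id))
    return leaks


# Toy stand-ins for image file contents.
train_split = {"train_0": b"cat-image-bytes", "train_1": b"dog-image-bytes"}
test_split = {"test_0": b"dog-image-bytes", "test_1": b"bird-image-bytes"}

leaks = find_exact_leaks(train_split, test_split)
print(leaks)
```

Any pair reported here means the test score for that sample partly measures memorization rather than generalization.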

Class-wise Autoencoders Measure Classification Difficulty and Detect Label Mistakes

Understanding how hard a machine learning problem is has long been elusive. Not anymore.

Paper Link: arxiv.org/abs/2412.02596

GitHub Repo: github.com/voxel51/reco...

Zero-Shot Coreset Selection: Efficient Pruning for Unlabeled Data

Training models requires massive amounts of labeled data. ZCore shows you that you need less labeled data to train good models.

Paper Link: arxiv.org/abs/2411.15349

GitHub Repo: github.com/voxel51/zcore
