https://hazeldoughty.github.io

The VQA benchmark only scratches the surface of what can be evaluated with annotations this detailed.

Check out the website if you want to know more: hd-epic.github.io

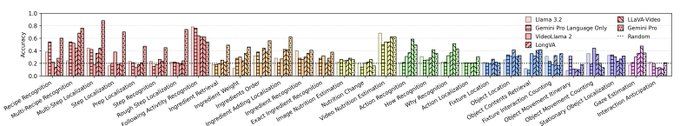

Our benchmark tests understanding of recipes, ingredients, nutrition, fine-grained actions, 3D perception, object movement and gaze. Current models have a long way to go: the best model reaches 38%, against a human baseline of 90%.

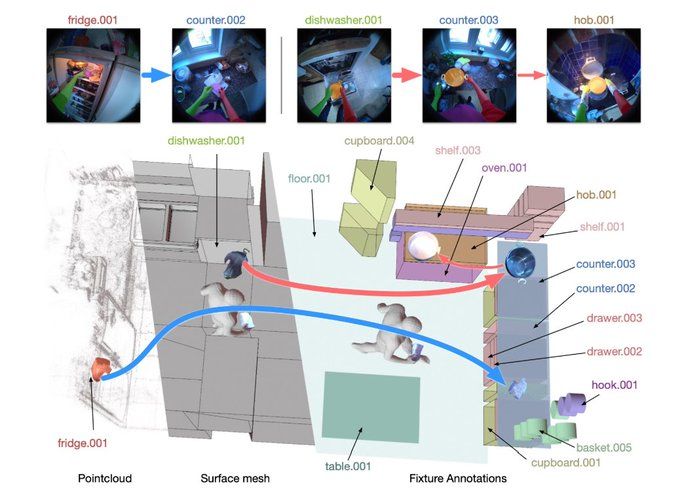

We reconstruct participants' kitchens and annotate every time an object is moved.

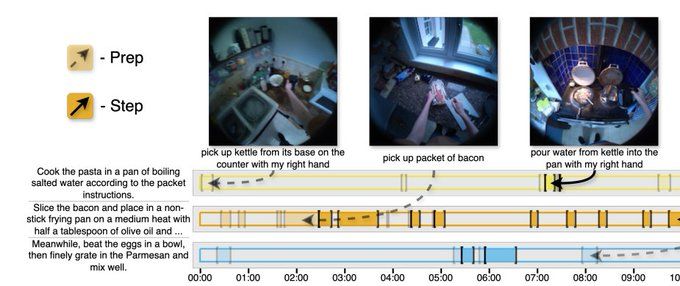

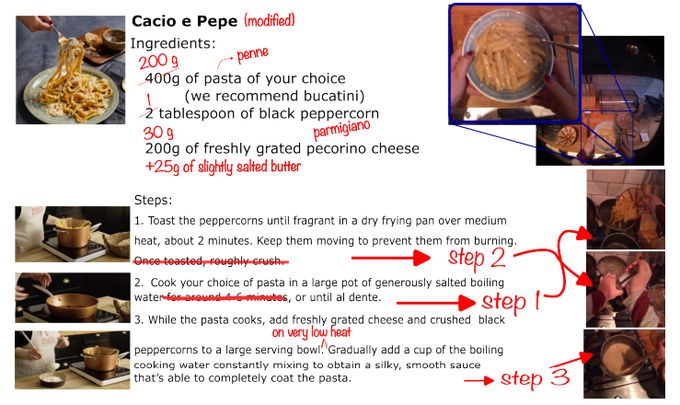

Every action has a dense description that covers not only what happens in detail, but also how and why it happens.

We collect details of all the recipes participants chose to perform over 3 days in their own kitchens, alongside ingredient weights and nutrition.

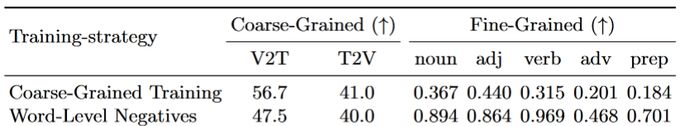

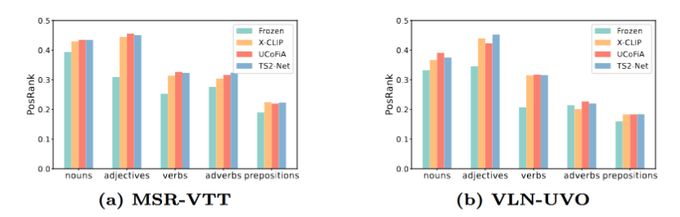

We also see that good coarse-grained performance does not necessarily indicate good fine-grained performance.

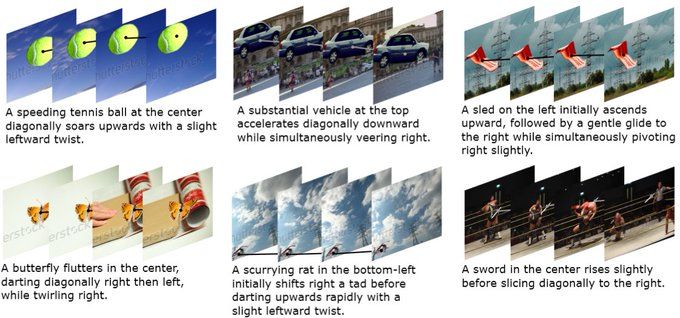

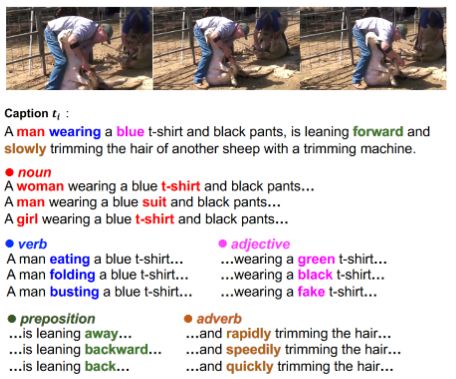

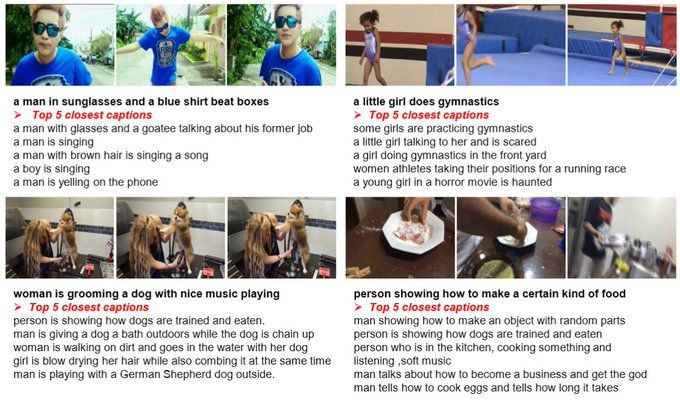

Our approach automatically creates new fine-grained negative captions and can be applied to any existing dataset.

Captions thus rarely differ by a single word or concept.
