haokunliu.com

- github.com/ChicagoHAI/l...

- github.com/ChicagoHAI/l...

- github.com/ChicagoHAI/i...

- github.com/ChicagoHAI/i...

- github.com/ChicagoHAI/l...

- github.com/ChicagoHAI/l...

Full blog with technical details:

hypogenic.ai/blog/weekly-...

Substack: open.substack.com/pub/cichicag...

Because agents clearly can accelerate early-stage exploration. But they need human oversight at every step. Transparent benchmarking beats cherry-picked demos. Community feedback improves agents faster. And honestly, we're all figuring this out together.

That's what I call the "meta intelligence" gap.

Some agents ran experiments on faked human data, used undersized models even though compute was available, or described simple answer reweighting as "multi-agent interactions". Resource collection and allocation are a bottleneck, but more importantly, the agents do not know when to search or seek help.

Agents can actually design and run small experiments: sometimes to seed bigger studies, sometimes as sanity checks, and sometimes to straight-up refute the original hypothesis. That kind of evidence is way more useful than “LLM-as-a-judge says the idea is good.”

Do LLMs have different types of beliefs?

Can formal rules make AI agents honest about their uncertainty?

Can LLMs temporarily ignore their training to follow new rules?

→ Submit your research idea or upvote existing ones (tag: "Weekly Competition")

→ Each Monday we select top 3 from previous week

→ We run experiments using research agents

→ Share repos + findings back on IdeaHub

Vote here: hypogenic.ai/ideahub

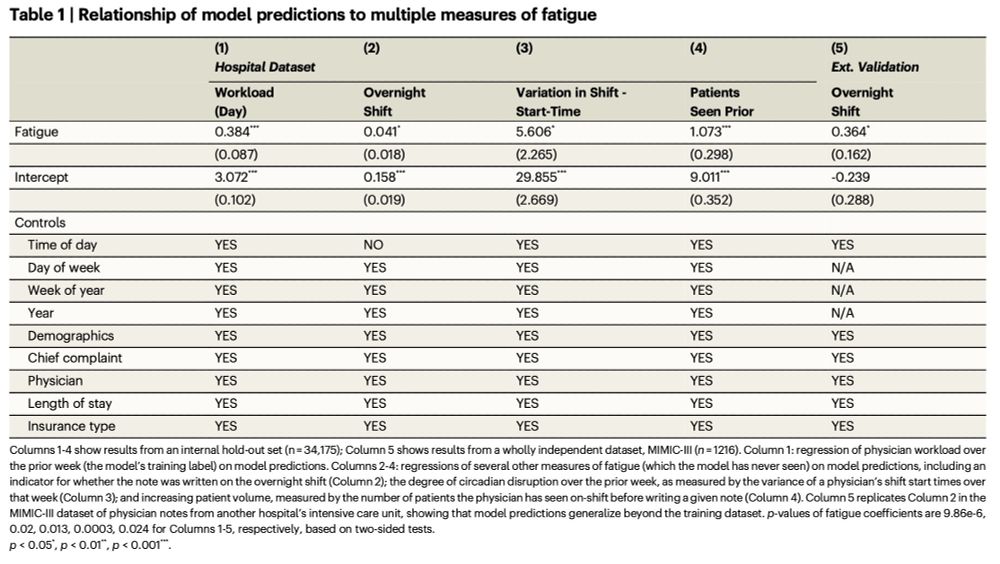

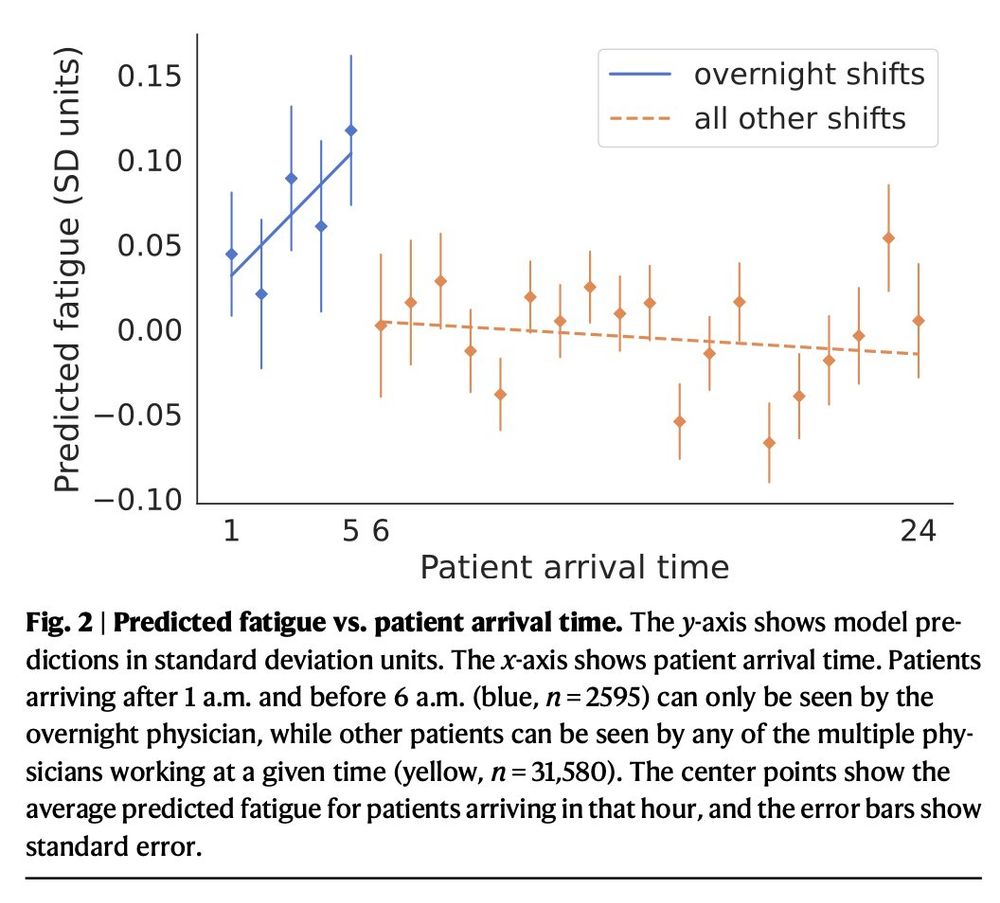

1. Who’s working an overnight shift (in our data + external validation in MIMIC)

2. Who’s working on a disruptive circadian schedule

3. How many patients has the doc seen *on the current shift*
