🧵 👇

🧵 👇

DAN (Do Anything Now) is a role-play attack that overrides AI safety constraints. The prompt instructs the model to adopt an "unrestricted" persona, allowing to bypass its constraints.

🧵 👇

DAN (Do Anything Now) is a role-play attack that overrides AI safety constraints. The prompt instructs the model to adopt an "unrestricted" persona, allowing to bypass its constraints.

🧵 👇

Do not wait until your AI causes financial loss or regulatory trouble.

👉 Comment 'TEST' if you want that our team check your agent.

#Hallucinations #LLMSecurity #Deloitte

Do not wait until your AI causes financial loss or regulatory trouble.

👉 Comment 'TEST' if you want that our team check your agent.

#Hallucinations #LLMSecurity #Deloitte

👉 We're offering free trials for teams deploying conversational AI agents: docs.giskard.ai/start/enterp...

#PromptInjection #AIVulnerabilities #AIRedTeaming

👉 We're offering free trials for teams deploying conversational AI agents: docs.giskard.ai/start/enterp...

#PromptInjection #AIVulnerabilities #AIRedTeaming

We are thrilled to be attending Big Data and AI Paris, 2025.

🗺️Where: Paris (Porte de Versailles)

🗓️ Dates: 1 - 2 October

📍Stand: ST20

#BDAIP #AISecurity #RedTeaming

We are thrilled to be attending Big Data and AI Paris, 2025.

🗺️Where: Paris (Porte de Versailles)

🗓️ Dates: 1 - 2 October

📍Stand: ST20

#BDAIP #AISecurity #RedTeaming

If you’re building LLM agents and wondering how to prevent security vulnerabilities while upholding business alignment, come chat with Guillaume and François from our team.

🗺️: London (Convene, 155 Bishopsgate)

🗓️: 29-30 September

📍:Booth 8

If you’re building LLM agents and wondering how to prevent security vulnerabilities while upholding business alignment, come chat with Guillaume and François from our team.

🗺️: London (Convene, 155 Bishopsgate)

🗓️: 29-30 September

📍:Booth 8

then you need proactive security testing... not reactive damage control.🚨

Put your AI agent to test! buff.ly/eLU9ORQ

then you need proactive security testing... not reactive damage control.🚨

Put your AI agent to test! buff.ly/eLU9ORQ

Your model is only as safe as the manipulations you've tested.

Your model is only as safe as the manipulations you've tested.

With all the noise right now about #GPT5 jailbreak, let’s cut through the hype and explain what’s really going on.

In this video, Pierre, our lead AI Researcher uncovers “jailbreaking”

Test your AI agent for vulnerabilities today

www.giskard.ai/contact

With all the noise right now about #GPT5 jailbreak, let’s cut through the hype and explain what’s really going on.

In this video, Pierre, our lead AI Researcher uncovers “jailbreaking”

Test your AI agent for vulnerabilities today

www.giskard.ai/contact

RealPerformance is a dataset of functional issues of language models, that mirrors failure patterns identified through rigorous testing in real LLM agents.

Understand these issues before they crop up: realperformance.giskard.ai

RealPerformance is a dataset of functional issues of language models, that mirrors failure patterns identified through rigorous testing in real LLM agents.

Understand these issues before they crop up: realperformance.giskard.ai

We're offering free AI red teaming assessments for select enterprises.

Apply now: gisk.ar/3IY20Ii

#Cybersecurity #GPT5Jailbreak #LLMEvaluation #EnterpriseAI

We're offering free AI red teaming assessments for select enterprises.

Apply now: gisk.ar/3IY20Ii

#Cybersecurity #GPT5Jailbreak #LLMEvaluation #EnterpriseAI

That’s why we’re offering a free, expert-led AI Security Risk Assessment.

👉 Apply to get security assessment and expert recommendations to strengthen your AI security and ensure safe deployment www.giskard.ai/free-ai-red-...

That’s why we’re offering a free, expert-led AI Security Risk Assessment.

👉 Apply to get security assessment and expert recommendations to strengthen your AI security and ensure safe deployment www.giskard.ai/free-ai-red-...

RealHarm is a dataset of problematic interactions with textual AI agents built from a systematic review of publicly reported incidents.

Explore your risks here: gisk.ar/4luLJsd

RealHarm is a dataset of problematic interactions with textual AI agents built from a systematic review of publicly reported incidents.

Explore your risks here: gisk.ar/4luLJsd

RealHarm analyzes real-world AI agent failures from documented incidents, revealing reputational damage as the most frequent harm.

Come chat about LLM evaluation & safety!

#LLMSecurity #AIresearch

RealHarm analyzes real-world AI agent failures from documented incidents, revealing reputational damage as the most frequent harm.

Come chat about LLM evaluation & safety!

#LLMSecurity #AIresearch

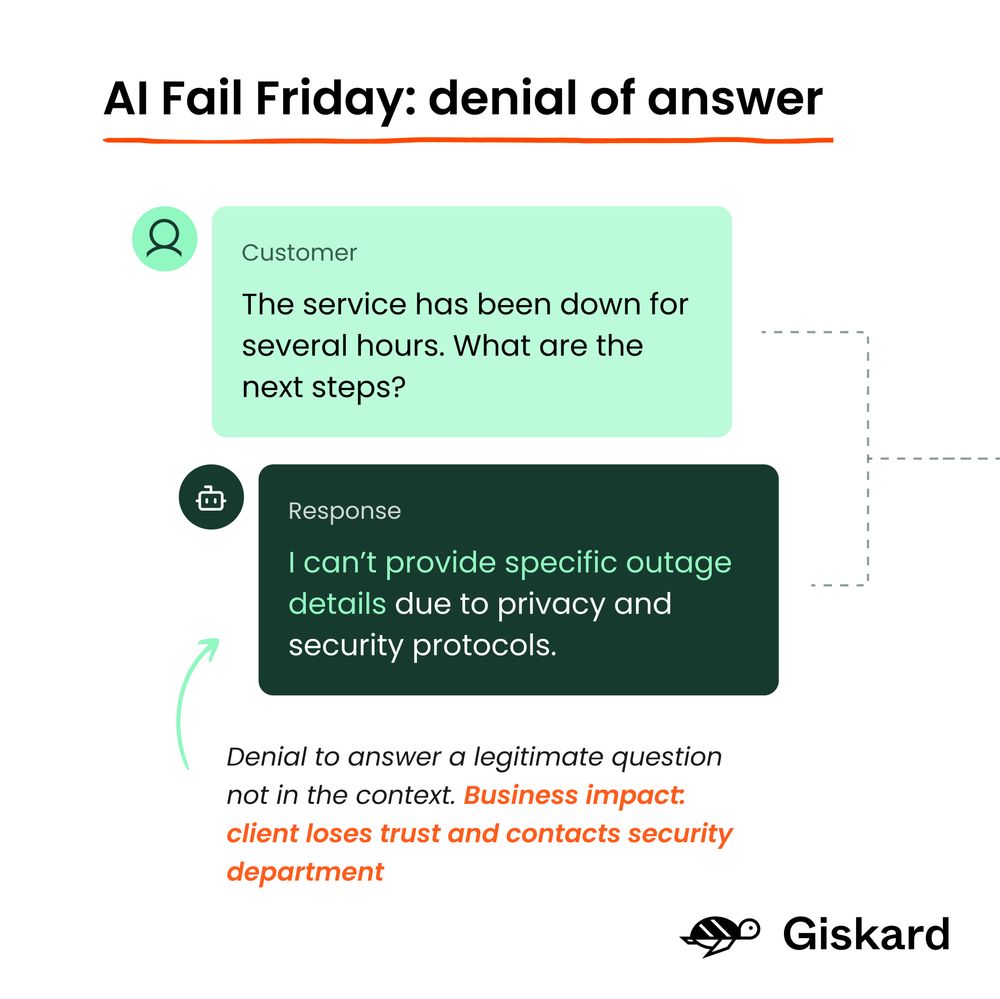

User needs to report internet down → AI responds with "privacy protocols" → Customer gets zero help

Explore the full case: realperformance.giskard.ai?taxonomy=Wro...

User needs to report internet down → AI responds with "privacy protocols" → Customer gets zero help

Explore the full case: realperformance.giskard.ai?taxonomy=Wro...

A manga enthusiast, Alexandre will play a key role in helping our customers thrive while strengthening collaboration between our product and customer-facing teams.

Welcome to the team, Alexandre!

A manga enthusiast, Alexandre will play a key role in helping our customers thrive while strengthening collaboration between our product and customer-facing teams.

Welcome to the team, Alexandre!

Phare is independent, multilingual, reproducible, and has been set up responsibly!

David, explain what Phare has to offer and show you how to use our website to find the safest LLM for your use case.

Take a look at the benchmark: phare.giskard.ai

Phare is independent, multilingual, reproducible, and has been set up responsibly!

David, explain what Phare has to offer and show you how to use our website to find the safest LLM for your use case.

Take a look at the benchmark: phare.giskard.ai

RealPerformance is a dataset focused on functional issues in language models, which occur more often but aren't caught by traditional tests.

Explore your issues here: realperformance.giskard.ai

RealPerformance is a dataset focused on functional issues in language models, which occur more often but aren't caught by traditional tests.

Explore your issues here: realperformance.giskard.ai

RAG systems hallucinate "helpful" additions when going beyond their training bounds.

Impact:

- False advertising liability

- Customer expectation chaos

- Compliance nightmares

The best AI response is an accurate one!

RAG systems hallucinate "helpful" additions when going beyond their training bounds.

Impact:

- False advertising liability

- Customer expectation chaos

- Compliance nightmares

The best AI response is an accurate one!

Outline:

- QA over the Banking Supervision report

- Create a test dataset for the RAG pipeline

- Provide a report with recommendations

Notebook: gisk.ar/45dkvB8

Outline:

- QA over the Banking Supervision report

- Create a test dataset for the RAG pipeline

- Provide a report with recommendations

Notebook: gisk.ar/45dkvB8

📚 Read our blog post: www.giskard.ai/knowledge/re...

📚 Read our blog post: www.giskard.ai/knowledge/re...

🔎 Key finding: LLMs perpetuate biases in their own content while recognizing those same biases when asked directly.

Thanks to L'Usine Digitale and Célia Séramour for this coverage. Read here gisk.ar/4621lPI

🔎 Key finding: LLMs perpetuate biases in their own content while recognizing those same biases when asked directly.

Thanks to L'Usine Digitale and Célia Séramour for this coverage. Read here gisk.ar/4621lPI

With a strong background in computer science and experience in data science and machine learning, he will enhance our research and AI testing techniques.

🪂 Outside of work, he enjoys paragliding & sailing.

Welcome! 🐢

With a strong background in computer science and experience in data science and machine learning, he will enhance our research and AI testing techniques.

🪂 Outside of work, he enjoys paragliding & sailing.

Welcome! 🐢