pnc.st/s/forecast/...

Society isn’t prepared for a world with superhuman AI. If you want to help, consider applying to one of our research roles:

forethought.org/careers/res...

Not sure if you’re a good fit? See more in the reply (or just apply — it doesn’t take long)

David Duvenaud joins the podcast —

pnc.st/s/forecast/...

Raymond Douglas joins the podcast to discuss “Gradual Disempowerment”

Listen: pnc.st/s/forecast/...

Cullen O’Keefe (Institute for Law & AI) joins the podcast to discuss “law-following AI”.

Listen: pnc.st/s/forecast/...

In ‘The Basic Case for Better Futures’, Will MacAskill and Philip Trammell describe a more formal way to model the future in those terms.

First up is ‘AI-Enabled Coups: How a Small Group Could Use AI to Seize Power’.

Read it here: www.forethought.org/research/ho...

From the podcast on ‘Better Futures’ —

Read it here: www.forethought.org/research/pe...

Will MacAskill on the better futures model, from our first video podcast:

pnc.st/s/forecast/...

pnc.st/s/forecast/...

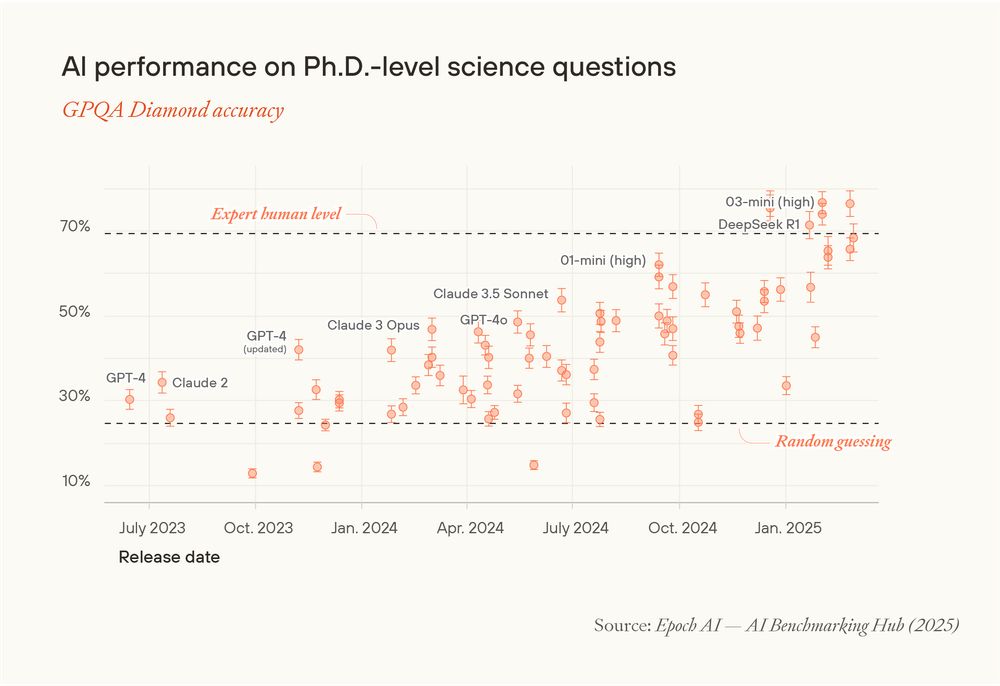

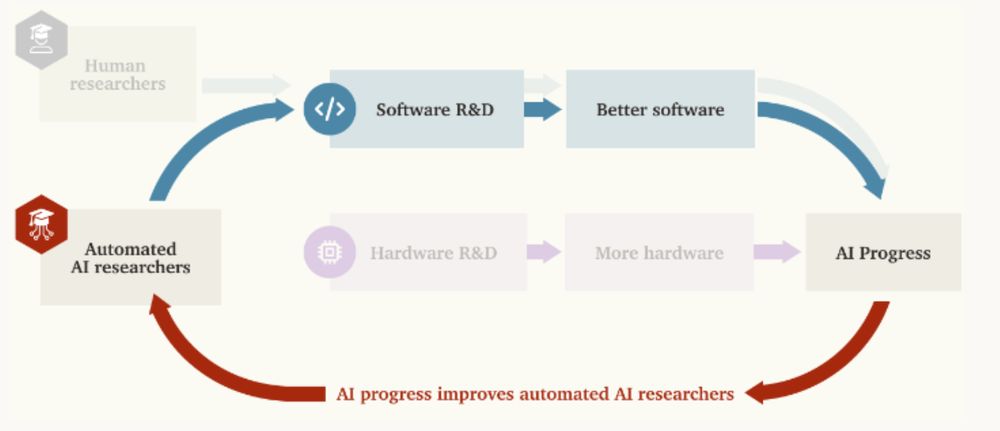

As AI R&D is automated, AI progress may dramatically accelerate. Skeptics counter that hardware stock can only grow so fast. But what if software advances alone can sustain acceleration?

x.com/daniel_2718...
