Finbarr

@finbarr.bsky.social

building the future

research at midjourney, deepmind. slinging ai hot takes 🥞 at artfintel.com

This is one of my all time favorite papers:

openreview.net/forum?id=ByJ...

It shows that, under fair experimental evaluation, lstms do just as well as a bunch of “improvements”

On the State of the Art of Evaluation in Neural Language Models

Show that LSTMs are as good or better than recent innovations for LM and that model evaluation is often unreliable.

openreview.net

December 7, 2024 at 3:51 PM

Really fun conversation with @natolambert.bsky.social!

This is one I've wanted to do for a while: ask why RL has been continually underestimated in the last 2 years.

Interviewing Finbarr Timbers on the "We are So Back" Era of Reinforcement Learning

Interconnects interview #11. An overview on the past, present, and future of RL.

https://buff.ly/3Vqrbqj

Interviewing Finbarr Timbers on the "We are So Back" Era of Reinforcement Learning

Listen now | Interconnects interview #11. An overview on the past, present, and future of RL.

www.interconnects.ai

December 5, 2024 at 8:43 PM

Apparently there *is* another finbar(r) in Alberta.

December 3, 2024 at 6:19 AM

New homeowner fear unlocked; someone hit and ran my neighbor’s garage

December 3, 2024 at 6:18 AM

there’s a type of “not trying” which means not executing at the level of competence of a $XX billion corporation

this is the complaint about eg Google products. They’re good! better than most startups! But not “trillion dollar corporation famed for engineering expertise” good.

December 1, 2024 at 4:50 PM

I watched too many ski movies and now am trying to convince my wife we should move to Alaska

November 30, 2024 at 4:56 AM

building my own mlp implementation from scratch in numpy, including backprop, remains one of the most educational exercises I’ve done

November 30, 2024 at 4:18 AM

Love this. Very clean implementations of various inference optimizations.

November 26, 2024 at 11:20 PM

Agreed! Folk knowledge is worth publishing!

Does everyone in your community agree on some folk knowledge that isn’t published anywhere? Put it in a paper! It’s a pretty valuable contribution

November 26, 2024 at 10:53 PM

my latest article for Artificial Fintelligence is up. i cover the evolution of Vision Language Models over the past few years, from complex architectures to surprisingly simple & effective ones.

(link in next tweet)

November 26, 2024 at 4:19 PM

the tech industry is like California: it gets a few of the most important things right, so it can make a lot of other mistakes and still be wildly successful

I suspect we'd see similar outcomes if there were e.g. a Cravath Legal Resident program or a Mayo Clinic Surgical Resident program

the barrier to entry is b/c of tech's lack of credential gate keeping, which is one of tech’s greatest advantages

November 25, 2024 at 4:09 PM

the remarkable success of the Google brain (and OpenAI) resident programs is an indication to me that smart, hardworking people can do more than you expect

November 25, 2024 at 4:09 PM

My favorite thing about Bsky so far is not having the dumb algo requirements. Link in reply and not mentioning substack is stupid!

November 24, 2024 at 4:34 PM

for all the work we researchers do, the best way to improve your model by far is to 1) use better data and 2) use higher quality data

November 24, 2024 at 4:19 PM

If I was doing a phd, this would be one of my top choices for programs.

Hello BlueSky! Joao Henriques (joao.science) and I are hiring a fully funded PhD student (UK/international) for the FAIR-Oxford program. The student will spend 50% of their time @UniofOxford and 50% @MetaAI (FAIR) in London, while completing a DPhil (Oxford PhD). Deadline: 2nd of Dec AOE!!

João F. Henriques

Research of Joao F. Henriques

joao.science

November 23, 2024 at 4:39 PM

active learning is top of my list of "things that seem like they should work but don't"

I haven't had much success when I actually implement it

November 23, 2024 at 4:29 PM

Why does DeepSeek have so many GPUs? (Purportedly >10k H100s). Is this useful to their main hedge fund business?

November 22, 2024 at 3:09 PM

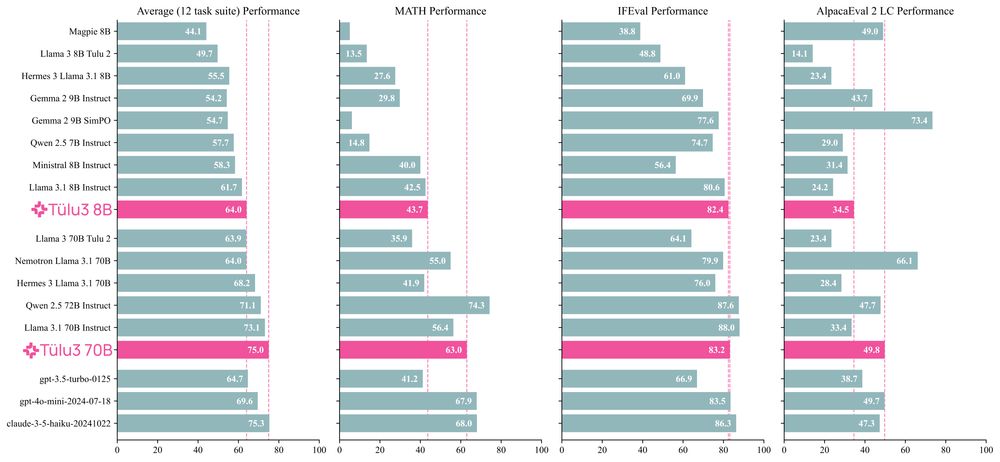

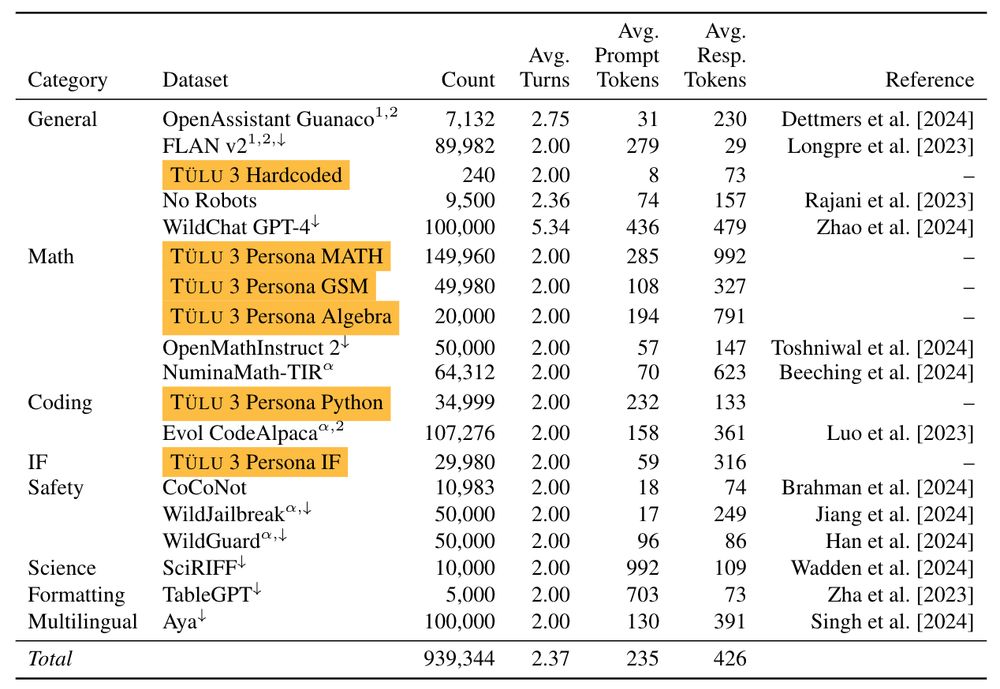

Tulu is very exciting!

I've spent the last two years scouring all available resources on RLHF specifically and post training broadly. Today, with the help of a totally cracked team, we bring you the fruits of that labor — Tülu 3, an entirely open frontier model post training recipe. We beat Llama 3.1 Instruct.

Thread.

November 21, 2024 at 8:26 PM

Deepseek r1 seems really good

November 20, 2024 at 3:10 PM

If I was in a position to direct significant research resources/headcount I’d be putting a significant effort behind better exploration in RL.

November 19, 2024 at 2:47 PM

honestly if houses in Whistler were less expensive I’d be way less motivated to earn money

the prospect of retiring there motivates a non-trivial amount of my life

November 19, 2024 at 2:45 PM

the amount of time I spend thinking about skiing rn is ridiculous

November 19, 2024 at 2:44 PM

ok but seriously at some point we really need to solve exploration in RL

November 19, 2024 at 8:57 AM

Reposted by Finbarr

galaxy brain take: Google no moats memo is a psyop and a no-downside shot against openAI

It’s a little too flattering to the conceits of open source etc

May 5, 2023 at 4:14 AM

chain of thought reasoning keeps racking up the Ws

May 4, 2023 at 7:59 PM
