Erik Brockbank

@erikbrockbank.bsky.social

Postdoc @Stanford Psychology

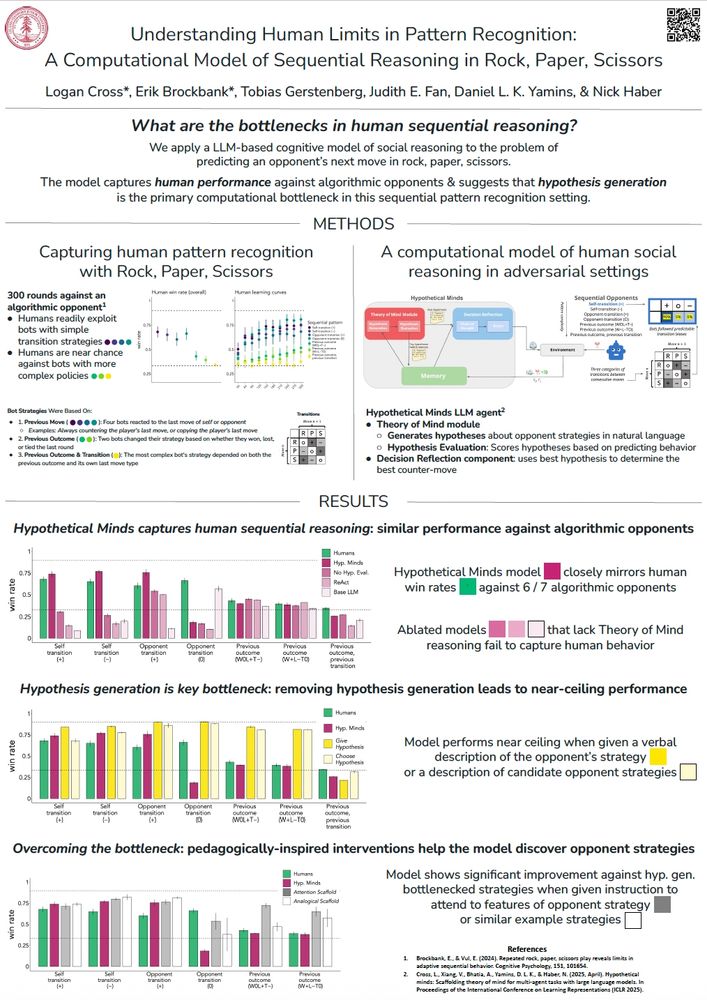

Hmm, the poster image seems to be a black square. That's fun.

Here's the intended image?


August 12, 2025 at 11:02 PM


This project came together with a wonderful crew of collaborators, co-led by Logan Cross with @tobigerstenberg.bsky.social, @judithfan.bsky.social, @dyamins.bsky.social, and Nick Haber

August 12, 2025 at 10:56 PM


Our work shows how LLM-based agents can serve as models of human cognition, helping us pinpoint the bottlenecks in our own learning.

Read the full paper here: tinyurl.com/mr356hyv

Code & Data: tinyurl.com/3napnpsm

Come check out our poster at CCN on Wednesday!


August 12, 2025 at 10:56 PM


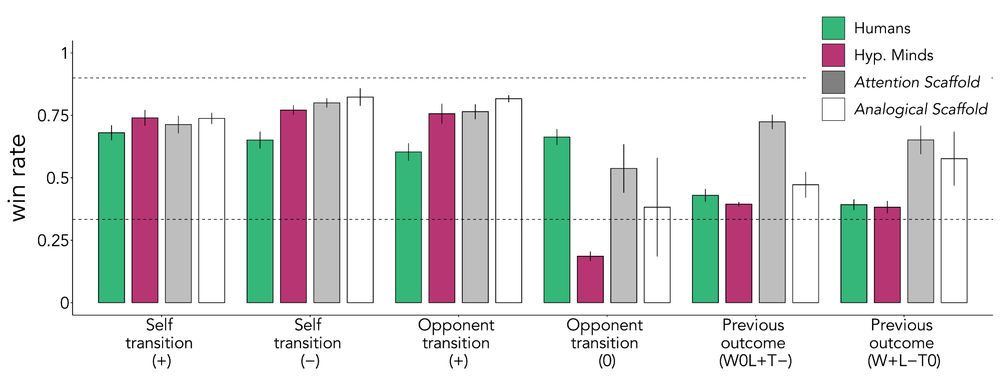

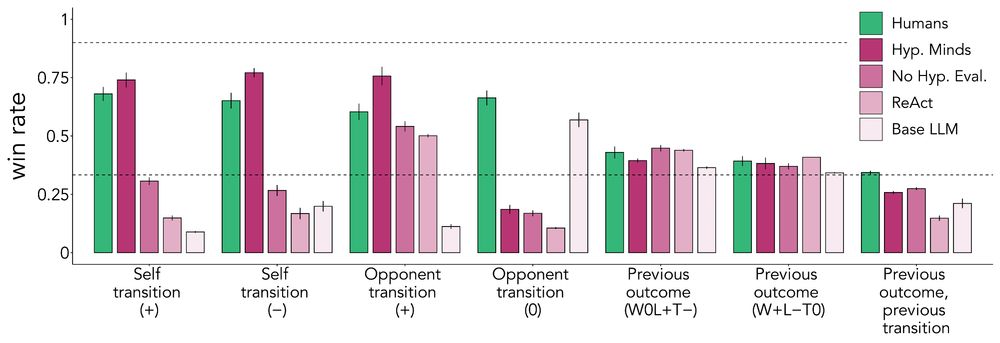

In sum: limitations in pattern learning in this setting aren't just about memory or reasoning power, but about considering the right strategy space.

These results also make a prediction: the same kind of verbal scaffolding might help humans overcome cognitive bottlenecks in the same task.


August 12, 2025 at 10:56 PM


So, can we "teach" the model to think of better hypotheses?

By giving the model verbal scaffolding that directed its attention to relevant features (e.g., "pay attention to how the opponent's move changes after a win vs. a loss"), it discovered complex patterns it had previously missed.


August 12, 2025 at 10:56 PM
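To make the "win vs. loss" cue concrete, here is a minimal Python sketch of the kind of tally that scaffolding points toward. It counts how an opponent's move changes after the opponent wins, loses, or ties. The move encoding, the stay/up/down labels, and all function names are hypothetical choices for this illustration, not code from the paper.

```python
from collections import Counter, defaultdict

# BEATS[m] is the move that m beats (hypothetical encoding for this sketch).
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}
# BEATEN_BY[m] is the move that beats m.
BEATEN_BY = {loser: winner for winner, loser in BEATS.items()}


def result(a: str, b: str) -> str:
    """Result for a player who plays `a` against a player who plays `b`."""
    if a == b:
        return "tie"
    return "win" if BEATS[a] == b else "loss"


def transition(prev: str, nxt: str) -> str:
    """How the opponent's move changed between consecutive rounds."""
    if nxt == prev:
        return "stay"
    # "up": switch to the move that beats your own previous move; "down": the other one.
    return "up" if nxt == BEATEN_BY[prev] else "down"


def transitions_by_outcome(opp_moves, my_moves):
    """Tally the opponent's move transitions, split by the opponent's previous result."""
    table = defaultdict(Counter)
    for t in range(1, len(opp_moves)):
        prev_result = result(opp_moves[t - 1], my_moves[t - 1])
        table[prev_result][transition(opp_moves[t - 1], opp_moves[t])] += 1
    return table
```

For example, an opponent that tends to stay after wins and "upgrade" after losses would show a large `stay` count under `win` and a large `up` count under `loss`.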


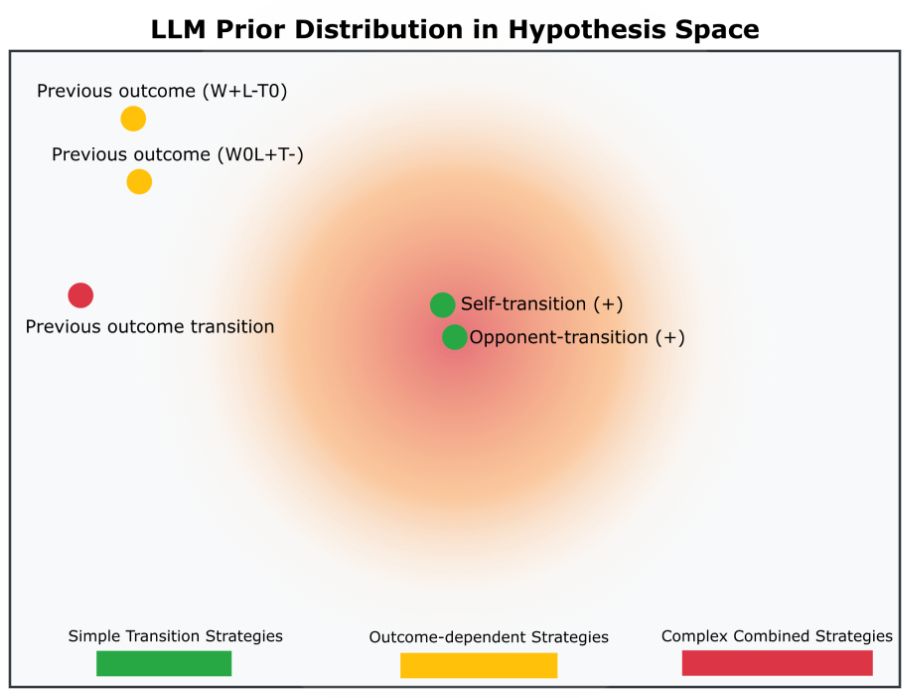

How can we help the model generate the right hypotheses? We started with simple interventions that people could do too: making the model generate more hypotheses, or more diverse ones (by increasing the LLM's sampling temperature). Neither worked. HM was stuck searching in the wrong part of the hypothesis space.

August 12, 2025 at 10:56 PM
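For readers unfamiliar with "temperature": an LLM samples each token from a softmax over scores (logits), and the temperature divides those scores before the softmax is applied. The minimal Python sketch below works over three options with made-up logits; the function names are hypothetical. It shows that a higher temperature flattens the distribution, which produces more varied samples, while never changing which option is ranked highest.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert logits to probabilities; higher temperature flattens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample(options, logits, temperature, rng=random):
    """Draw one option according to the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(options, weights=probs, k=1)[0]
```

Because dividing by a positive temperature preserves the order of the logits, temperature changes how spread out the samples are, not which option the model favors most.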


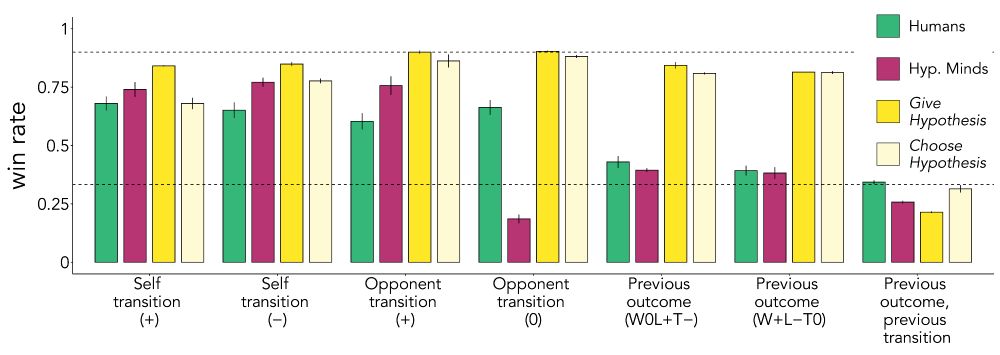

The answer seems to be Hypothesis Generation.

When we gave HM an explicit description of the opponent's strategy, its performance soared to >80% win rates against almost all bots. When we gave it a list of possible strategies, HM was able to accurately evaluate which one fit the data best.


August 12, 2025 at 10:56 PM


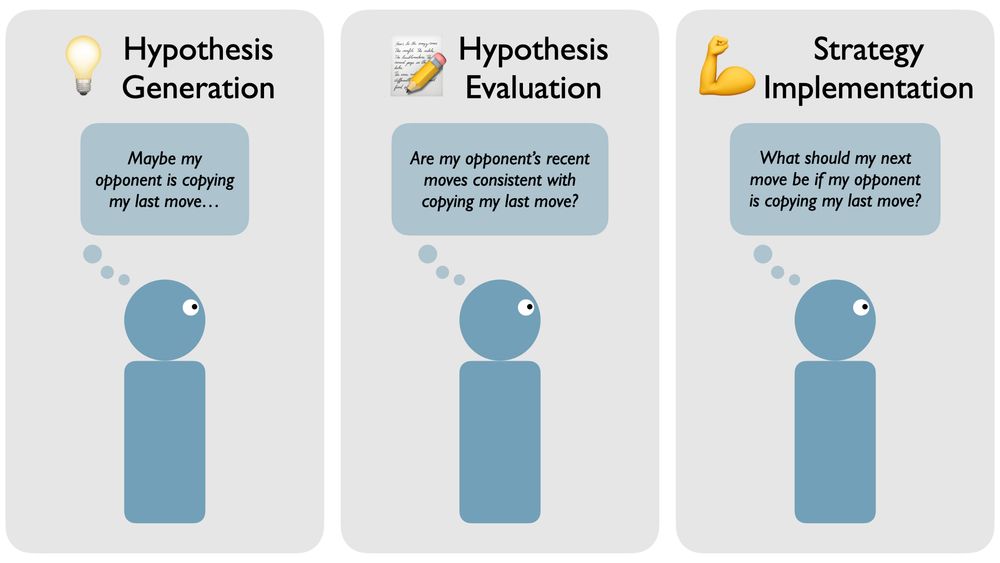

This led to our central question: What is the main bottleneck for both humans and our model?

* Coming up with the right idea? (Hypothesis Generation)

* Figuring out if an idea is correct? (Hypothesis Evaluation)

* Knowing what move to make with the right idea? (Strategy Implementation)


August 12, 2025 at 10:56 PM


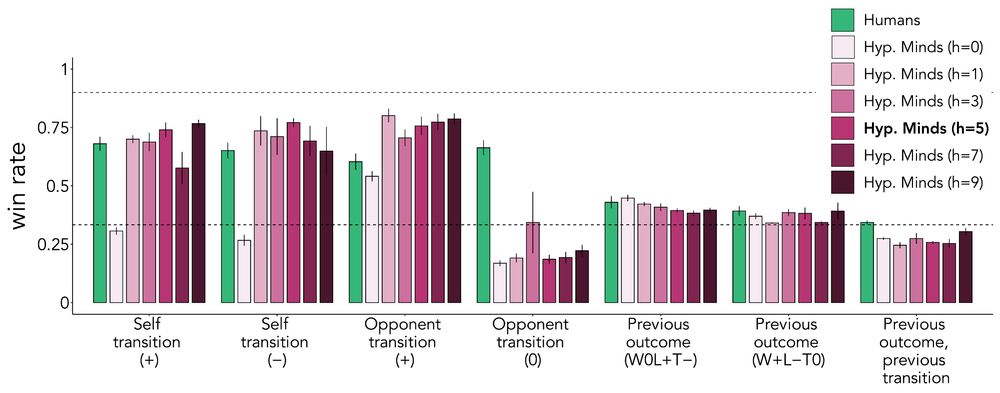

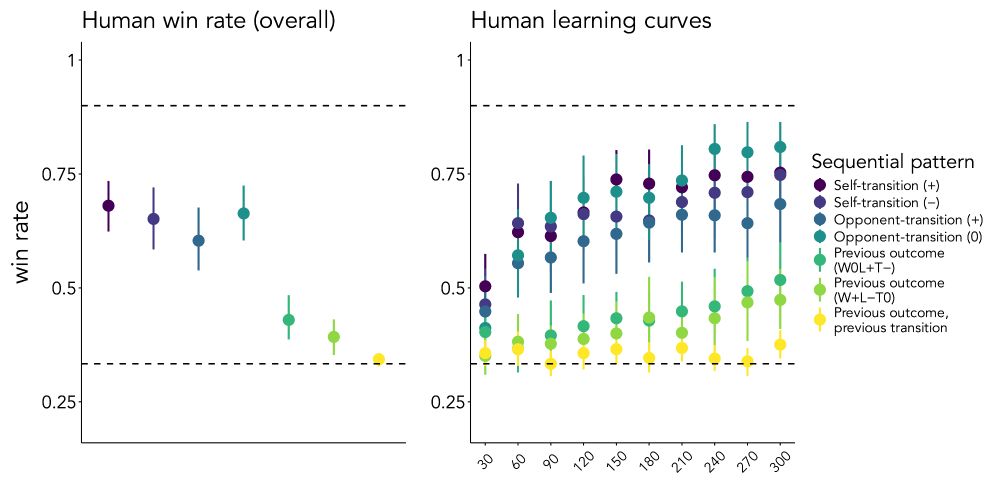

Here's where it gets interesting. When we put HM in the same experiment, it closely mirrored human performance: it succeeded against simple opponents and performed around chance against complex ones, suggesting HM may capture key aspects of the cognitive processes involved in this task.

August 12, 2025 at 10:56 PM


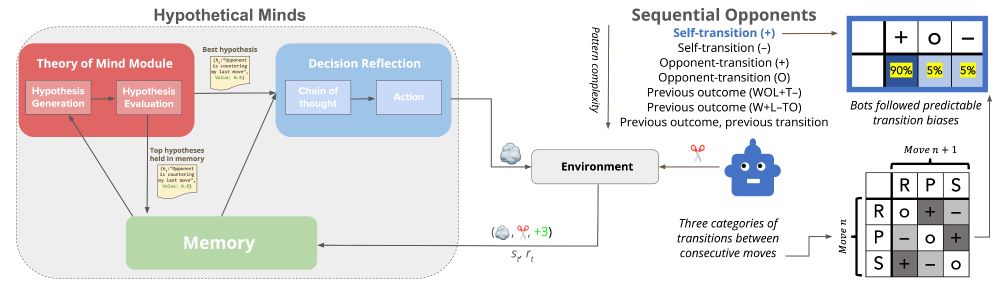

To find out, we deployed an LLM-based agent called Hypothetical Minds (HM) as a model of the cognitive processes needed to adapt to RPS opponents.

HM tries to outwit its opponent by generating and testing natural language hypotheses about their strategy (e.g., "the opponent copies my last move").


August 12, 2025 at 10:56 PM
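A toy version of the generate → evaluate → act loop might help. In this minimal Python sketch, the natural-language hypotheses that HM gets from an LLM are replaced by a hand-written list of Python predictors, which stands in for "generation". Each predictor is scored on how well it predicts the opponent's past moves ("evaluation"), and the best-fitting one is used to pick a counter-move ("implementation"). All names and candidate strategies here are hypothetical. This is not HM's actual implementation.

```python
from typing import Callable, Dict, List

# COUNTER[m] is the move that beats m.
COUNTER = {"rock": "paper", "paper": "scissors", "scissors": "rock"}

# A hypothesis predicts the opponent's next move from (opponent history, my history).
Hypothesis = Callable[[List[str], List[str]], str]

# Hypothetical candidate strategies, written as code instead of natural language.
HYPOTHESES: Dict[str, Hypothesis] = {
    "copies my last move": lambda opp, mine: mine[-1],
    "repeats its own last move": lambda opp, mine: opp[-1],
    "plays what beats my last move": lambda opp, mine: COUNTER[mine[-1]],
}


def accuracy(h: Hypothesis, opp: List[str], mine: List[str]) -> float:
    """Fraction of rounds (after the first) where h predicted the opponent's move.

    Assumes len(opp) == len(mine) >= 2.
    """
    hits = sum(h(opp[:t], mine[:t]) == opp[t] for t in range(1, len(opp)))
    return hits / (len(opp) - 1)


def best_hypothesis(hypotheses: Dict[str, Hypothesis], opp: List[str], mine: List[str]) -> str:
    """Evaluation step: keep the hypothesis that best fits the observed history."""
    return max(hypotheses, key=lambda name: accuracy(hypotheses[name], opp, mine))


def next_move(hypotheses: Dict[str, Hypothesis], opp: List[str], mine: List[str]) -> str:
    """Implementation step: predict the opponent's next move and play its counter."""
    name = best_hypothesis(hypotheses, opp, mine)
    return COUNTER[hypotheses[name](opp, mine)]
```

If the right strategy is missing from `HYPOTHESES`, the evaluation step can only pick the least-bad wrong one. That is the generation bottleneck in miniature.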


In rock-paper-scissors (RPS), you win by exploiting patterns in your opponent’s moves. We tested people’s ability to do this by having them play 300 rounds of RPS against bots with algorithmic strategies. The finding? People are great at exploiting simple patterns but struggle to detect more complex ones. Why?

August 12, 2025 at 10:56 PM
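What does "exploiting a pattern" mean computationally? Here is a minimal Python sketch. It assumes a hypothetical bot that simply cycles rock → paper → scissors (not one of the study's bots) and a player that counts which move the bot plays after each of its previous moves, then counters the most frequent one. The function names and the 300-round default are illustrative.

```python
import random
from collections import Counter, defaultdict

MOVES = ["rock", "paper", "scissors"]
COUNTER = {"rock": "paper", "paper": "scissors", "scissors": "rock"}  # value beats key


def cycling_bot(round_idx: int) -> str:
    """Hypothetical simple bot: cycles rock -> paper -> scissors."""
    return MOVES[round_idx % 3]


def play(n_rounds: int = 300, seed: int = 0) -> float:
    """Return the win rate of a transition-counting player against cycling_bot."""
    rng = random.Random(seed)
    transitions = defaultdict(Counter)  # bot's previous move -> counts of its next move
    wins = 0
    prev = None
    for t in range(n_rounds):
        if prev is not None and transitions[prev]:
            predicted = transitions[prev].most_common(1)[0][0]
            mine = COUNTER[predicted]
        else:
            mine = rng.choice(MOVES)  # no data yet: play randomly
        theirs = cycling_bot(t)
        wins += int(COUNTER[theirs] == mine)
        if prev is not None:
            transitions[prev][theirs] += 1
        prev = theirs
    return wins / n_rounds
```

Against this bot the player wins every round once it has seen each transition once. Because it only conditions on the bot's previous move, though, it cannot represent patterns that depend on the player's own moves or on who won the last round.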


Since then, we’ve also run a study exploring how good *people* are at this same prediction task.

Come see our poster at CogSci (poster session 1 on Thursday), or watch our video summary if you're attending virtually, to get the full story :)


July 29, 2025 at 7:49 PM


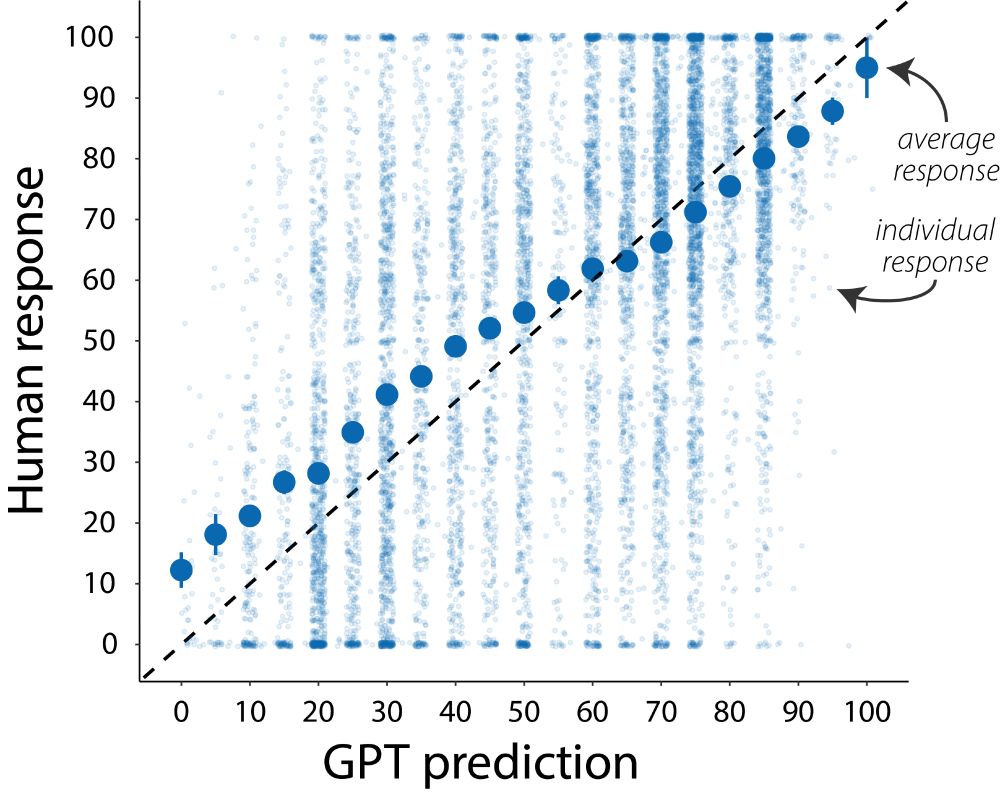

We had GPT-4o use each person’s written answers to guess their responses on the personality scales.

GPT-4o does well, even when we correct for a baseline that simply guesses the most typical responses.

This means that, in some cases, people’s answers to the questions carry information about what they are like.


July 29, 2025 at 7:49 PM
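The thread doesn't spell out how the correction for typical responses works. One common approach, sketched below in Python under that assumption, compares the model's error with the error of a baseline that always guesses the average response. Mean absolute error is an assumed metric here, and the function names are hypothetical; the paper's actual analysis may differ.

```python
from statistics import mean


def mae(preds, actual):
    """Mean absolute error between predicted and actual scale responses."""
    return mean(abs(p - a) for p, a in zip(preds, actual))


def improvement_over_typical(predicted, actual):
    """How much lower the model's error is than an always-guess-the-average baseline.

    Positive values mean the predictions carry information beyond the typical response.
    """
    baseline = [mean(actual)] * len(actual)
    return mae(baseline, actual) - mae(predicted, actual)
```

A model that only ever guessed the average would score zero here, so a positive score is one way to express "does well, even after correcting for typical responses".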


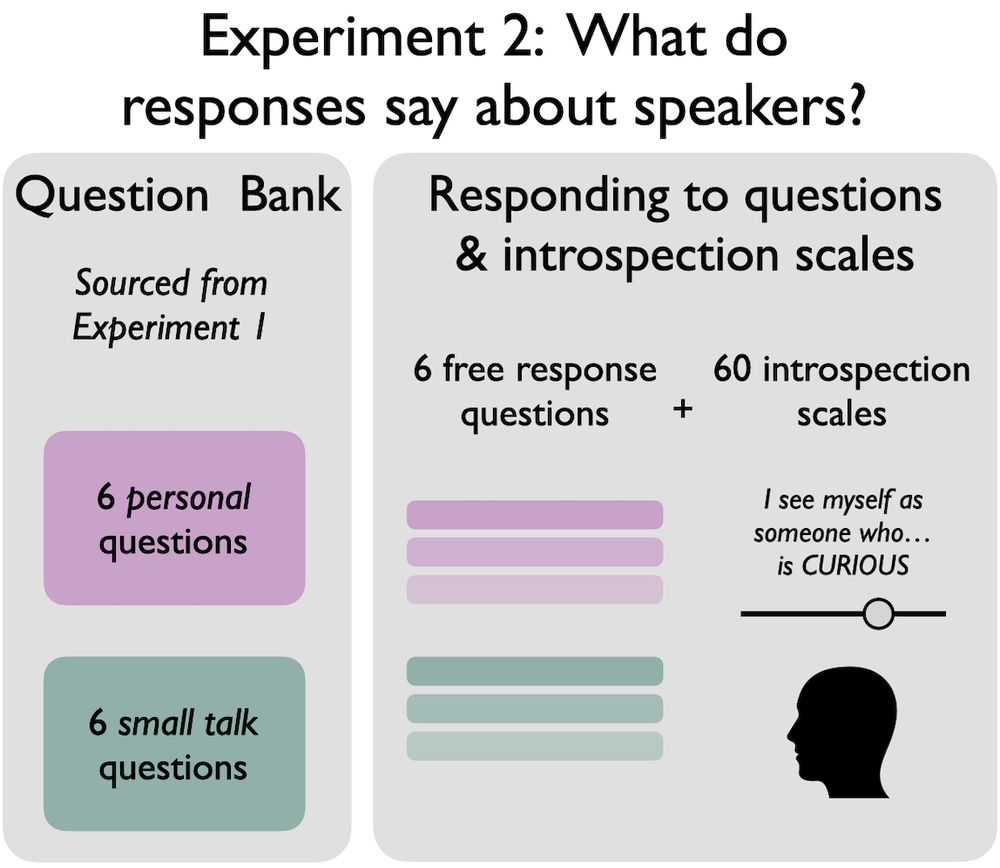

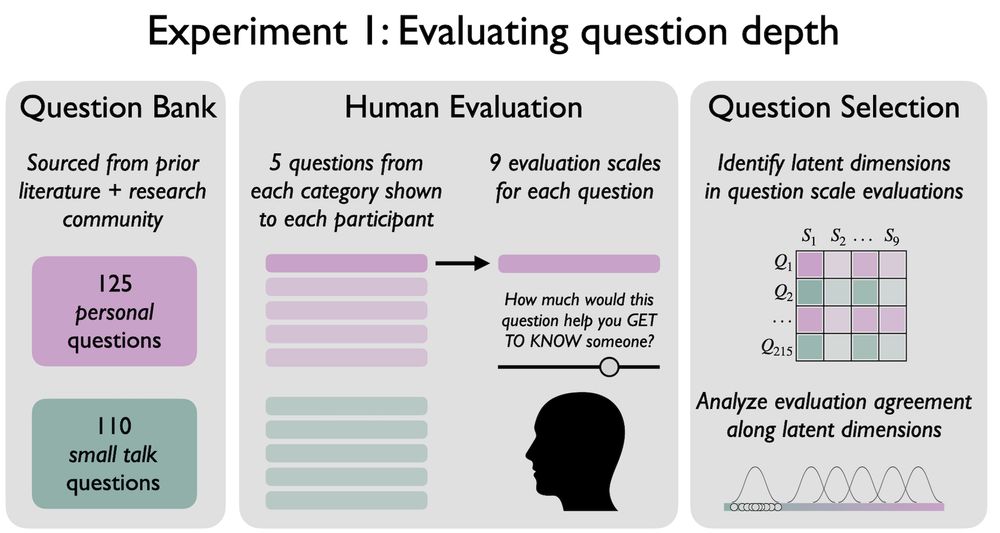

Psychologists often use sliding-scale personality surveys to learn about people’s traits.

Do people learn the same thing about others from their answers to “deep” questions?

In our second study, online participants wrote answers to some of these questions and also completed a personality survey.


July 29, 2025 at 7:49 PM


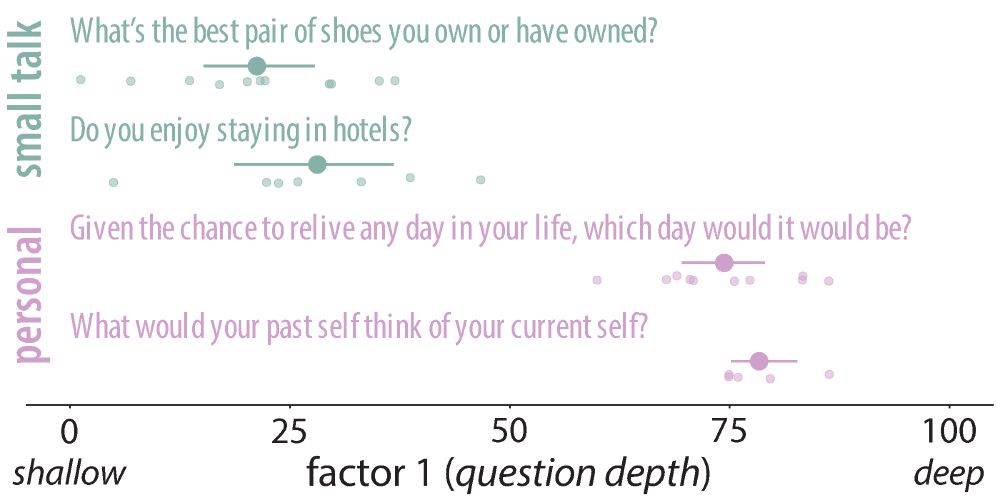

We found that question ratings tended to be similar across all 9 scales and between different people.

If we combine the ratings for each question, we get a pretty good measure of its “interpersonal depth”, with “small talk” Qs at the low end and more “personal” Qs at the high end.


July 29, 2025 at 7:49 PM
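Here is a minimal Python sketch of the combining step. It assumes every scale shares the same numeric range and that a question's depth score is simply the mean of all its ratings across scales and raters; the paper may aggregate differently. The `(question, rater, scale, value)` tuple layout and the function name are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def depth_scores(ratings):
    """Average every rating a question received, across all scales and raters.

    `ratings` is an iterable of (question, rater, scale, value) tuples.
    Returns a dict mapping each question to its mean rating.
    """
    by_question = defaultdict(list)
    for question, _rater, _scale, value in ratings:
        by_question[question].append(value)
    return {q: mean(values) for q, values in by_question.items()}
```

`sorted(scores, key=scores.get)` then lists questions from lowest to highest combined score, which would put "small talk" questions first if they receive lower ratings.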


In our first experiment, we developed a corpus of 235 open-ended questions: half were “small talk” (“favorite sports team?”) and half were “personal” (“greatest fear?”).

We asked online participants to rate the Qs on several scales related to whether each question would help them get to know a stranger.


July 29, 2025 at 7:49 PM


This project asks what kind of questions are most useful for getting to know others.

We made a bank of questions and ran two studies:

1) people evaluated whether the questions would help them get to know somebody

2) we measured what people’s answers reveal about their personality


July 29, 2025 at 7:49 PM


Whoops, I apparently have no idea how graphics work. Please enjoy this hilarious inverted SVG situation, and head to project-nightingale.stanford.edu to see the *real* graphic


March 7, 2025 at 6:00 PM


We’re working on developing those now! Stay tuned for updates from us at Project Nightingale (project-nightingale.stanford.edu), a new collaborative effort to advance the science of how people reason about data!

March 7, 2025 at 5:05 PM
