Excited to announce our new work: "Large-scale Pre-training for Grounded Video Caption Generation" with Cordelia Schmid & @josef-sivic.bsky.social.

Paper: arxiv.org/abs/2503.10781

Project: ekazakos.github.io/grounded_vid...

Code (coming soon): github.com/ekazakos/grove 1/7

We will release code, models and datasets within the next 2 weeks.

We are also working on a search demo for the proposed datasets with user prompts!

I hope to see you all in Honolulu!

When you’re choosing an internship or a job, what you work on and who you work with matter way more than the logo. Don’t optimize for brands. Become the brand!

Eventually these systems build a momentum of their own. That is why choices now matter, things are fluid.

MIRAS = a unifying theory of transformers (attention) and state space models (SSM, e.g. Mamba, RNNs)

TITANS = an optimal MIRAS implementation that’s “halfway between” SSM & transformer with a CL memory module

let’s dive in!

research.google/blog/titans-...

#ICML2017 was also in Sydney and was an absolute blast

📍 Karlsruhe 🇩🇪

📅 Apply by Dec 31st

🔗 https://bit.ly/49NJsWJ

📍 Copenhagen 🇩🇰

📅 Apply by Jan 31st

🔗 https://candidate.hr-manager.net/ApplicationInit.aspx?cid=119&ProjectId=181837&DepartmentId=3439&MediaId=5

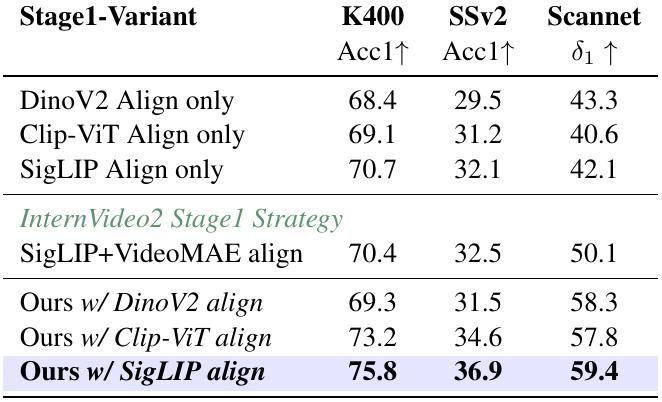

Chenting Wang, Yuhan Zhu, Yicheng Xu ... Limin Wang

arxiv.org/abs/2512.01342

Trending on www.scholar-inbox.com

Outperforms several SotA methods in zero-shot classification, retrieval, robustness, and compositional tasks!

arxiv.org/abs/2511.23170

World models for evaluating autonomous driving: GAIA3 released! End-to-end driving model & loads of insights!

Thanks for visiting & spending the day talking to researchers.

Our new work uses diffusion models to decompose neural information down to individual stimuli & features!

🎯Spotlight at #NeurIPS2025 🌟📄

arxiv.org/abs/2505.11309

We asked: Can shallow networks be as expressive as deep ones? Inspired by biological vision, we introduce higher-order convolutions that capture complex image patterns standard CNNs miss.

🧵👇

Am I confused? Yes!

Am I hyped? Also yesss!

Congrats @toniwuest.bsky.social et al. for a great paper and for convincing me to look into symbolic reasoning. It makes a lot of sense, especially within VLMs!

We introduce Vision-Language Programs (VLP), a neuro-symbolic framework that combines the perceptual power of VLMs with program synthesis for robust visual reasoning.

I think we care about blind review only because our publishing system is poorly designed and needs change in the modern era anyway.

As far as I can tell it's between 5.1 and 10.2 seconds, depending on which end of the 2019 IEA Netflix energy usage estimate you use

simonwillison.net/2025/Nov/29/...

- Nothing will ever be blind anymore because there's enough data in the wild to train a reviewer de-anonymizer

- What about fine-tuning a seq2seq model conditioned

100% reliability doesn't exist! Ask Boeing engineers.

Btw, I understand the anger & fear. Now, pls disclose your other similar stances, e.g. measures & actions after the Boeing ✈️ incidents.

my student just made this joke:

ICLR = I Can Locate Reviewers 😅

1/2