https://www.ehkennedy.com/

interested in causality, machine learning, nonparametrics, public policy, etc

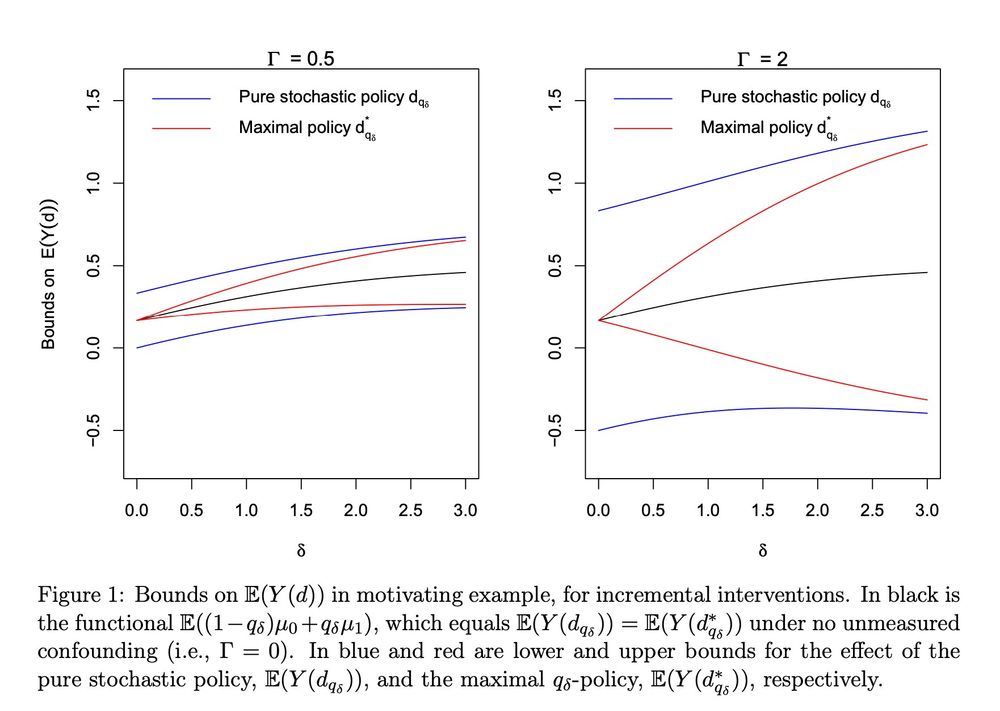

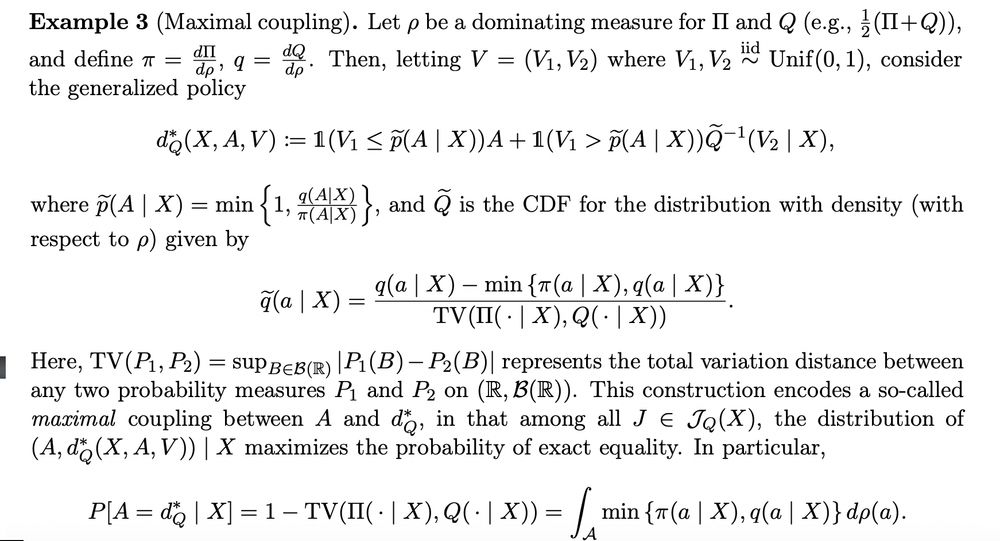

Led by amazing postdoc Alex Levis: www.awlevis.com/about/

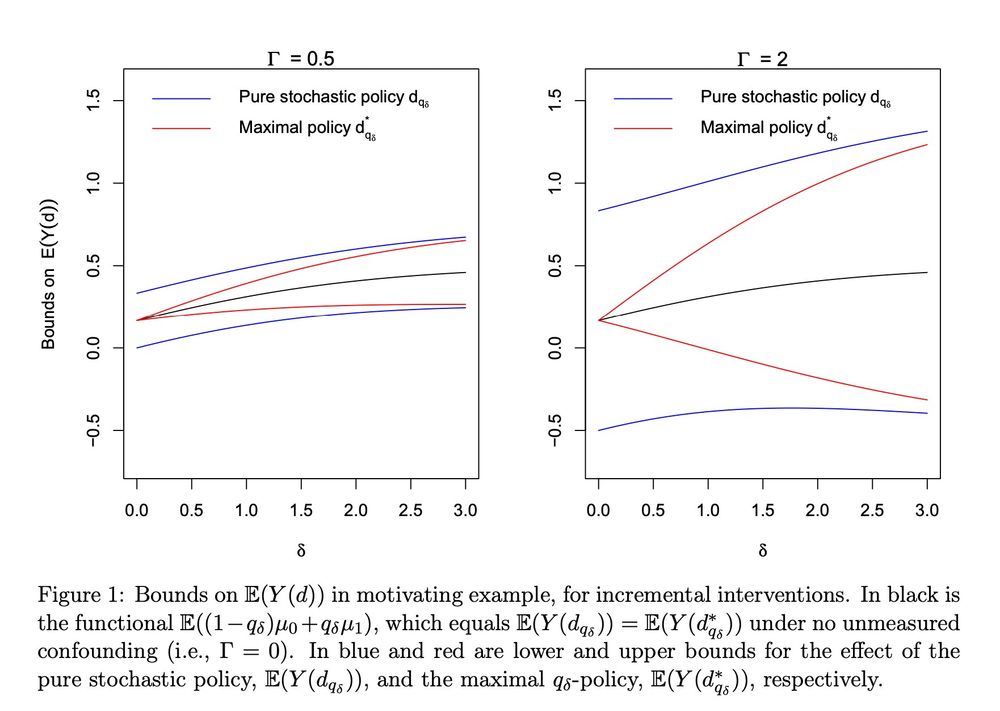

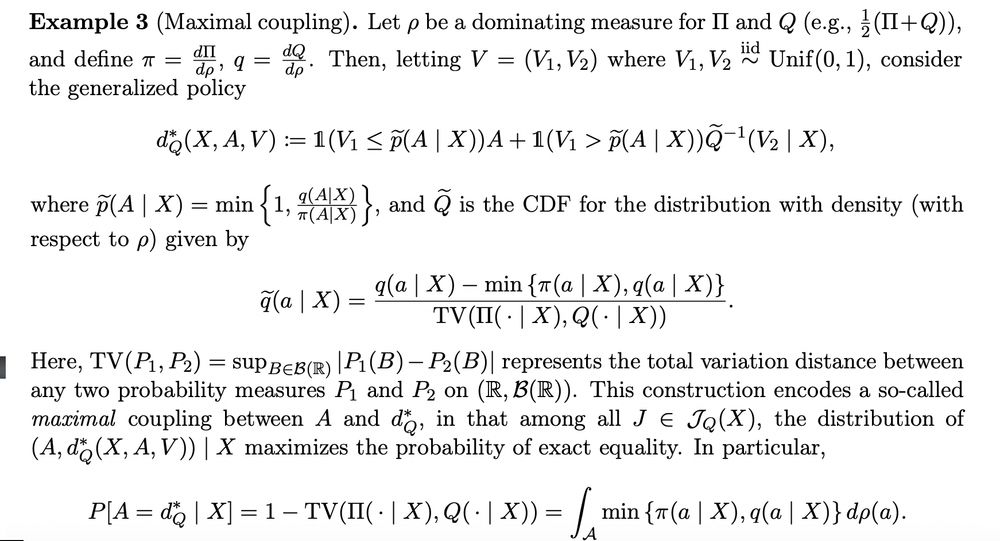

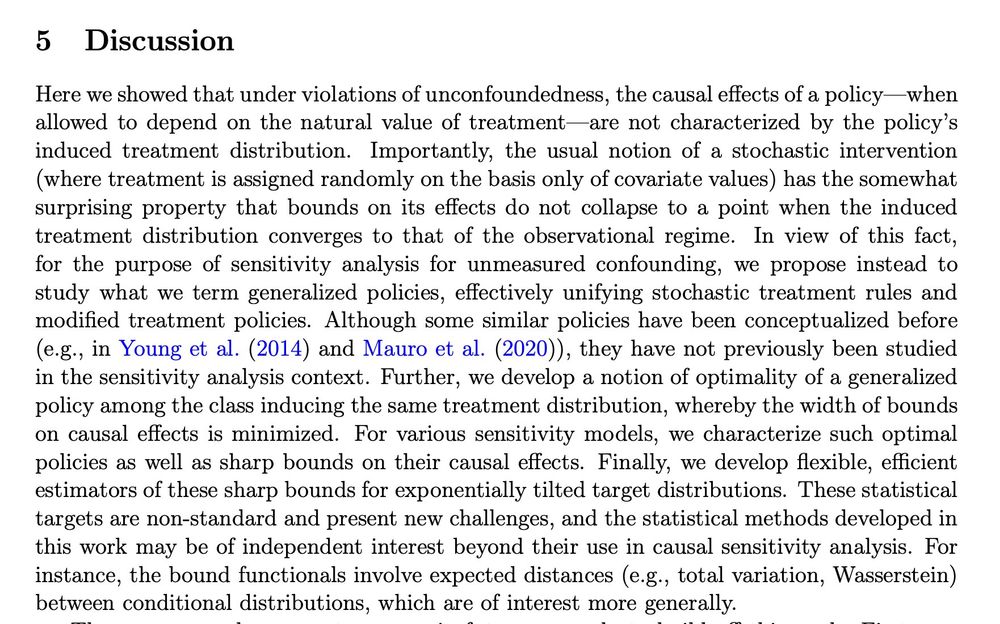

We show causal effects of new "soft" interventions are less sensitive to unmeasured confounding

& study which effects are *least* sensitive to confounding -> makes new connections to optimal transport

"Not reading to the end of Le Cam's papers became not uncommon in later years. His ideas have been regularly rediscovered."

At least they're in good company.

A short thread:

It amazes me how many crucial ideas underlying now-popular semiparametrics (aka doubly robust parameter/functional estimation / TMLE / double/debiased/orthogonal ML etc etc) were first proposed many decades ago.

I think this is widely under-appreciated!

But the reality is that observational methods are used everyday to answer pressing causal questions that cannot be studied in randomized trials."

- Jamie Robins, 2002

tinyurl.com/4yuxfxes

tinyurl.com/zncp39mr

We tackle the challenge of comparing multiple treatments when some subjects have zero prob. of receiving certain treatments. Eg, provider profiling: comparing hospitals (the “treatments”) for patient outcomes. Positivity violations are everywhere.

My favourite: "Find the easiest problem you can't solve. The more embarrassing, the better!"

Slides: drive.google.com/file/d/15VaT...

TCS For all: sigact.org/tcsforall/

Why not both?

Here we propose a novel hybrid smooth+agnostic model, give minimax rates, & new optimal methods

arxiv.org/pdf/2405.08525

-> fast rates under weaker conditions

📖 ccanonne.github.io/survey-topic... [Latest draft+exercise solns, free]

📗 nowpublishers.com/article/Deta... [Official pub]

📝 github.com/ccanonne/sur... [LaTeX source]

- Brad Efron #statsquotes

(shameless repost of my pinned tweet)

1) Probability/Inference/Regression

2) Causal Inference

3) Model based inference (MLE/Bayes)

4) Machine Learning

arxiv.org/abs/2409.11967

i.e., soft interventions on cts treatments like dose, duration, frequency

it turns out exponential tilts preserve all nice properties of incremental effects with binary trt (arxiv.org/abs/1704.00211)
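
A quick numerical sanity check of the binary-treatment connection, written as a minimal sketch and not taken from either linked paper's code. It assumes the tilt has the standard form proportional to `exp(delta * a)` times the original treatment probability, and that the incremental shift multiplies the odds of treatment by a constant. The function names are hypothetical.

```python
import math

def incremental_shift(pi, odds_multiplier):
    """Shifted propensity score whose odds are odds_multiplier times the odds of pi."""
    return odds_multiplier * pi / (odds_multiplier * pi + 1.0 - pi)

def exponential_tilt_binary(pi, delta):
    """Tilted P(A=1 | X): reweight each a in {0, 1} by exp(delta * a), then renormalize."""
    w1 = math.exp(delta * 1) * pi          # weight on a = 1
    w0 = math.exp(delta * 0) * (1.0 - pi)  # weight on a = 0
    return w1 / (w1 + w0)

def odds(p):
    return p / (1.0 - p)

# Assumed example values: baseline propensity 0.3, tilt parameter 0.7.
pi, delta = 0.3, 0.7
tilted = exponential_tilt_binary(pi, delta)
shifted = incremental_shift(pi, math.exp(delta))
```

Under these assumptions `tilted` and `shifted` agree, and the odds of treatment are multiplied by `exp(delta)`, so for binary trt the tilt is just a reparametrized incremental intervention.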

www.awlevis.com/about/

arxiv.org/pdf/2301.121...

We give new methods for estimating bounds on avg treatment effects - trt is confounded, but an instrument is available. Super common in practice

The bounds are non-smooth, so std efficiency theory isn't applicable

Lots of useful nuggets throughout!

arxiv.org/abs/2305.04116

We study if one can improve popular semiparametric / doubly robust / DML causal effect estimators - w/o adding structural assumptions...

Short answer: nope!

Turns out these methods are minimax optimal here

www.ehkennedy.com/uploads/5/8/...
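
For readers who have not seen one, here is a minimal sketch of a standard doubly robust (AIPW) estimator of the average treatment effect. It is an illustration only, not the method or code from the linked paper. The simulated data-generating process, the function names, and the use of known (oracle) nuisance functions are all assumptions of this example; in practice the nuisance functions would be estimated, typically with sample splitting.

```python
import random

def aipw_ate(data, mu0, mu1, pi):
    """AIPW estimate of E[Y(1) - Y(0)] from (x, a, y) triples.

    mu0, mu1: outcome regressions E[Y | X=x, A=0] and E[Y | X=x, A=1].
    pi: propensity score P(A=1 | X=x).
    """
    total = 0.0
    for x, a, y in data:
        p = pi(x)
        total += (mu1(x) - mu0(x)
                  + a * (y - mu1(x)) / p
                  - (1 - a) * (y - mu0(x)) / (1.0 - p))
    return total / len(data)

def simulate(n, seed=0):
    """Hypothetical data: true ATE is 2, treatment is confounded by x."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.random()
        a = 1 if rng.random() < 0.2 + 0.6 * x else 0
        y = 2.0 * a + x + rng.gauss(0.0, 1.0)
        data.append((x, a, y))
    return data

data = simulate(20000)
true_pi = lambda x: 0.2 + 0.6 * x

# Both nuisance functions correct.
est_oracle = aipw_ate(data, mu0=lambda x: x, mu1=lambda x: 2.0 + x, pi=true_pi)

# Outcome model deliberately wrong (identically zero), propensity score correct.
est_bad_outcome = aipw_ate(data, mu0=lambda x: 0.0, mu1=lambda x: 0.0, pi=true_pi)
```

Both estimates should land near the true effect of 2 in this simulation. The second one, noisier, shows the "doubly robust" part: a wrong outcome model is tolerated when the propensity score is right.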
