sebastiandziadzio.com

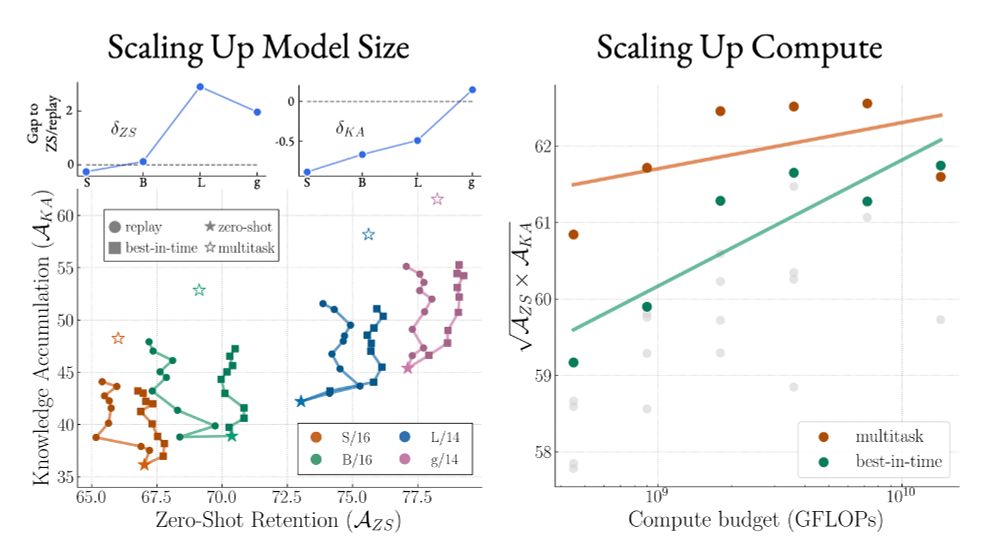

🚀 Larger models benefit more from temporal merging than sequential finetuning.

🚀 Larger compute budgets allow temporal merging to match (and surpass!) multitask performance.

🚀 Best-in-TIME scales effectively across longer task sequences (50, 100).

In the temporal setting, complex merging techniques like TIES or Breadcrumbs offer only marginal gains compared to simpler ones like weight averaging.
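
To make "simpler ones like weight averaging" concrete, here is a minimal sketch of uniform weight averaging over finetuned checkpoints. It assumes each checkpoint is a plain dict from parameter name to a flat list of floats; the `Weights` alias and `average_weights` helper are hypothetical names for illustration, not code from the paper.

```python
from typing import Dict, List

# Assumed representation: parameter name -> flattened parameter values.
Weights = Dict[str, List[float]]


def average_weights(checkpoints: List[Weights]) -> Weights:
    """Uniformly average checkpoints, parameter by parameter."""
    if not checkpoints:
        raise ValueError("need at least one checkpoint")
    n = len(checkpoints)
    merged: Weights = {}
    for name in checkpoints[0]:
        # Group the i-th value of this parameter across all checkpoints.
        columns = zip(*(ckpt[name] for ckpt in checkpoints))
        merged[name] = [sum(values) / n for values in columns]
    return merged


# Toy checkpoints standing in for two task-specific finetunes.
task_a = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
task_b = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}
merged = average_weights([task_a, task_b])
```

Techniques like TIES add steps on top of this (for example, pruning and sign resolution of task vectors), which is the extra complexity the post says buys little in the temporal setting.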

One strategy stands out: using an exponential moving average for both initialization and deployment strikes the best balance between knowledge accumulation and zero-shot retention. We call this approach ✨Best-in-TIME✨.
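
One way to read "exponential moving average for both initialization and deployment" is sketched below. This is an interpretation under stated assumptions, not the authors' implementation: the `decay` value, the `finetune` callable, and the toy task format are all hypothetical, and the paper may define the update differently.

```python
from typing import Callable, Dict, List

Weights = Dict[str, List[float]]  # assumed: parameter name -> flat values


def ema_update(ema: Weights, new: Weights, decay: float) -> Weights:
    """Blend the running average with newly finetuned weights."""
    return {
        name: [decay * e + (1.0 - decay) * w for e, w in zip(ema[name], new[name])]
        for name in ema
    }


def run_temporal_ema(
    pretrained: Weights,
    tasks: List[float],
    finetune: Callable[[Weights, float], Weights],
    decay: float = 0.5,  # hypothetical value, chosen only for the toy example
) -> List[Weights]:
    """Return the model deployed after each task in the sequence."""
    ema = pretrained
    deployed = []
    for task in tasks:
        finetuned = finetune(ema, task)          # initialization: start from the EMA
        ema = ema_update(ema, finetuned, decay)  # fold the new expert into the EMA
        deployed.append(ema)                     # deployment: serve the EMA
    return deployed


def toy_finetune(start: Weights, target: float) -> Weights:
    """Stand-in for training: move every weight halfway toward a target."""
    return {n: [v + 0.5 * (target - v) for v in vals] for n, vals in start.items()}


deployed = run_temporal_ema({"w": [0.0]}, tasks=[4.0, 8.0], finetune=toy_finetune)
```

In this toy run the deployed weight moves toward each new task while staying anchored to earlier states, which is the accumulation-versus-retention trade-off the post describes.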

Standard merging struggles with the temporal dynamics. Replay and weighting schemes, which factor in the sequential nature of the problem, help (but only to a point).

📌 Accounting for time is essential.

📌 Initialization and deployment choices are crucial.

📌 The choice of merging technique doesn’t matter much.

Enter TIME (Temporal Integration of Model Expertise), a unifying approach that considers:

1️⃣ Initialization

2️⃣ Deployment

3️⃣ Merging Techniques

We study these three axes on the large FoMo-in-Flux benchmark.

The firehose is relentless, so over time my strategy became: if something looks interesting, skim it in the moment and save it to Zotero; otherwise, close the tab. There is only the present. Important stuff will come back.
