www.nature.com/articles/s41...

openreview.net/pdf?id=Vp2OA...

proceedings.mlr.press/v235/brenner...

www.nature.com/articles/s41...

(6/6)

(5/6)

#AI

(4/6)

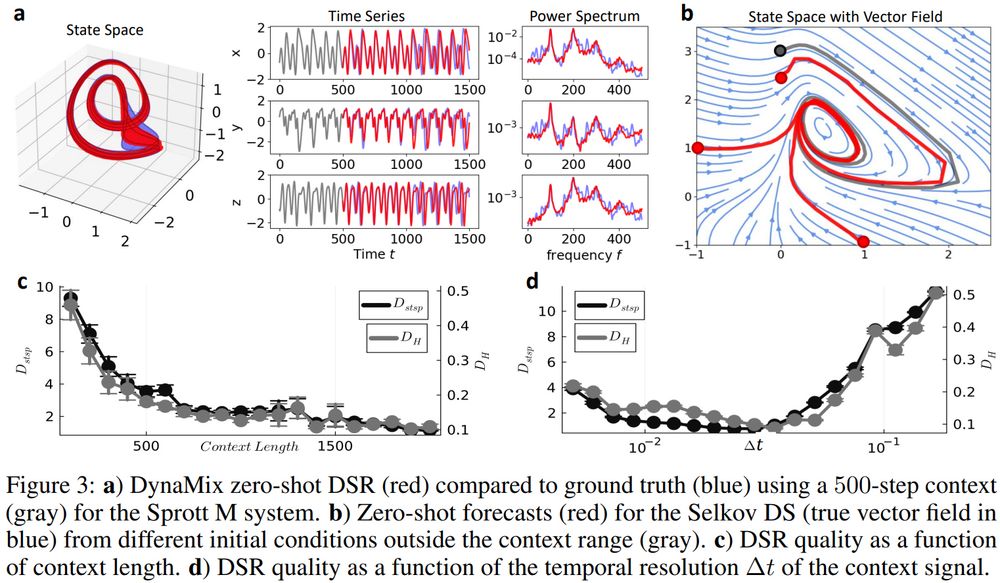

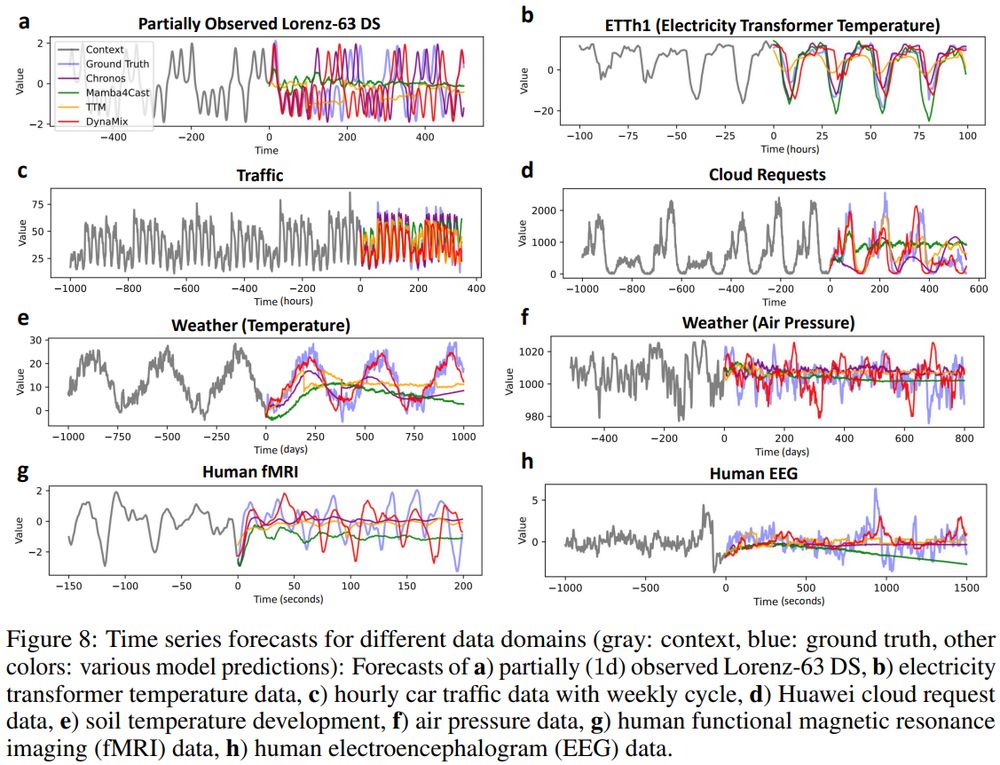

This is surprising because DynaMix's training corpus consists *solely* of simulated limit cycles & chaotic systems, with no empirical data at all!

(3/6)

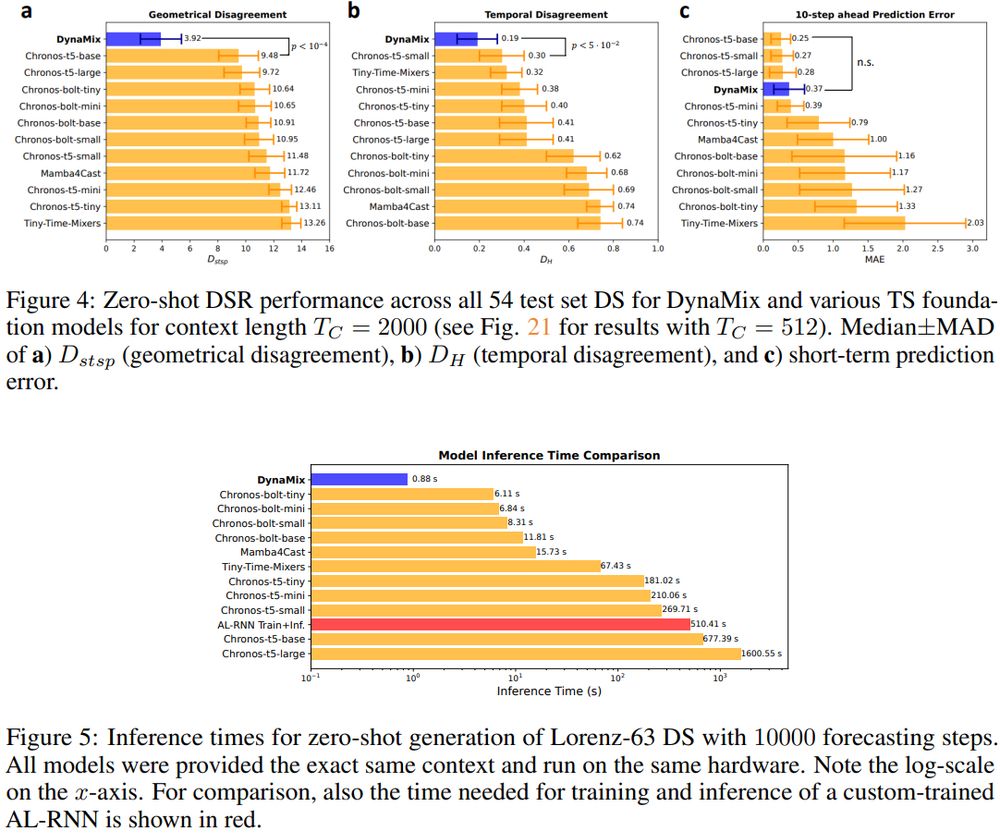

It does so with only 0.1% of the parameters of Chronos & 10x faster inference than the closest competitor.

(2/6)
