@huggingface page: https://huggingface.co/papers/2502.12996

congrats to my collaborators @SatyenKale who led that work and Yani Donchev

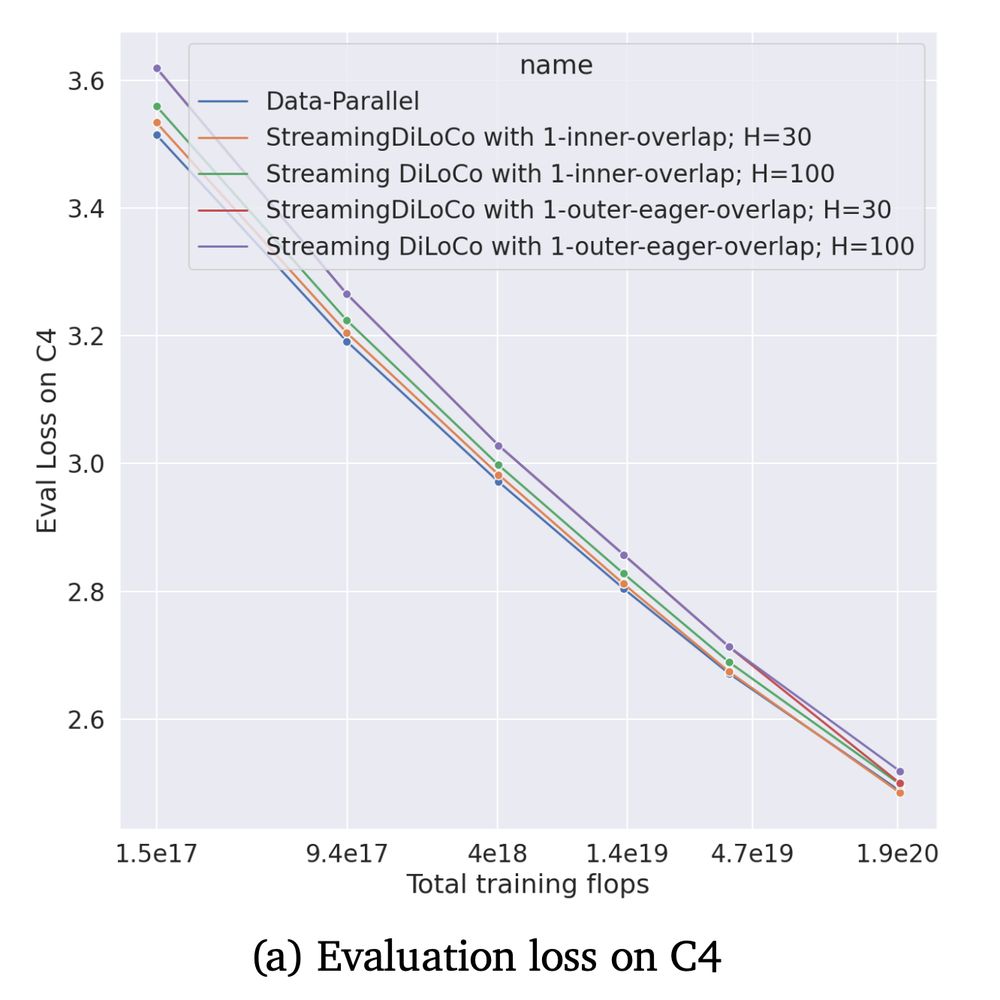

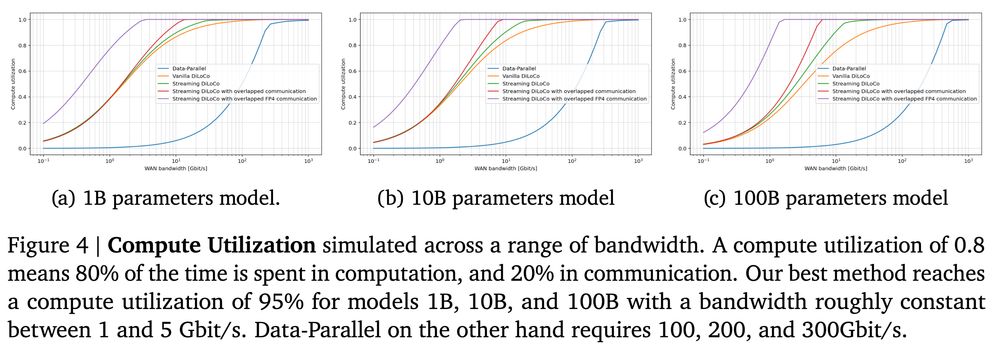

DP requires 471 Gbits/s

Streaming DiLoCo with inner com. overlap: 1.4 Gbits/s

Streaming DiLoCo with eager outer com. overlap: 400 Mbits/s, more than 1000x reduction

400 Mbits/s is consumer-grade bandwidth FYI
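
A quick sanity check of those ratios, as a minimal sketch. The three bandwidth figures are the ones quoted above; the dictionary keys and the `reduction_factor` helper are just illustrative names.

```python
# Bandwidth figures quoted in the thread, all converted to Gbit/s.
bandwidth_gbps = {
    "data_parallel": 471.0,
    "streaming_diloco_inner_overlap": 1.4,
    "streaming_diloco_eager_outer_overlap": 0.4,  # 400 Mbit/s
}


def reduction_factor(baseline_gbps: float, method_gbps: float) -> float:
    """How many times less bandwidth a method needs than the baseline."""
    return baseline_gbps / method_gbps


baseline = bandwidth_gbps["data_parallel"]
for name, gbps in bandwidth_gbps.items():
    factor = reduction_factor(baseline, gbps)
    print(f"{name}: {gbps} Gbit/s ({factor:.1f}x less than DP)")
```

The eager variant comes out a bit under 1,200x below data-parallel, which matches the "more than 1000x" claim.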

the update is made of the average of the *local up-to-date* update of the self replica and the *remote stale* update from the other replicas
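
One way to read that sentence in code, as a rough sketch rather than the paper's exact algorithm. It assumes each replica already holds last round's (stale) updates from everyone and swaps in its own fresh one before averaging; `eager_outer_update` and its arguments are hypothetical names.

```python
from typing import List

Vector = List[float]


def eager_outer_update(
    replica: int, fresh_local: Vector, stale_updates: List[Vector]
) -> Vector:
    """Average this replica's fresh update with the others' stale updates.

    stale_updates[j] is the previous-round update of replica j. The entry
    for `replica` itself is ignored and replaced by `fresh_local`.
    """
    num_replicas = len(stale_updates)
    mixed = [
        fresh_local if j == replica else stale_updates[j]
        for j in range(num_replicas)
    ]
    # Element-wise mean across replicas.
    return [sum(column) / num_replicas for column in zip(*mixed)]


# Two replicas: replica 0 uses its fresh update and replica 1's stale one.
print(eager_outer_update(0, [4.0, 0.0], [[1.0, 1.0], [2.0, 2.0]]))
```

The replica never waits for the others' current-round updates, which is what lets the communication hide behind the next round of compute.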

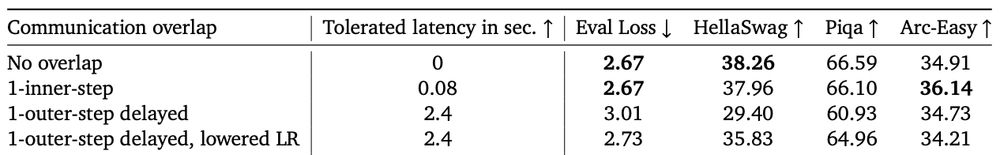

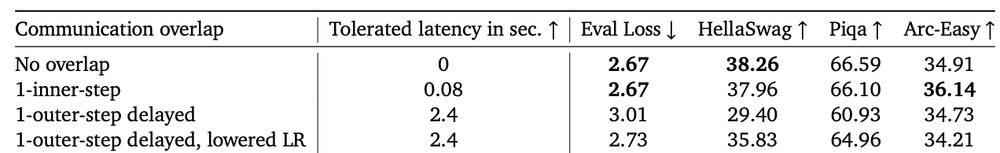

we can recover a bit by lowering the outer learning rate by 4x, but this is still unsatisfying

we first try a naive "delayed" version

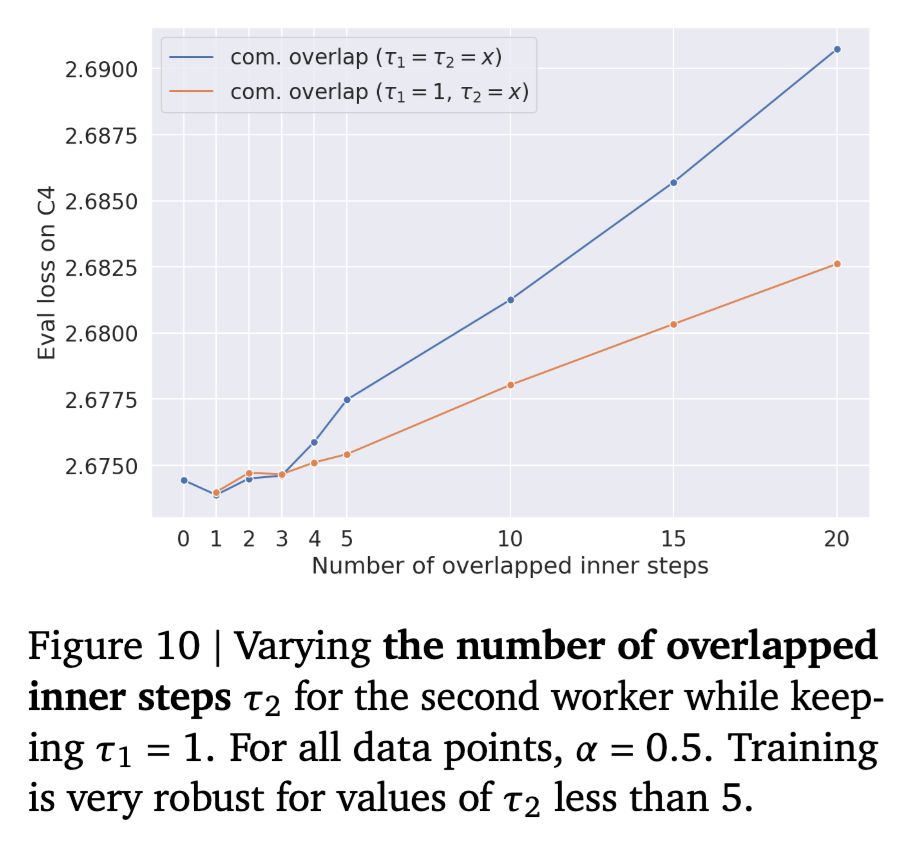

we can safely overlap up to 5 steps, but more than that and performance drops rapidly!

https://x.com/Ar_Douillard/status/1885292127678021751
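
To make "overlapping by a few steps" concrete, here is a toy sketch, assuming a single scalar parameter and made-up updates. Each update is launched at one step and only applied `delay` steps later, while compute keeps going in between. `run_delayed` is a hypothetical helper, not code from the paper.

```python
from collections import deque
from typing import List


def run_delayed(updates: List[float], delay: int) -> List[float]:
    """Apply each update `delay` steps after it was produced.

    Returns the parameter value observed at the end of every step.
    """
    in_flight = deque()  # (arrival_step, update) pairs, oldest first
    param = 0.0
    history = []
    for step, update in enumerate(updates):
        # Launch communication for this step's update; it lands later.
        in_flight.append((step + delay, update))
        # Apply everything whose communication has finished by now.
        while in_flight and in_flight[0][0] <= step:
            _, arrived = in_flight.popleft()
            param += arrived
        history.append(param)
    return history


print(run_delayed([1.0, 1.0, 1.0, 1.0], delay=0))
print(run_delayed([1.0, 1.0, 1.0, 1.0], delay=2))
```

With `delay=0` every update is applied immediately (the blocking case); with `delay=2` the parameter lags two steps behind, which is the staleness the model has to tolerate.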

however, when syncing, this is a blocking operation!

https://x.com/Ar_Douillard/status/1724732329740976187

We finished this last spring, and it was one of the coolest projects I've been on.

The future will be distributed 🫡

https://arxiv.org/abs/2501.18512v1

So so many ideas we try just work on top of DiLoCo. And it can scale too! Look at the cracked folks of @PrimeIntellect who scaled their version to 10B

Can we break away from the lockstep synchronization? yes!

Workers can have a few delay steps, and it just works, w/o any special handling.

Over how many steps can you safely overlap communication?

At least 5 without any significant loss of perf! That's a massive increase of tolerated latency.

It has only 35B activated params, but you need to sync 671B params in total! Hard to do across continents with data-parallel...

However, with our method? ❤️🔥
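
To get a feel for the sizes involved, here is a back-of-the-envelope sketch. It assumes 16 bits per parameter and a single naive transfer of all 671B parameters, ignoring all-reduce overheads; both are assumptions for illustration, not numbers from the thread. `transfer_seconds` is a hypothetical helper.

```python
def transfer_seconds(
    num_params: float, bits_per_param: int, bandwidth_bits_per_s: float
) -> float:
    """Time to push every parameter once through a link of given bandwidth."""
    return num_params * bits_per_param / bandwidth_bits_per_s


TOTAL_PARAMS = 671e9  # total parameter count quoted above

# Assumed 16-bit parameters over the two bandwidths mentioned in the thread.
for label, bandwidth in [("471 Gbit/s", 471e9), ("400 Mbit/s", 400e6)]:
    seconds = transfer_seconds(TOTAL_PARAMS, 16, bandwidth)
    print(f"{label}: {seconds:,.0f} s ({seconds / 3600:.2f} h)")
```

Under these assumptions a full transfer takes seconds on a datacenter-class link but hours on a consumer-grade one, so syncing everything at every step is out of reach across continents, while syncing rarely and overlapping it with compute is not.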

but we need to scale our pretraining more, this is still a relevant axis!

DeepSeek-R1 also notes it:

Abolish the tyranny of requiring huge bandwidth! ✊

As @m_ryabinin noted in Swarm Parallelism: larger networks spend more time on computation, O(n^3), than on communication, O(n^2).

We have much more time to sync at larger scales!

It estimates how much time is spent in the costly com. between non-colocated devices and how much is spent crunching flops.
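
The core quantity such an estimate boils down to can be sketched as below. This is a simplified model and an assumption on my part, not the actual simulator: communication that is overlapped with compute is free, and only the exposed remainder stalls the step. `compute_utilization` is a hypothetical name.

```python
def compute_utilization(
    compute_s: float, comm_s: float, overlap_s: float = 0.0
) -> float:
    """Fraction of wall-clock time spent crunching flops.

    compute_s: time spent computing per sync period
    comm_s:    time the cross-site communication takes
    overlap_s: how much of that communication is hidden behind compute
    """
    exposed_comm_s = max(0.0, comm_s - overlap_s)
    return compute_s / (compute_s + exposed_comm_s)


print(compute_utilization(1.0, 1.0))                 # fully blocking sync
print(compute_utilization(1.0, 1.0, overlap_s=1.0))  # fully hidden sync
```

In this toy model, a blocking sync as long as the compute halves utilization, while fully overlapping it brings utilization back to 1.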

It's even better when overtraining with a larger token budget. Remember the bitter lesson? Just put more data and flops in your model, Streaming DiLoCo enables that.

Quantizing your update to 4 bits is enough -- you can barely see any change in performance.

And that's the free dessert 🍦
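
For intuition, here is a minimal sketch of 4-bit quantization of an update. It uses a plain uniform integer quantizer with one shared scale, which is an assumption for illustration and not necessarily the 4-bit format used in the paper; `quantize_4bit` and `dequantize_4bit` are hypothetical names.

```python
from typing import List, Tuple


def quantize_4bit(values: List[float]) -> Tuple[List[int], float]:
    """Map floats to integer codes in [-7, 7] plus one shared scale.

    Codes in [-7, 7] fit in a signed 4-bit integer.
    """
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    codes = [max(-7, min(7, round(v / scale))) for v in values]
    return codes, scale


def dequantize_4bit(codes: List[int], scale: float) -> List[float]:
    """Recover approximate floats from the 4-bit codes."""
    return [code * scale for code in codes]


update = [7.0, -3.0, 0.4]
codes, scale = quantize_4bit(update)
restored = dequantize_4bit(codes, scale)
print(codes, scale, restored)
```

Each value is sent as 4 bits instead of 16 or 32, and the reconstruction error per entry stays within half a quantization step.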
