Masked language models like RoBERTa, originally designed for fill-in-the-blank tasks, can be repurposed into fully generative engines by interpreting variable-rate masking as a discrete diffusion process.
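
A minimal sketch of the idea, under toy assumptions. In the forward (noising) process, every token is masked independently at a rate t drawn uniformly from [0, 1). Generation starts from an all-mask sequence and reveals a share of positions at each step. `predict_tokens` is a hypothetical stand-in for the RoBERTa forward pass, not a real library call.

```python
import random

MASK = "<mask>"

def corrupt(tokens, rng):
    """Forward (noising) process: mask each token independently with rate t ~ U(0, 1)."""
    t = rng.random()
    noisy = [MASK if rng.random() < t else tok for tok in tokens]
    return noisy, t

def predict_tokens(seq):
    """Hypothetical denoiser: a real masked LM would predict a token for every masked slot.
    This placeholder just fills masked positions with a fixed word."""
    return ["word" if tok == MASK else tok for tok in seq]

def generate(length, steps, rng):
    """Reverse process: start fully masked and unmask a share of positions per step."""
    seq = [MASK] * length
    for step in range(steps):
        masked = [i for i, tok in enumerate(seq) if tok == MASK]
        if not masked:
            break
        # Reveal enough positions that the sequence is fully unmasked after `steps` steps.
        k = -(-len(masked) // (steps - step))  # ceiling division
        proposal = predict_tokens(seq)
        for i in rng.sample(masked, k):
            seq[i] = proposal[i]
    return seq

rng = random.Random(0)
noisy, t = corrupt(["the", "cat", "sat", "on", "the", "mat"], rng)
print(round(t, 3), noisy)
print(generate(length=6, steps=3, rng=rng))
```

This sketch unmasks positions in random order. Other schedules are also used, such as revealing the model's most confident predictions first.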

This repository is a curated collection of the most influential papers, code implementations, benchmarks, and resources on post-training methodologies for Large Language Models (LLMs).

github.com/mbzuai-oryx/...

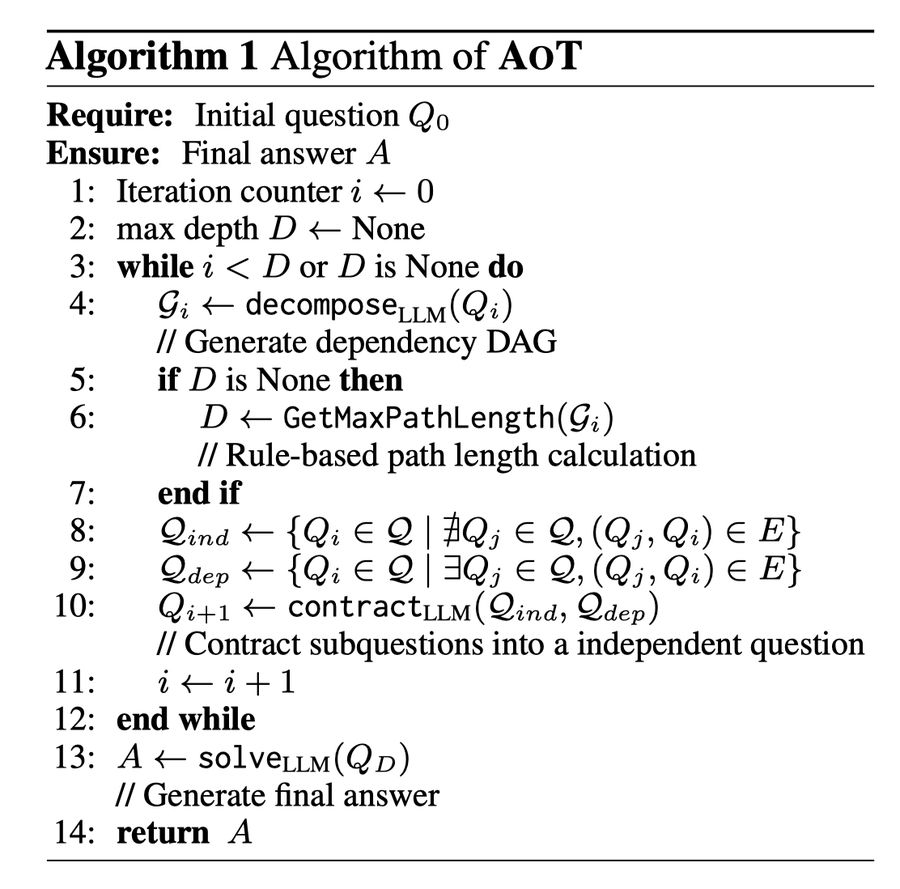

For each reasoning step, it:

1. Decomposes the question into a directed acyclic graph (DAG) of subquestions

2. Contracts the subquestions into a new, simpler question

3. Iterates until it reaches an atomic question
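
The three steps can be sketched as a loop over a toy DAG. This is a minimal illustration that relies on several assumptions not stated in the post:

- The DAG is a mapping from each subquestion id to its text and to the ids it depends on.
- Contraction substitutes solved answers into `{id}` placeholders.
- The graph has a single final question.
- `solve` is a hypothetical stand-in for an LLM call. Here it only evaluates arithmetic such as `2 + 3`.

```python
def solve(question):
    """Hypothetical solver for a self-contained subquestion.
    This toy version only evaluates 'a op b' with integer operands."""
    a, op, b = question.split()
    a, b = int(a), int(b)
    return {"+": a + b, "*": a * b, "-": a - b}[op]

def contract(dag):
    """One reasoning step: answer the independent subquestions, then fold their
    answers into the questions that depend on them, yielding a smaller DAG."""
    independent = {q for q, (_, deps) in dag.items() if not deps}
    if not independent:
        raise ValueError("no independent subquestion: the graph has a cycle")
    answers = {q: solve(dag[q][0]) for q in independent}
    new_dag = {}
    for q, (text, deps) in dag.items():
        if q in independent:
            continue
        for d in deps & independent:
            text = text.replace("{" + d + "}", str(answers[d]))
        new_dag[q] = (text, deps - independent)
    return new_dag

def atomic_reasoning(dag):
    """Contract repeatedly until one atomic question remains, then answer it."""
    while len(dag) > 1:
        dag = contract(dag)
    (text, deps), = dag.values()
    assert not deps, "the last question should have no remaining dependencies"
    return solve(text)

dag = {
    "q1": ("2 + 3", set()),
    "q2": ("4 * 5", set()),
    "q3": ("{q1} + {q2}", {"q1", "q2"}),
    "q4": ("{q3} * 2", {"q3"}),
}
print(atomic_reasoning(dag))
```

Each call to `contract` removes every subquestion that no longer depends on anything. The loop therefore terminates on any acyclic input with a single final question.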

RGAR enhances medical question answering by combining factual and conceptual knowledge from dual sources, outperforming existing systems.

Read more: https://arxiv.org/html/2502.13361v1

Code: github.com/scitix/MEAP

The answer appears to be yes: using three agents with a structured review process reduced hallucination scores by 96% across 310 test cases. arxiv.org/pdf/2501.13946

youtubetranscriptoptimizer.com/blog/05_the_...

Paper: Towards System 2 Reasoning in LLMs: Learning How to Think With Meta Chain-of-Thought (arxiv.org/abs/2501.04682)

Post on X: x.com/rm_rafailov/...

Sparse autoencoders (SAEs) are interpreter models that help us understand how language models process information internally by decomposing neural activations into interpretable features.
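
A minimal SAE forward pass, written in plain Python so it runs without dependencies. The weights `W_enc`, `b_enc`, `W_dec`, and `b_dec` are hand-picked toy values chosen for illustration, not trained parameters. The sketch proceeds in three steps:

1. Encode a 2-dimensional activation into a wider, non-negative feature vector with a ReLU.
2. Decode that feature vector back into activation space.
3. Score the result with squared reconstruction error plus an L1 sparsity penalty, a common SAE training objective.

```python
def relu(x):
    return max(0.0, x)

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def sae_forward(x, W_enc, b_enc, W_dec, b_dec):
    """Encode activation x into sparse features f, then reconstruct x_hat from f."""
    f = [relu(z + b) for z, b in zip(matvec(W_enc, x), b_enc)]
    x_hat = [y + b for y, b in zip(matvec(W_dec, f), b_dec)]
    return f, x_hat

def sae_loss(x, f, x_hat, l1_coeff):
    """Squared reconstruction error plus an L1 penalty that pushes most features to zero."""
    recon = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    sparsity = sum(abs(v) for v in f)
    return recon + l1_coeff * sparsity

# Toy overcomplete dictionary: 4 features for 2-dimensional activations.
W_enc = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
b_enc = [0.0, 0.0, 0.0, 0.0]
W_dec = [[1.0, 0.0, -1.0, 0.0], [0.0, 1.0, 0.0, -1.0]]  # columns are feature directions
b_dec = [0.0, 0.0]

x = [3.0, -2.0]
f, x_hat = sae_forward(x, W_enc, b_enc, W_dec, b_dec)
print(f, x_hat, sae_loss(x, f, x_hat, l1_coeff=0.1))
```

With these toy weights, only two of the four features fire for `x`, and the reconstruction is exact. Each active feature is a direction in activation space that can be inspected on its own.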

Challenges the conventional wisdom by showing that hierarchical layers in HNSW are unnecessary for high-dimensional data.

📝 arxiv.org/abs/2412.01940

👨🏽💻 github.com/BlaiseMuhirw...
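
To make "no hierarchy" concrete, here is a sketch of search on a single flat proximity graph. It assumes a brute-force k-nearest-neighbor graph and best-first search with a result list of size `ef` from a fixed entry point. This is a simplified stand-in for HNSW's bottom layer, not the paper's code. Real implementations use more careful neighbor selection and a smarter choice of entry point.

```python
import heapq
import math
import random

def build_knn_graph(points, k):
    """Connect every point to its k nearest neighbors (brute force, O(n^2 log n))."""
    graph = {}
    for i, p in enumerate(points):
        others = sorted((j for j in range(len(points)) if j != i),
                        key=lambda j: math.dist(p, points[j]))
        graph[i] = others[:k]
    return graph

def beam_search(points, graph, query, entry, ef):
    """Best-first search over one flat graph layer, keeping the ef closest points seen.
    Returns (distance, index) pairs sorted by increasing distance."""
    visited = {entry}
    d0 = math.dist(query, points[entry])
    candidates = [(d0, entry)]   # min-heap of nodes still to expand
    best = [(-d0, entry)]        # max-heap (negated distances) of current results
    while candidates:
        d, node = heapq.heappop(candidates)
        if d > -best[0][0]:      # nearest candidate is farther than the worst result
            break
        for nb in graph[node]:
            if nb in visited:
                continue
            visited.add(nb)
            dn = math.dist(query, points[nb])
            if len(best) < ef or dn < -best[0][0]:
                heapq.heappush(candidates, (dn, nb))
                heapq.heappush(best, (-dn, nb))
                if len(best) > ef:
                    heapq.heappop(best)  # drop the current farthest result
    return sorted((-nd, n) for nd, n in best)

rng = random.Random(0)
points = [tuple(rng.random() for _ in range(16)) for _ in range(300)]
graph = build_knn_graph(points, k=10)
query = tuple(rng.random() for _ in range(16))
approx = beam_search(points, graph, query, entry=0, ef=20)
exact = min(range(len(points)), key=lambda i: math.dist(query, points[i]))
print(approx[0], exact)
```

Larger `k` and `ef` raise recall at the cost of more distance computations. With a complete graph and `ef` equal to the number of points, the search becomes exhaustive.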

Excited to share our #NeurIPS2024 paper on Amortized Decision-Aware Bayesian Experimental Design: arxiv.org/abs/2411.02064

@lacerbi.bsky.social @samikaski.bsky.social

Details below.