Ex RA at @MSFTResearch

Opinions are my own! Tweets about books & food.

https://research.ibm.com/people/deepak-vijaykeerthy

on.ft.com/4iov2gM

They prove that, for a fixed compute/data budget, fine-tuning LLMs with verifier-based (VB) methods built on RL or search is far superior to verifier-free (VF) approaches that distill or clone search traces.

Day 1: o1-mini. It makes 43 unique statements about Nicholas. 32 are completely false, 9 have major errors, and 2 are factually correct. Looks nice though.

nicholas.carlini.com/writing/2025...

simonwillison.net/2024/Dec/22/...

A blog post to celebrate and present it: francisbach.com/my-book-is-o...

This was the 3rd edition and it's so great to see the interest in ML for tables, as well as the diversity and quality of TRL research rising 🚀.

📄 openreview.net/forum?id=QKR...

NeurIPS: Sunday, East Exhibition Hall A, Safe Gen AI workshop

Interested in LLM-as-a-Judge?

Want to get the best judge for ranking your system?

Our new work is just for you:

"JuStRank: Benchmarking LLM Judges for System Ranking"

🕺💃

arxiv.org/abs/2412.09569

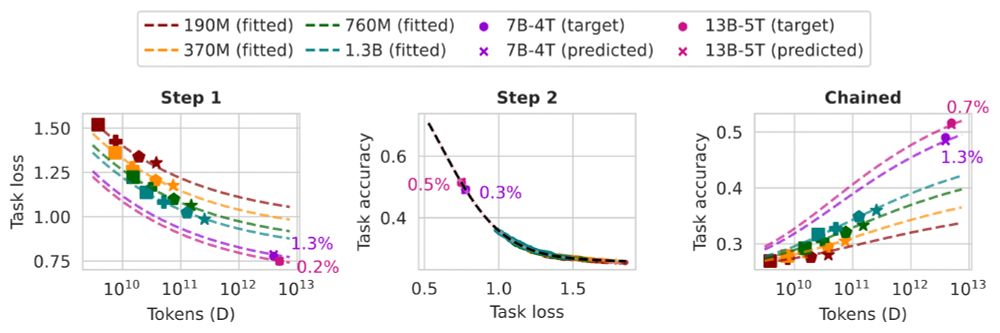

We develop task scaling laws and model ladders that predict the accuracy of OLMo 2 7B & 13B models on individual tasks to within 2 points of absolute error, at a cost of 1% of the compute used to pretrain them.

In collaboration w/ the amazing @841io.bsky.social, @teknology.bsky.social, Alireza Salemi and Hamed Zamani.

Thanks to Ana and Gustavo for hosting me so wonderfully! 🙏

🖇️ www.madelonhulsebos.com/assets/eth_c...

Bottom line:

lacerbi.github.io/blog/2024/vi...

Or just come to play with the interactive variational inference widget that took me way too long to code up.

However, it's criminally undocumented. I tried using it outside Google to fine-tune PaliGemma and SigLIP on GPUs, and wrote a tutorial: lb.eyer.be/a/bv_tuto.html

Procedural knowledge in pretraining drives LLM reasoning ⚙️🔢

🧵⬇️
