The largest publicly available corpus sourced exclusively from PDFs, containing about 3 trillion tokens across 475 million documents in 1733 languages.

- Long context

- 3T tokens from high-demand domains like legal and science.

- Substantially improves over the state of the art (SoTA)

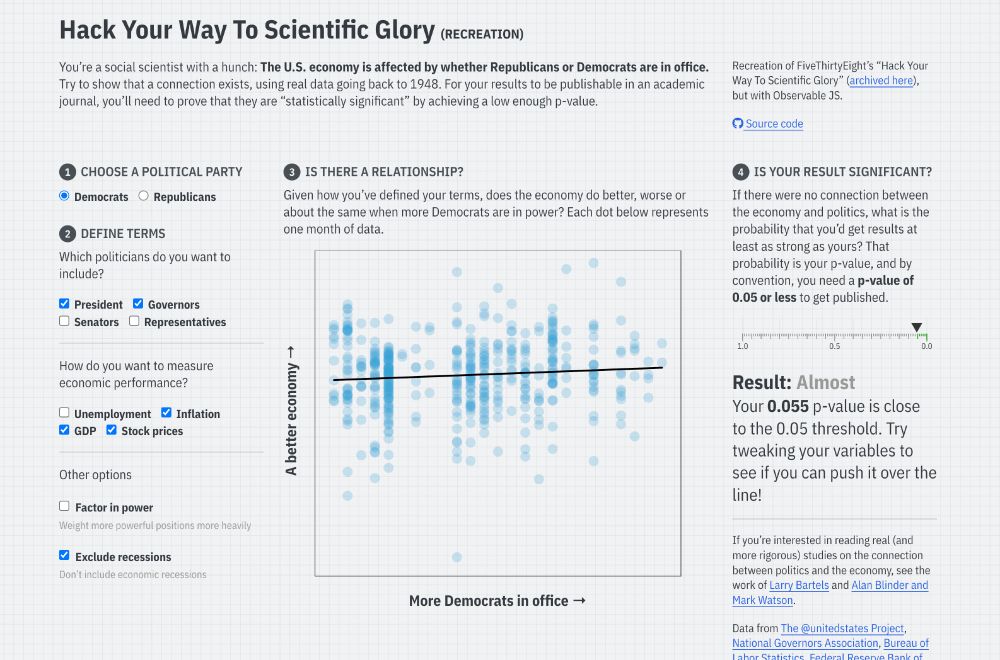

So I made my own with #rstats and Observable and #QuartoPub ! stats.andrewheiss.com/hack-your-way/

“To escape our two-party trap, we need a better system of electing people to Congress: proportional representation” write Jesse Wegman and Lee Drutman.

Instead, a pre-trained neural network is: the new TabPFN, as we just published in Nature 🎉

www.singularity.games/singularity-...

This algorithm will tell you "no" all the time. It has been shown to be up to 95% accurate in situations with a prevalence of 5% and *what is even better* even *more accurate* in rarer diseases
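
To make the joke concrete: a classifier that always answers "no" has accuracy equal to 1 − prevalence while detecting none of the actual cases. The minimal Python sketch below (the function name `always_no_metrics` and the population size are illustrative assumptions) computes accuracy, sensitivity, specificity and balanced accuracy for such a classifier.

```python
def always_no_metrics(prevalence: float, n: int = 100_000) -> dict:
    """Metrics for a hypothetical classifier that predicts "no" for every case.

    Assumes 0 < prevalence < 1 so that both classes are present.
    """
    positives = round(prevalence * n)  # people who actually have the disease
    negatives = n - positives
    # Every prediction is "no": there are no true or false positives at all.
    tp, fp = 0, 0
    tn, fn = negatives, positives
    accuracy = (tp + tn) / n
    sensitivity = tp / (tp + fn)  # share of real cases detected
    specificity = tn / (tn + fp)  # share of healthy people correctly cleared
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "balanced_accuracy": (sensitivity + specificity) / 2,
    }


for p in (0.05, 0.01, 0.001):
    m = always_no_metrics(p)
    print(f"prevalence={p}: accuracy={m['accuracy']:.3f}, "
          f"sensitivity={m['sensitivity']:.2f}, balanced={m['balanced_accuracy']:.2f}")
```

Accuracy climbs toward 1 as the disease gets rarer, while sensitivity stays at 0 and balanced accuracy stays at 0.5, no better than a coin flip.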

The difference between "no evidence that it works," and "evidence that it doesn't work," is

1. extremely confused linguistically

2. extremely important epistemically

3. surprisingly continuous in practice.

The importance of a null study result depends entirely on the power.
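
One way to see why: under a normal approximation, the power of a two-sided, two-sample test of means with standardized effect size d and n subjects per group is Φ(d√(n/2) − z₁₋α/₂) + Φ(−d√(n/2) − z₁₋α/₂). The sketch below uses a hypothetical helper `approx_power` and assumes known variance and equal group sizes. For the same modest effect it shows how strongly power depends on sample size; a null result from a low-power study is weak evidence that the effect is absent.

```python
from statistics import NormalDist


def approx_power(effect_size: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Normal-approximation power of a two-sided, two-sample test of means.

    effect_size is the standardized mean difference (Cohen's d);
    assumes known variance and equal group sizes.
    """
    z = NormalDist()  # standard normal
    z_crit = z.inv_cdf(1 - alpha / 2)
    shift = effect_size * (n_per_group / 2) ** 0.5
    # Probability that the test statistic falls in either rejection region.
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)


for n in (10, 50, 200):
    print(f"n={n} per group: power={approx_power(0.3, n):.2f}")
```

With effect size 0 the function returns α, the false-positive rate, as it should.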

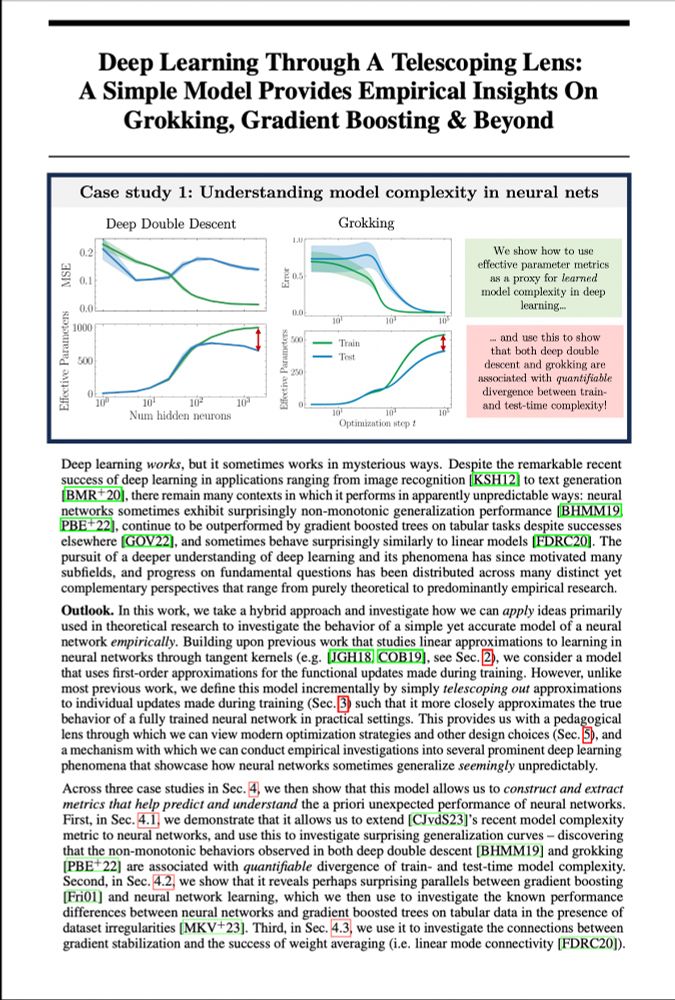

@alanjeffares.bsky.social & I suspected that answers to this are obscured by the two being considered very different algorithms 🤔

Instead, we show they are more similar than you'd think, which makes their differences smaller but predictive! 🧵1/n

For NeurIPS (my final PhD paper!), @alanjeffares.bsky.social & I explored if & how smart linearisation can help us better understand & predict numerous odd deep learning phenomena, and learned a lot along the way... 🧵1/n
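
For readers new to the term, the simplest form of linearisation is a first-order Taylor expansion of a network's output in its parameters: f(x; θ) ≈ f(x; θ₀) + ∇θ f(x; θ₀)·(θ − θ₀). The sketch below applies this to a hypothetical one-hidden-unit network (`net`, `grad` and `linearised` are illustrative names). It shows generic linearisation only and is not the specific method of the paper mentioned above.

```python
import math


def net(x: float, w1: float, w2: float) -> float:
    # Hypothetical one-hidden-unit network: f(x; w1, w2) = w2 * tanh(w1 * x)
    return w2 * math.tanh(w1 * x)


def grad(x: float, w1: float, w2: float) -> tuple:
    # Analytic gradient of net with respect to the parameters (w1, w2)
    t = math.tanh(w1 * x)
    return (w2 * (1 - t * t) * x, t)


def linearised(x: float, theta0: tuple, theta: tuple) -> float:
    # First-order Taylor expansion of net around theta0, evaluated at theta
    f0 = net(x, *theta0)
    g = grad(x, *theta0)
    return f0 + sum(gi * (t - t0) for gi, t, t0 in zip(g, theta, theta0))


def linearisation_error(x: float, theta0: tuple, step: float) -> float:
    # Gap between the true output and its linear approximation after a parameter step
    theta = (theta0[0] + step, theta0[1] + step)
    return abs(net(x, *theta) - linearised(x, theta0, theta))


x, theta0 = 1.5, (0.4, -0.8)
for step in (0.01, 0.5):
    print(f"step={step}: linearisation error={linearisation_error(x, theta0, step):.2e}")
```

The error is tiny for a small parameter step and grows roughly quadratically with the step size, which is why the choice of where and how to linearise matters.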