I'll show data demonstrating that synesthetic perception is perceptual, automatic, and effortless.

Join my talk (Thursday, early morning..., Color II) to learn how the qualia of synesthesia can be inferred from pupil size.

Join and/or say hi!

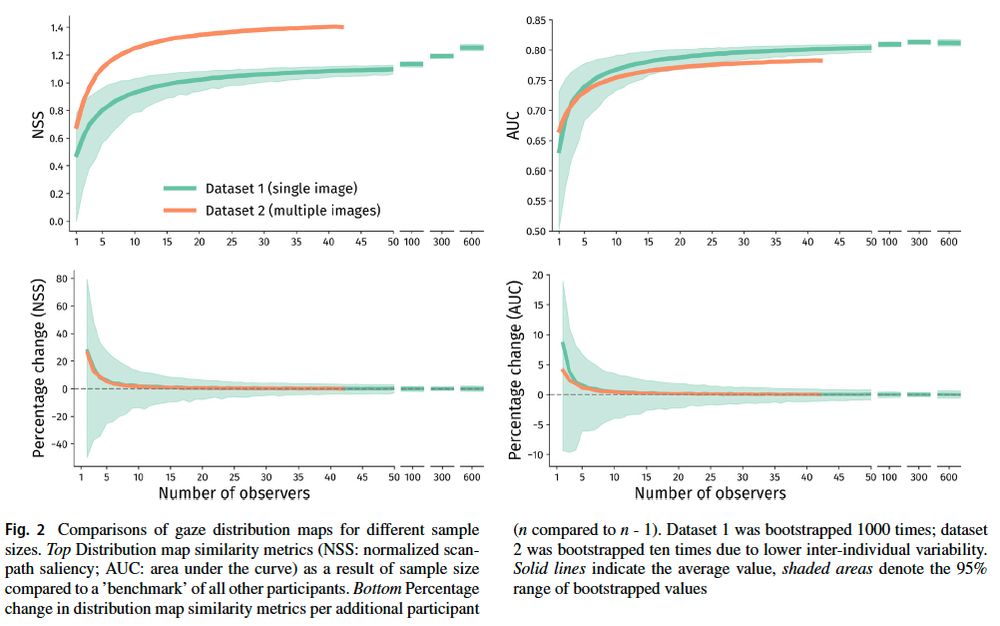

It depends, of course, but our guidelines help navigate this in an informed way.

Out now in BRM (free) doi.org/10.3758/s134...

@psychonomicsociety.bsky.social

@tianyingq.bsky.social's new PBR meta-analysis across 28 experiments shows: increases in access cost reliably push toward internal WM use.

doi.org/10.3758/s134...

#VisionScience 🧪

A rediscovery of the forgotten early wave of pupillometry research – effort, covert attention, imagery all made visible by 1900 in a fascinating literature.

authors.elsevier.com/a/1jKdtbotq3...

Pupils index the intensity of tactile stimulation (same location) and the different sensitivities across body parts (same intensity); one might build a homunculus model with it.

Out in Psychophysiology

doi.org/10.1111/psyp...

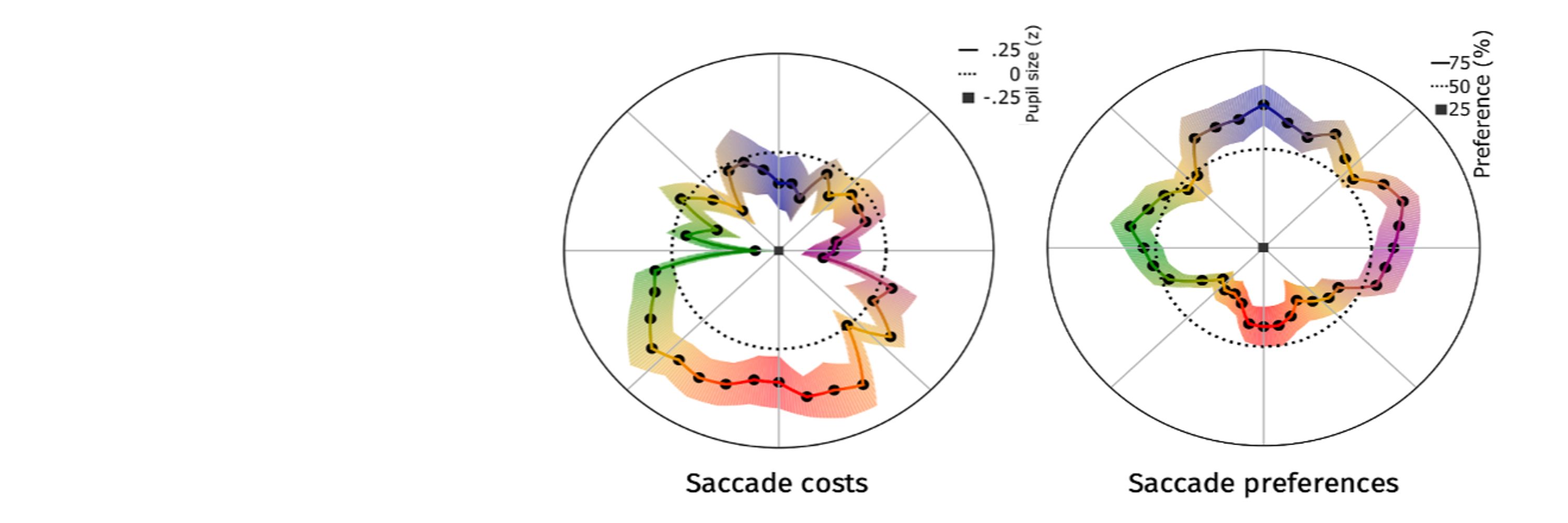

It’s not only what we see, it’s the most frequent human decision. We show that the predictors saliency, goals & scene knowledge need extension:

Eye movements are tuned to minimize the effort that comes with planning & making them.

Check 🧵 & www.biorxiv.org/content/10.1...

Very proud of Yuqing for her first PhD paper.

#psynomBRM might help here.

You can use it easily: Load video + eye-tracking data & wait for the model to produce its output

in the Nemo museum Amsterdam! Out in Communications Psych

t.ly/Y1-Ty TL;DR: models do well, especially if you are a psychology student.
