Compiler

@compiler.news

A nonprofit newsroom covering the people, institutions and global forces shaping our digital future.

"While there are diverging views on how to regulate AI," wrote @tekendra-parmar.bsky.social, "it's clear that Americans are paying attention and more aware of how AI may benefit or harm their lives."

Read the full story here 👇

www.compiler.news/trump-ai-act...

What Americans really feel about A.I.

The Trump administration's AI Action Plan received more than 10,000 comments. We analyzed all of them to find out how Americans feel about artificial intelligence and its impact on jobs, human rights,...

www.compiler.news

May 7, 2025 at 9:41 PM

The future of AI policy won’t be written by tech CEOs alone.

Thousands of Americans just made clear: They want innovation—but not without rules.

Ignore them at your peril.

May 7, 2025 at 9:41 PM

Sentiment analysis revealed something surprising: Emotionally, Americans are split—pretty evenly—on AI.

There’s skepticism, yes. But also trust, curiosity, and a surprising amount of hope.

May 7, 2025 at 9:41 PM

Anthropic warned of bioweapon risks.

Future of Life called for a moratorium on systems that could manipulate humans or evade control.

This was not a kumbaya moment among frontier labs.

May 7, 2025 at 9:41 PM

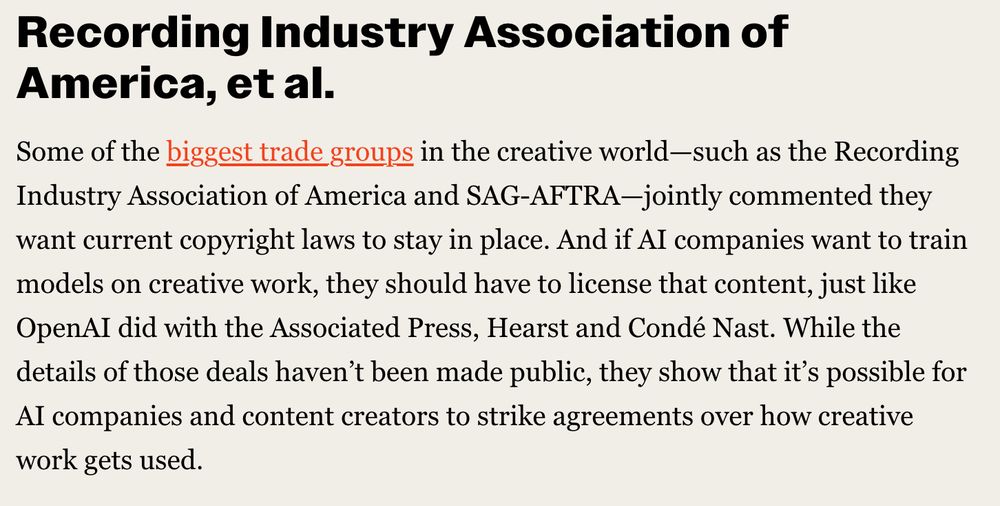

Record industry groups (RIAA, SAG-AFTRA, etc.) said: Scrap the race talk. Just license the damn content.

EFF said even that’s not enough. Licensing deals often screw over independent artists and do little to stop biased or harmful AI.

May 7, 2025 at 9:41 PM

Of course, companies had thoughts, too.

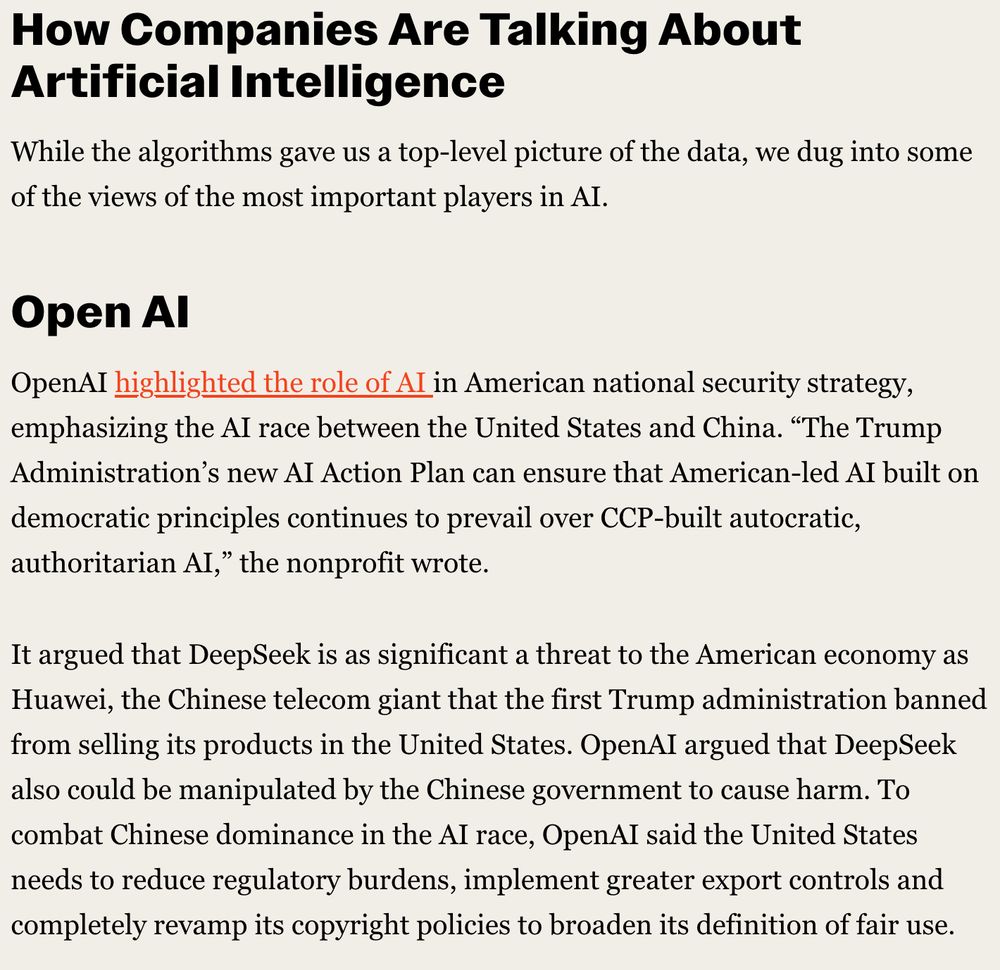

OpenAI used its comment to frame AI as a Cold War tech race with China, arguing for fewer regulations and more export controls.

Also, OpenAI is being sued by creators over alleged copyright violations.

May 7, 2025 at 9:41 PM

Many raised sharp questions about how the U.S. Copyright Office handles AI-assisted work.

They weren't just mad about stolen art. They were interrogating the rules, precedent and fairness.

May 7, 2025 at 9:41 PM

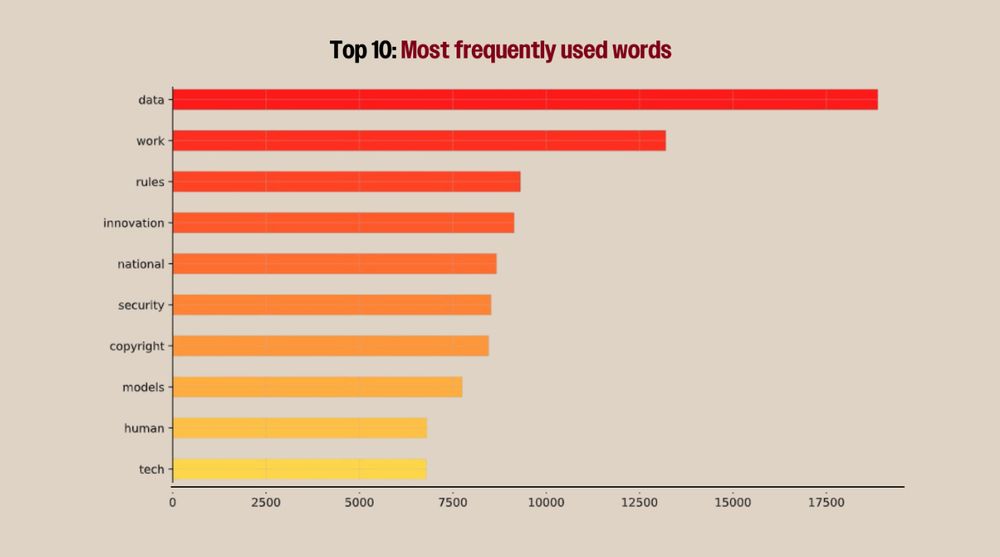

Using machine learning and sentiment tools, we pulled themes from the comments.

Top words? “Data,” “work,” “rules” and “innovation.”

Top concern? Copyright—especially how creators’ work is being scraped to train models.

May 7, 2025 at 9:41 PM

The public feedback revealed a deeply engaged, highly nuanced conversation.

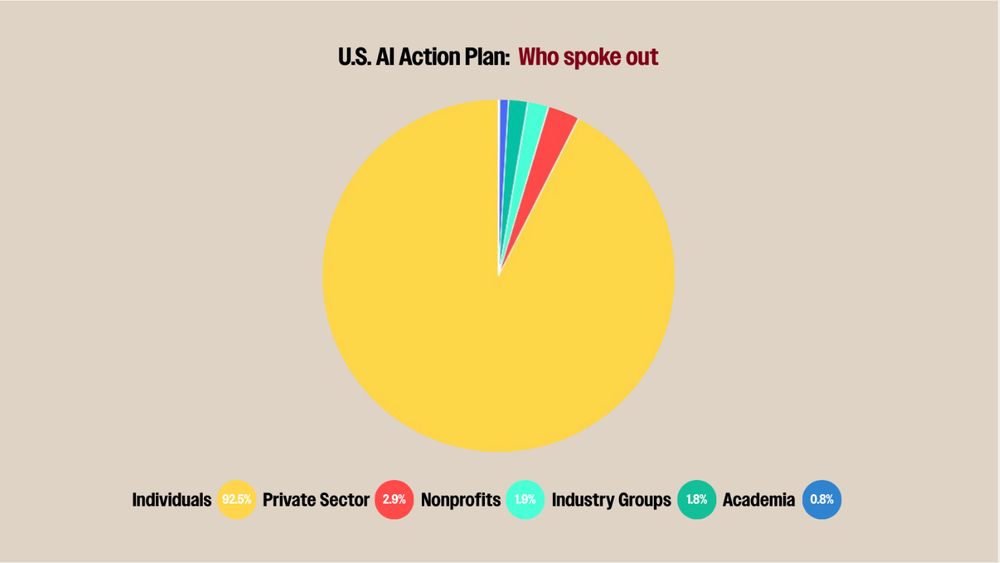

90%+ of responses came from individuals, not companies.

Most weren’t doomsayers—they asked hard questions about labor, copyright and data.

May 7, 2025 at 9:41 PM

Reposted by Compiler

And if you can, subscribe to our newsletter and follow @compiler.news. As an editor, I'm always grateful to be edited. @elleryrb.bsky.social is always baller to work with. www.compiler.news

Compiler

Tech policy news, analysis and opinion

www.compiler.news

April 10, 2025 at 6:30 PM
