Zaid Khan

@codezakh.bsky.social

PhD student @ UNC NLP with @mohitbansal working on grounded reasoning + code generation | currently interning at Ai2 (PRIOR) | formerly NEC Laboratories America | BS + MS @ Northeastern

zaidkhan.me

It was a fun collaboration with @esteng.bsky.social @archiki.bsky.social @jmincho.bsky.social @mohitbansal.bsky.social! 🥳

Paper: arxiv.org/abs/2504.09763

Project Page: zaidkhan.me/EFAGen

Datasets + Models: huggingface.co/collections/...

HF Paper: huggingface.co/papers/2504....

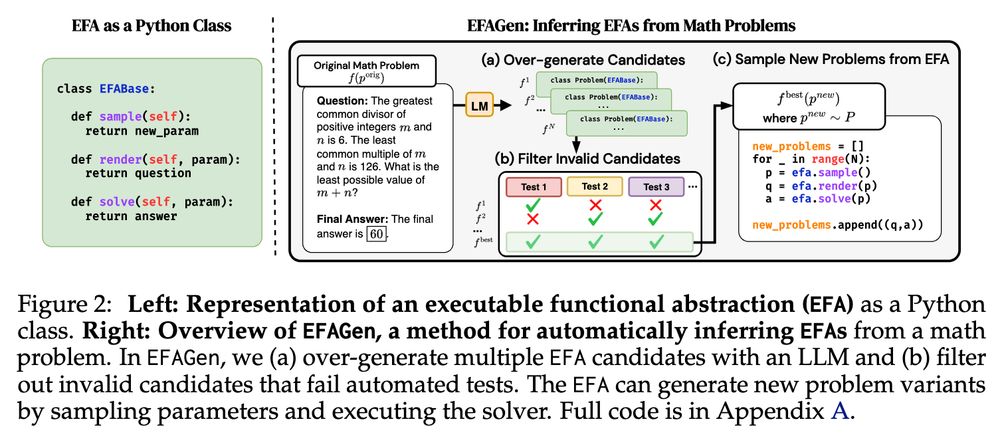

Executable Functional Abstractions: Inferring Generative Programs for Advanced Math Problems

Scientists often infer abstract procedures from specific instances of problems and use the abstractions to generate new, related instances. For example, programs encoding the formal rules and properti...

arxiv.org
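To make the idea concrete, here is a minimal Python sketch of what an EFA could look like. It assumes a simple `sample` / `render` / `solve` interface around a toy problem; the class, method, and field names are hypothetical illustrations, not the interface used in the paper.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Params:
    """Parameters that pin down one concrete problem instance (hypothetical)."""
    n: int


class SumOfOddsEFA:
    """Hypothetical EFA for: 'What is the sum of the first n positive odd integers?'"""

    def sample(self, rng: random.Random) -> Params:
        # Draw a fresh parameter setting; the range is an arbitrary choice.
        return Params(n=rng.randint(2, 50))

    def render(self, p: Params) -> str:
        # Turn parameters into problem text.
        return f"What is the sum of the first {p.n} positive odd integers?"

    def solve(self, p: Params) -> int:
        # Compute the answer by executing a brute-force sum, not a memorized formula.
        return sum(2 * k + 1 for k in range(p.n))


if __name__ == "__main__":
    efa = SumOfOddsEFA()
    seed_instance = Params(n=10)  # the original ("seed") problem
    print(efa.render(seed_instance), "->", efa.solve(seed_instance))
    rng = random.Random(0)
    for _ in range(3):  # new, related instances from the same abstraction
        p = efa.sample(rng)
        print(efa.render(p), "->", efa.solve(p))
```

The point of the structure is that the answer comes from running code, so every generated variant ships with an answer that is correct by construction (assuming `solve` itself is correct).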

April 15, 2025 at 7:37 PM

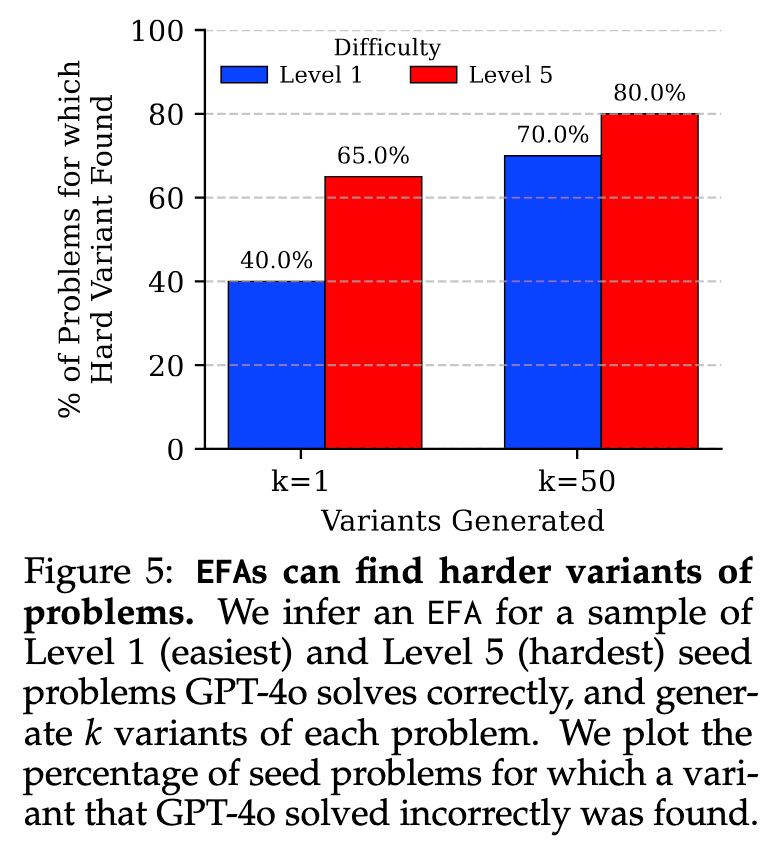

EFAs can be used for adversarial search to find harder problem variants. This has some interesting potential uses, such as finding fresh problems for online RL or identifying gaps / inconsistencies in a model’s reasoning ability. We can find variants of even Level 1 problems that GPT-4o solves incorrectly.
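A minimal sketch of this kind of search, assuming a toy EFA for remainder problems and a deliberately flawed stand-in for the model being probed. `toy_model` is hypothetical; a real setup would prompt GPT-4o (or another LM) with the rendered problem and parse its answer.

```python
import random


def render(a: int, m: int) -> str:
    # Hypothetical EFA rendering: problem text from parameters.
    return f"What is the remainder when {a} is divided by {m}?"


def solve(a: int, m: int) -> int:
    # Ground-truth answer, obtained by executing the program.
    return a % m


def toy_model(a: int, m: int) -> int:
    # Stand-in for the model under test: it mishandles large dividends.
    return a % m if a < 1000 else (a // 10) % m


def adversarial_search(budget: int, seed: int = 0) -> list[dict]:
    """Sample variants from the EFA and keep those the model answers incorrectly."""
    rng = random.Random(seed)
    failures = []
    for _ in range(budget):
        a, m = rng.randint(10, 5000), rng.randint(2, 12)
        if toy_model(a, m) != solve(a, m):
            failures.append({"a": a, "m": m, "problem": render(a, m), "answer": solve(a, m)})
    return failures


if __name__ == "__main__":
    for f in adversarial_search(50)[:3]:
        print(f["problem"], "->", f["answer"])
```

Because the EFA supplies the correct answer for every sampled variant, no human labeling is needed to decide which variants the model gets wrong.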

April 15, 2025 at 7:37 PM

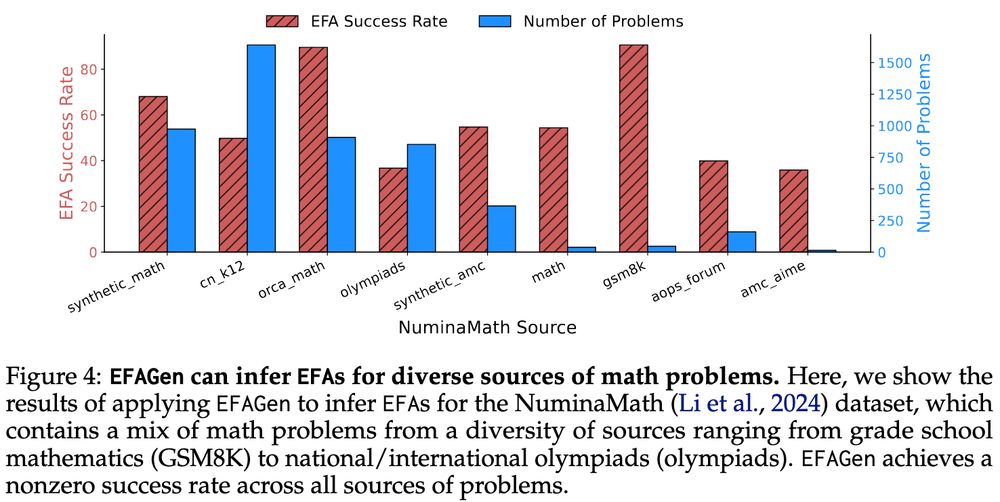

EFAGen can infer EFAs for diverse sources of math data.

We demonstrate this by inferring EFAs for problems in the NuminaMath dataset, which ranges from grade-school to olympiad-level problems. EFAGen can successfully infer EFAs for all math sources in NuminaMath, even olympiad-level problems.

April 15, 2025 at 7:37 PM

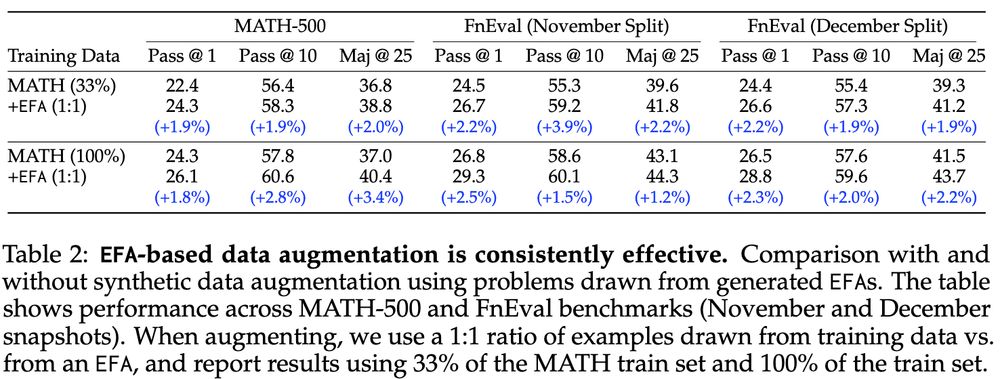

EFAs are effective at augmenting training data.

Getting high-quality math data is expensive. EFAGen offers a way to improve upon existing math training data by generating problem variants through EFAs. EFA-based augmentation leads to consistent improvements across all evaluation metrics.
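A minimal sketch of EFA-based augmentation, assuming a toy perimeter EFA: sample parameter settings, skip duplicates, and pair each rendered problem with the answer obtained by executing `solve`. The function names and the record format are assumptions for illustration, not the paper's pipeline.

```python
import json
import random


def sample_params(rng: random.Random) -> dict:
    # Hypothetical EFA for: "A rectangle has length L and width W. What is its perimeter?"
    return {"length": rng.randint(1, 40), "width": rng.randint(1, 40)}


def render(p: dict) -> str:
    return f"A rectangle has length {p['length']} and width {p['width']}. What is its perimeter?"


def solve(p: dict) -> int:
    return 2 * (p["length"] + p["width"])


def augment(num_variants: int, seed: int = 0, max_tries: int = 10_000) -> list[dict]:
    """Draw unique problem variants and pair each with its executed answer."""
    rng = random.Random(seed)
    seen, records = set(), []
    for _ in range(max_tries):
        if len(records) >= num_variants:
            break
        p = sample_params(rng)
        key = (p["length"], p["width"])
        if key in seen:  # skip duplicate parameter settings
            continue
        seen.add(key)
        records.append({"problem": render(p), "answer": solve(p)})
    return records


if __name__ == "__main__":
    for rec in augment(3):
        print(json.dumps(rec))
```

The resulting records could then be mixed into an existing training set; `max_tries` simply bounds the loop when the parameter space is small.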

April 15, 2025 at 7:37 PM

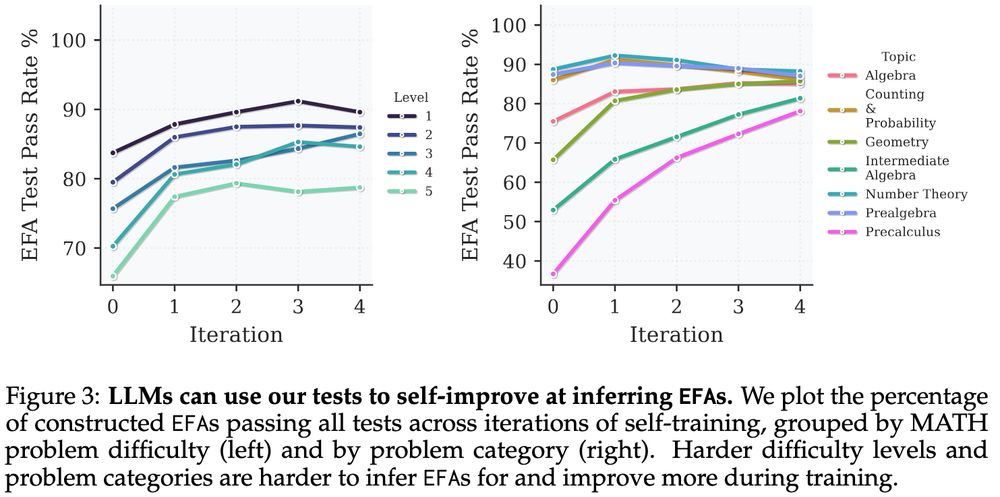

LMs can self-improve at inferring EFAs with execution feedback!

We self-train Llama-3.1-8B-Instruct with rejection finetuning using our derived unit tests as a verifiable reward signal and see substantial improvements in the model’s ability to infer EFAs, especially on harder problems.
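A minimal sketch of the filtering step in rejection finetuning, assuming each sampled EFA is Python source and each unit test is a predicate over the executed namespace. The function names, the toy checks, and the hard-coded samples are hypothetical; in the actual setup the samples would come from Llama-3.1-8B-Instruct, and the finetuning step itself is omitted here.

```python
from typing import Callable, Optional

Check = Callable[[dict], bool]


def load(src: str) -> Optional[dict]:
    """Execute candidate source in a fresh namespace; None if it fails to run."""
    namespace: dict = {}
    try:
        exec(src, namespace)  # untrusted model output: sandbox this in practice
    except Exception:
        return None
    return namespace


def passes(src: str, checks: list[Check]) -> bool:
    """True only if the candidate runs and every check returns True without raising."""
    ns = load(src)
    if ns is None:
        return False
    try:
        return all(check(ns) for check in checks)
    except Exception:
        return False


def rejection_filter(prompt: str, samples: list[str], checks: list[Check]) -> list[dict]:
    """Keep passing samples as (prompt, completion) pairs for the next finetuning round."""
    return [{"prompt": prompt, "completion": s} for s in samples if passes(s, checks)]


# Hypothetical checks for the seed problem "sum of the first 10 positive odd integers" (answer 100).
checks: list[Check] = [
    lambda ns: callable(ns.get("solve")),
    lambda ns: ns["solve"](10) == 100,
    lambda ns: ns["solve"](3) == 9,
]

# Hypothetical model samples: one correct, one wrong, one that does not even parse.
samples = [
    "def solve(n):\n    return sum(2 * k + 1 for k in range(n))\n",
    "def solve(n):\n    return n * (n + 1)\n",
    "def solve(n) return n\n",
]

kept = rejection_filter("Infer an EFA for the seed problem.", samples, checks)
print(len(kept))  # 1
```

The unit tests act as a binary, verifiable reward: only samples that pass are kept as training targets, so the model learns from its own verified outputs.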

April 15, 2025 at 7:37 PM

Key Insight💡: We formalize properties any valid EFA must possess as unit tests and treat EFA inference as a program synthesis task to which we can apply test-time search.
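A minimal sketch of the idea, assuming EFAs expose `seed_params`, `sample`, `render`, and `solve` methods. The three property checks (reproduce the seed problem, reproduce its answer, generate more than one distinct instance) are illustrative assumptions, not the paper's exact test suite, and the search is plain best-of-N over hand-written candidates standing in for LM samples.

```python
import random
from typing import Callable

SEED_PROBLEM = "What is the sum of the first 10 positive odd integers?"
SEED_ANSWER = 100


def reproduces_seed_problem(efa) -> bool:
    return efa.render(efa.seed_params()) == SEED_PROBLEM


def reproduces_seed_answer(efa) -> bool:
    return efa.solve(efa.seed_params()) == SEED_ANSWER


def generates_variety(efa, draws: int = 20) -> bool:
    rng = random.Random(0)
    return len({efa.render(efa.sample(rng)) for _ in range(draws)}) > 1


PROPERTIES: list[Callable] = [reproduces_seed_problem, reproduces_seed_answer, generates_variety]


def score(efa) -> int:
    """Number of properties the candidate satisfies; a crashing check counts as a failure."""
    passed = 0
    for prop in PROPERTIES:
        try:
            passed += bool(prop(efa))
        except Exception:
            pass
    return passed


class GoodEFA:
    def seed_params(self):
        return {"n": 10}

    def sample(self, rng):
        return {"n": rng.randint(2, 50)}

    def render(self, p):
        return f"What is the sum of the first {p['n']} positive odd integers?"

    def solve(self, p):
        return p["n"] ** 2


class ConstantEFA(GoodEFA):
    def sample(self, rng):
        return {"n": 10}  # only ever emits the seed problem


class WrongAnswerEFA(GoodEFA):
    def solve(self, p):
        return p["n"] * (p["n"] + 1)  # reproduces the wrong seed answer


def best_of_n(candidates):
    """Test-time search: keep the candidate that passes the most properties."""
    return max(candidates, key=score)


best = best_of_n([ConstantEFA(), WrongAnswerEFA(), GoodEFA()])
print(type(best).__name__, score(best))  # GoodEFA 3
```

Each failing candidate passes some checks but not all, which is why a suite of properties is more useful than any single test for telling a valid EFA apart from a plausible-looking one.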

April 15, 2025 at 7:37 PM

➡️ EFAGen can generate data to augment static math datasets

➡️ EFAGen can infer EFAs for diverse + difficult math problems

➡️ Use EFAs to find + generate harder variants of existing math problems

➡️ LLMs can self-improve at writing EFAs

April 15, 2025 at 7:37 PM

Reposted by Zaid Khan

-- positional bias of faithfulness for long-form summarization

-- improving generation faithfulness via multi-agent collaboration

(PS. Also a big thanks to ACs+reviewers for their effort!)

January 27, 2025 at 9:38 PM

Reposted by Zaid Khan

-- safe T2I/T2V gener

-- generative infinite games

-- procedural+predictive video repres learning

-- bootstrapping VLN via self-refining data flywheel

-- automated preference data synthesis

-- diagnosing cultural bias of VLMs

-- adaptive decoding to balance contextual+parametric knowl conflicts

🧵

January 27, 2025 at 9:38 PM

Reposted by Zaid Khan

-- adapting diverse ctrls to any diffusion model

-- balancing fast+slow sys-1.x planning

-- balancing agents' persuasion resistance+acceptance

-- multimodal compositional+modular video reasoning

-- reverse thinking for stronger LLM reasoning

-- lifelong multimodal instruc tuning via dyn data selec

🧵

January 27, 2025 at 9:38 PM
