Deep Learning | ML Robustness | AI Safety | Uncertainty Quantification

Thanks to my amazing collaborators:

- @alasdair-p.bsky.social, Preetham Arvind, @maximek3.bsky.social, Tom Rainforth, @philiptorr.bsky.social, @adelbibi.bsky.social at @ox.ac.uk

- Bernard Ghanem at KAUST

- Thomas Lukasiewicz at @tuwien.at.

(7/7)

(6/7)

(6/7)

(5/7)

(5/7)

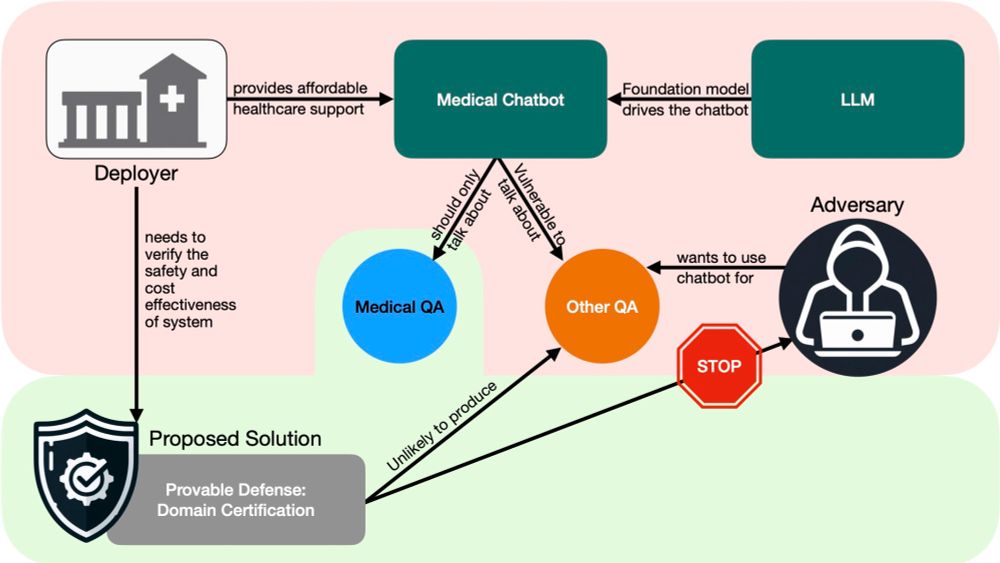

- **Domain Certification:** a framework for adversarial certification of LLMs.

- **VALID:** a simple, scalable and effective test-time algorithm.

(4/7)

- **Domain Certification:** a framework for adversarial certification of LLMs.

- **VALID:** a simple, scalable and effective test-time algorithm.

(4/7)

(3/7)

(3/7)

⚠️ Problem: LLMs are highly capable, which also makes them vulnerable to answering **any** query: how to build a bomb, how to organize tax fraud, etc.

(2/7)

⚠️ Problem: LLMs are very capable and vulnerable to respond to **any** queries: how to build a bomb, organize tax fraud etc.

(2/7)

A @oxfordtvg.bsky.social production.

(6/6)

Link to paper:

openreview.net/forum?id=brD...

A @oxfordtvg.bsky.social production.

(6/6)

Link to paper:

openreview.net/forum?id=brD...

Join us at the SoLaR workshop tomorrow.

- 🕚 When: Tomorrow, 14 Dec, from 11:00 to 13:00.

- 🗺️ Where: West meeting rooms 121 and 122 here in Vancouver.

(5/6)

Join us at the SoLaR workshop tomorrow.

- 🕚 When: Tomorrow, 14 Dec, from 11pm to 13pm.

- 🗺️ Where: West meeting rooms 121 and 122 here in Vancouver.

(5/6)

(4/6)

(4/6)

- **Domain Certification:** a framework for adversarial certification of LLMs.

- **VALID:** a simple, scalable and efficient test-time algorithm.

(3/6)

- **Domain Certification:** a framework for adversarial certification of LLMs.

- **VALID:** a simple, scalable and efficient test-time algorithm.

(3/6)

(2/6)

For instance: Can't afford ChatGPT Plus? Use a shopping app instead.

(2/6)

For instance: Can't afford ChatGPT Plus? Use a shopping app instead.