website: https://kulyny.ch

Whatever it is, Nature Careers is all in.

@olivia.science

@abeba.bsky.social

@irisvanrooij.bsky.social

@alexhanna.bsky.social

@rocher.lc

@danmcquillan.bsky.social

@robin.berjon.com

& many others have signed

www.iccl.ie/press-releas...

www.washingtonpost.com/technology/2...

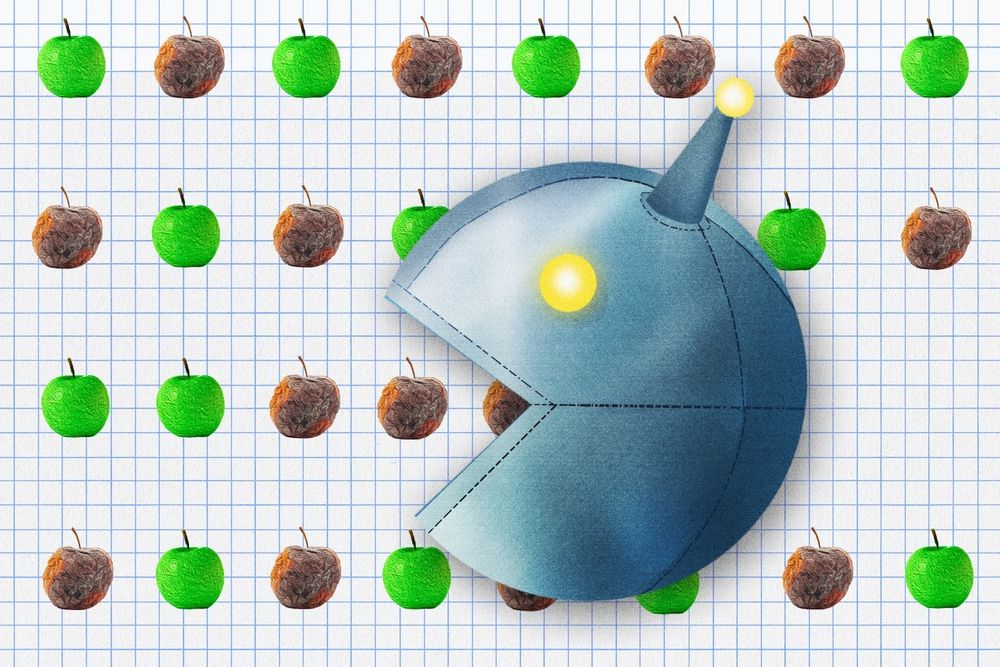

This is due to being overwhelmed by hundreds of AI-generated papers a month.

Yet another open submission process killed by LLMs.

www.bbc.co.uk/mediacentre/...

Proof: different articles appear at the specified journal/volume/page numbers, and the cited titles exist nowhere in any searchable repository.

Take this as a warning to not use LMs to generate your references!

"Are language models worth it?"

Explains that the prior decade of his work on adversarial images, while it taught us a lot, isn't very applied; it's unlikely anyone is actually altering images of cats in scary ways.

"Talkin' 'Bout AI Generation: Copyright and the Generative-AI Supply Chain," is out in the 72nd volume of the Journal of the Copyright Society

copyrightsociety.org/journal-entr...

written with @katherinelee.bsky.social & @jtlg.bsky.social (2023)

20 years later, the www is a great technology, yet here we are now with all the dopamine-driven design and social polarization.

But I guess we’ll see.

Large language models provide unsafe answers to patient-posed medical questions

https://arxiv.org/abs/2507.18905

Bogdan Kulynych, Juan Felipe Gomez, Georgios Kaissis, Jamie Hayes, Borja Balle, Flavio du Pin Calmon, Jean Louis Raisaro

http://arxiv.org/abs/2507.06969

It's called Hiding Nemo, and you can read all about it on our website ➡️ https://hiding-nemo.com 🪸
