Bionic Vision Lab

@bionicvisionlab.org

👁️🧠🖥️🧪🤖 What would the world look like with a bionic eye? Interdisciplinary research group at UC Santa Barbara. PI: @mbeyeler.bsky.social

#BionicVision #Blindness #NeuroTech #VisionScience #CompNeuro #NeuroAI

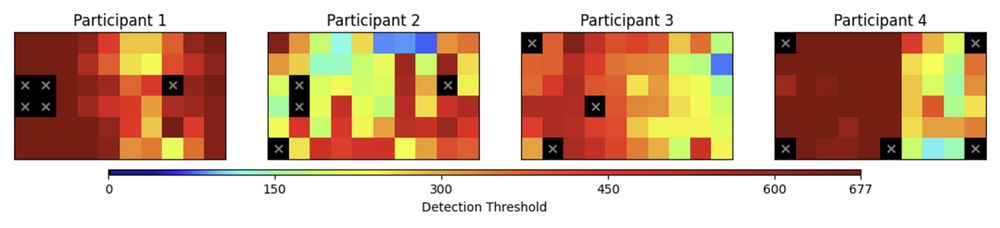

🧠 Building on Roksana Sadeghi’s work: Calibrating retinal implants is slow and tedious. Can Gaussian Process Regression (GPR) guide smarter sampling?

✅ GPR + spatial sampling = fewer trials, same accuracy

🔁 Toward faster, personalized calibration

🔗 bionicvisionlab.org/publications...

#EMBC2025

Efficient spatial estimation of perceptual thresholds for retinal implants via Gaussian process regression | Bionic Vision Lab

We propose a Gaussian Process Regression (GPR) framework to predict perceptual thresholds at unsampled locations while leveraging uncertainty estimates to guide adaptive sampling.

bionicvisionlab.org

July 13, 2025 at 5:24 PM

🎓 Proud of our undergrad(!) Eirini Schoinas for leading this:

bionicvisionlab.org/publications...

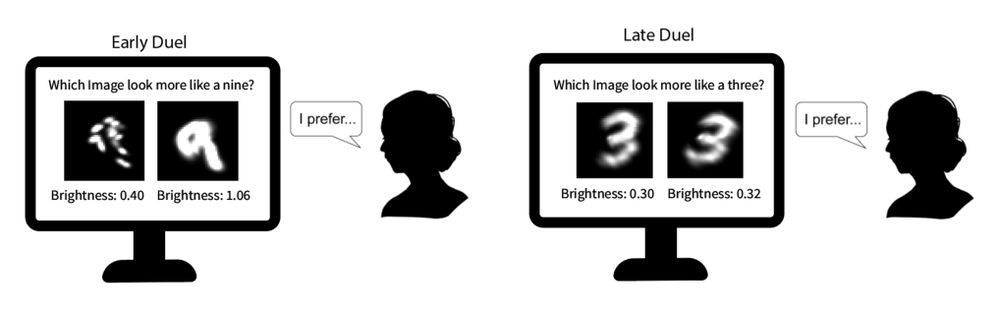

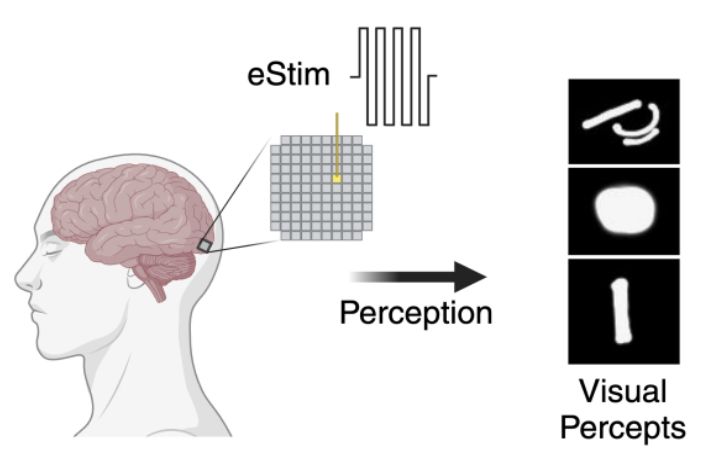

🧠 Human-in-the-loop optimization (HILO) works in silico—but does it hold up with real people?

✅ HILO outperformed naïve and deep encoders

🔁 A step toward personalized #BionicVision

#EMBC2025

bionicvisionlab.org/publications...

Evaluating deep human-in-the-loop optimization for retinal implants using sighted participants | Bionic Vision Lab

We evaluate HILO using sighted participants viewing simulated prosthetic vision to assess its ability to optimize stimulation strategies under realistic conditions.

bionicvisionlab.org

July 13, 2025 at 5:24 PM

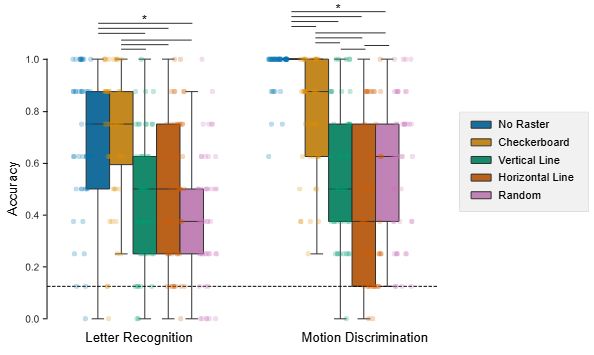

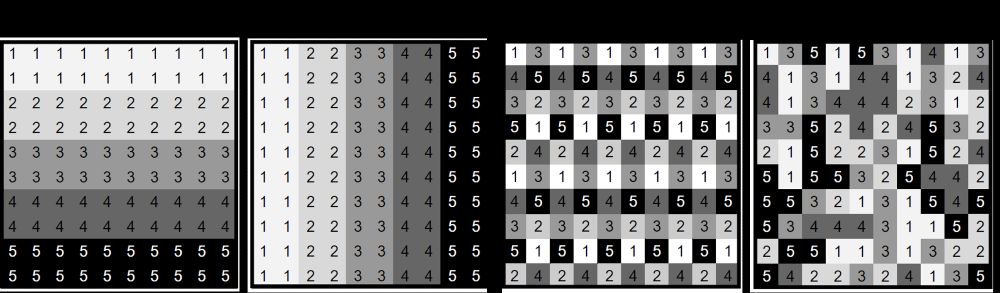

This matters. Checkerboard rastering:

✔️ works across tasks

✔️ requires no fancy calibration

✔️ is hardware-agnostic

A low-cost, high-impact tweak that could make future visual prostheses more usable and more intuitive.

#BionicVision #BCI #NeuroTech

July 9, 2025 at 4:55 PM

✅ Checkerboard consistently outperformed the other patterns—higher accuracy, lower difficulty, fewer motion artifacts.

💡 Why? More spatial separation between activations = less perceptual interference.

It even matched performance of the ideal “no raster” condition, without breaking safety rules.

July 9, 2025 at 4:55 PM

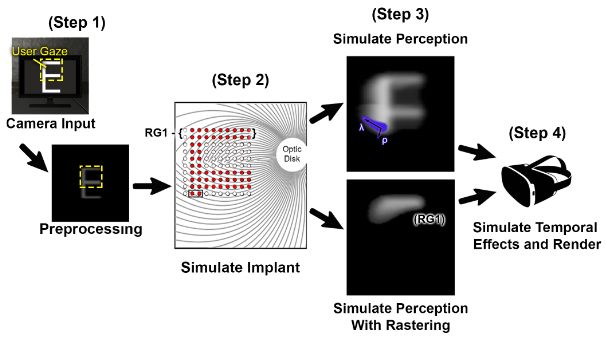

We ran a simulated prosthetic vision study in immersive VR using gaze-contingent, psychophysically grounded models of epiretinal implants.

🧪 Powered by BionicVisionXR.

📐 Modeled 100-electrode Argus-like array.

👀 Realistic phosphene appearance, eye/head tracking.

July 9, 2025 at 4:55 PM

Checkerboard rastering has been used in #BCI and #NeuroTech applications, often based on intuition.

But is it actually better, or just tradition?

No one had rigorously tested how these patterns impact perception in visual prostheses.

So we did.

July 9, 2025 at 4:55 PM

Reposted by Bionic Vision Lab

Last but not least is Lily Turkstra, whose poster is assessing the efficacy of visual augmentations for high-stress navigation:

Tue, 2:45 - 6:45pm, Pavilion: Poster #56.472

www.visionsciences.org/presentation...

👁️🧪 #XR #VirtualReality #Unity3D #VSS2025

VSS Presentation – Vision Sciences Society

www.visionsciences.org

May 20, 2025 at 2:10 PM

Reposted by Bionic Vision Lab

Coming up: Jacob Granley on whether V1 maintains working memory via spiking activity. Prior evidence from fMRI and LFPs - now, rare intracortical recordings in a blind human offer a chance to test it directly. 👁️ #VSS2025

🕥 Sun 10:45pm · Talk Room 1

🧠 www.visionsciences.org/presentation...

May 18, 2025 at 12:26 PM

Reposted by Bionic Vision Lab

Check out his talk, "Learning to See Again: Building a Smarter Bionic Eye," on Monday, April 14th, 4pm-6pm at Mosher Alumni House.💡

For more info click here: www.campuscalendar.ucsb.edu/event/beyele...

Michael Beyeler | 2024-2025 Plous Award Lecture

"Learning to See Again: Building a Smarter Bionic Eye" What does it mean to see with a bionic eye? While modern visual prosthetics can generate flashes of light, they don’t yet restore natural…

www.campuscalendar.ucsb.edu

April 11, 2025 at 6:00 PM
