Lew et al. (2023): arxiv.org/abs/2306.03081

Loula et al. (2025): arxiv.org/abs/2504.13139

Paper Link: arxiv.org/abs/2504.05410

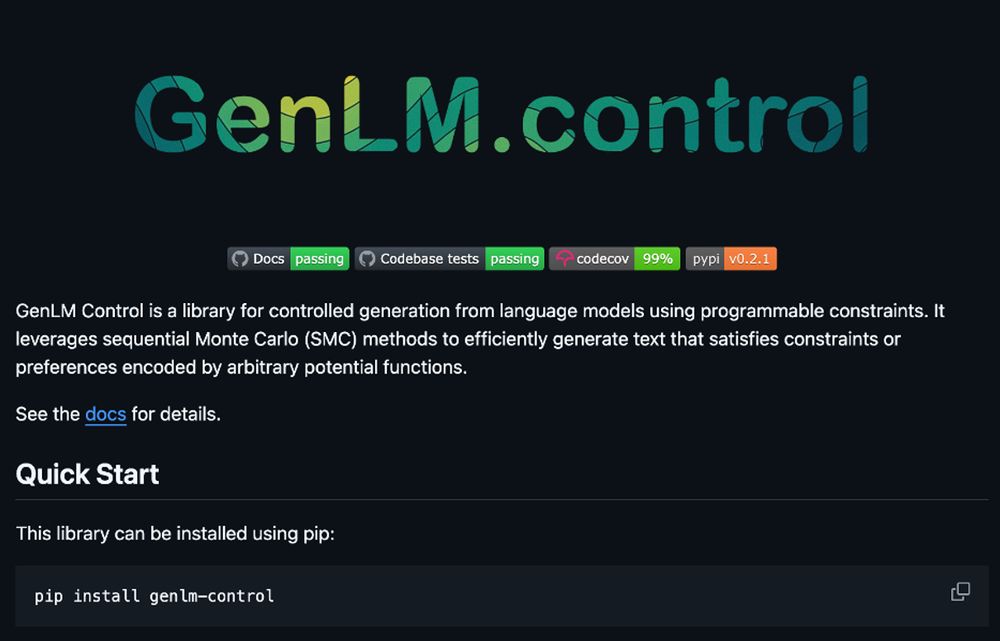

Check out the GenLM control library: github.com/genlm/genlm-...

GenLM supports not only grammars, but arbitrary programmable constraints from type systems to simulators.

If you can write a Python function, you can control your language model!
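
As a rough illustration of what "a Python function as a constraint" can mean, here is a plain predicate on a complete string. It does not use GenLM's API (which is not shown in this thread); `is_valid_json_object` is a hypothetical name chosen for the example.

```python
import json

def is_valid_json_object(text: str) -> bool:
    """Hypothetical constraint C(x): True iff text parses as a JSON object."""
    try:
        return isinstance(json.loads(text), dict)
    except json.JSONDecodeError:
        return False

print(is_valid_json_object('{"goal": "stack A on B"}'))
print(is_valid_json_object('{"goal": '))
```

Any check that can be expressed this way (a parser, a type checker, a simulator call) plays the role of 𝐶 in principle.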

Formal and empirical runtime analyses tell a fascinating story.

The runtime of AWRS (adaptive weighted rejection sampling) scales adaptively with the KL divergence between the conditional and base token-level models.

As your LM better understands the constraint, AWRS gets faster.

As the LM struggles, AWRS closes the gap.
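
One way to read the KL claim, assuming a hard constraint at the token level. The symbols 𝑝 (base next-token distribution), 𝑉_𝐶 (set of valid tokens), 𝑝_𝐶 (conditional distribution), and 𝑍 are introduced here for illustration and are not from the thread:

```latex
\begin{aligned}
p_C(t) &= \frac{p(t)\,\mathbf{1}[t \in V_C]}{Z},
\qquad Z = \sum_{t \in V_C} p(t), \\
\mathrm{KL}\big[p_C \,\|\, p\big]
  &= \sum_{t \in V_C} p_C(t)\,\log\frac{p_C(t)}{p(t)}
   = \sum_{t \in V_C} p_C(t)\,\log\frac{1}{Z}
   = -\log Z .
\end{aligned}
```

So exp(KL) = 1/𝑍, which is the expected number of draws for plain rejection sampling (with replacement) from 𝑝. When the LM already puts most of its mass on valid tokens, 𝑍 is close to 1 and few draws are needed. This is a sketch of the intuition only, not the paper's runtime bound.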

AWRS SMC outperforms baselines by large margins, e.g., see the jump from 3% to 53% in the goal inference domain, with only ~2.5x wall-clock time overhead.

These particles are resampled proportional to their weights, re-allocating computation towards the most promising sequences.
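
A minimal sketch of the resampling step, assuming plain multinomial resampling (the thread does not say which scheme is used). The particle strings and weights are invented, and `resample` is a hypothetical helper.

```python
import random

def resample(particles, weights, rng):
    """Multinomial resampling: draw len(particles) particles with replacement,
    each with probability proportional to its weight, then reset the weights."""
    n = len(particles)
    total = sum(weights)
    chosen = rng.choices(particles, weights=weights, k=n)
    # Every survivor carries the average weight, which preserves the total.
    return chosen, [total / n] * n

rng = random.Random(0)
particles = ["SELECT *", "SELEKT", "SELECT id", "DROP"]  # hypothetical partial sequences
weights = [0.45, 0.0, 0.50, 0.05]                        # hypothetical importance weights

new_particles, new_weights = resample(particles, weights, rng)
print(new_particles, new_weights)
```

Zero-weight particles are never chosen, so their compute budget is handed to copies of higher-weight particles.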

This process yields equivalently distributed samples at a fraction of the cost.

AWRS then estimates and propagates an importance weight alongside these samples.
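
A minimal sketch of the rejection idea, assuming a hard per-token validity check: draw a token from the LM, test only that token, and on rejection remove it and redraw. The accepted token follows the same distribution as full masking, but the constraint is evaluated only on tokens that were drawn. This is an illustration, not the paper's algorithm: the importance-weight estimate is omitted, and `adaptive_rejection_sample`, `is_valid`, and the toy vocabulary are hypothetical.

```python
import random

def adaptive_rejection_sample(probs, is_valid, rng):
    """Sample a token from probs restricted to valid tokens, checking the
    constraint only on tokens that are actually drawn.

    Rejected tokens are removed before redrawing, so no token is checked twice.
    Returns (token, number_of_constraint_checks).
    """
    remaining = dict(probs)
    checks = 0
    while remaining:
        tokens = list(remaining)
        token = rng.choices(tokens, weights=[remaining[t] for t in tokens])[0]
        checks += 1
        if is_valid(token):
            return token, checks
        del remaining[token]  # adapt: never propose this token again
    raise ValueError("no token satisfies the constraint")

# Toy next-token distribution: 200 tokens, the first 20 are valid and
# together hold 90% of the LM's probability mass (all numbers invented).
VOCAB, N_VALID = 200, 20
probs = {t: (0.9 / N_VALID if t < N_VALID else 0.1 / (VOCAB - N_VALID))
         for t in range(VOCAB)}

def is_valid(token):
    return token < N_VALID

rng = random.Random(0)
results = [adaptive_rejection_sample(probs, is_valid, rng) for _ in range(5000)]
mean_checks = sum(c for _, c in results) / len(results)
print(f"mean constraint checks per token: {mean_checks:.2f} (full masking needs {VOCAB})")
```

When the LM already favors valid tokens, most draws are accepted on the first try, which is the "fraction of the cost" effect in miniature.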

AWRS SMC is a hierarchical inference framework based on sequential Monte Carlo using a novel stochastic proposal algorithm.

By jointly considering local and global signals, AWRS SMC is both probabilistically sound and sample efficient.

How does it work?

Consider this simple LM over the tokens `a` and `b` with the constraint that “strings must end with `a`”.

While the distribution on complete strings favors `ba`, autoregressive sampling will favor `ab`.

We don’t want this.
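
The distortion can be reproduced with a tiny enumeration. This is a sketch with invented probabilities over length-2 strings, not the numbers from the thread's figure, so the specific strings that win differ: here the global target favors `ba` while token-by-token masking favors `aa`.

```python
# Hypothetical two-token LM; every probability below is invented.
FIRST = {"a": 0.6, "b": 0.4}                  # Q(x1)
SECOND = {"a": {"a": 0.1, "b": 0.9},          # Q(x2 | x1 = "a")
          "b": {"a": 0.9, "b": 0.1}}          # Q(x2 | x1 = "b")

def ends_with_a(x: str) -> bool:
    return x.endswith("a")

# Base LM over complete length-2 strings.
Q = {x1 + x2: FIRST[x1] * SECOND[x1][x2] for x1 in FIRST for x2 in SECOND[x1]}

# Global target: condition the whole-string distribution on the constraint.
Z = sum(q for x, q in Q.items() if ends_with_a(x))
target = {x: q / Z for x, q in Q.items() if ends_with_a(x)}

# Locally constrained decoding: mask and renormalize one token at a time.
# No first token is ruled out (either can still be followed by "a"),
# so step 1 is sampled from the unmasked LM; step 2 is forced to "a".
lcd = {}
for x1, p1 in FIRST.items():
    valid = {x2: p for x2, p in SECOND[x1].items() if ends_with_a(x1 + x2)}
    norm = sum(valid.values())
    for x2, p in valid.items():
        lcd[x1 + x2] = p1 * p / norm

print("target:", {x: round(p, 3) for x, p in target.items()})
print("LCD:   ", {x: round(p, 3) for x, p in lcd.items()})
```

The local mask never accounts for how likely each first token is to lead to a valid ending, so the prefix `a` keeps its full 0.6 even though the LM rarely follows it with `a`.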

Masking must classify all 100,000+ tokens in the vocab at each step.

While regular and context-free grammars support low-overhead solutions using tools like Outlines (dottxtai.bsky.social), open-ended constraint enforcement has been harder.

At each step, mask the next-token distribution to prevent violations.

Pros: All samples are constraint-satisfying.

Cons: A) Masking a large vocabulary is slow. B) LCD (locally constrained decoding) distorts the sampled distribution.

Example:

Draw 𝑁 strings from the LM and use the constraint to rank/filter.

Pros: Samples converge to 𝑥 ~ 𝑃 as 𝑁 grows.

Cons: The 𝑁 required to get a target sample scales as exp(KL[𝑃||𝑄]). For difficult constraints, this becomes infeasible.
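
A small sketch of sample-and-filter on a toy LM, to make the cost concrete. The distribution `Q`, the `constraint` function, and `sample_until_valid` are all hypothetical; exact enumeration is only possible because the toy has four strings.

```python
import math
import random

# Hypothetical toy LM Q over complete two-token strings (numbers invented).
Q = {"aa": 0.06, "ab": 0.54, "ba": 0.36, "bb": 0.04}

def constraint(x: str) -> bool:
    """Hard constraint C(x): the string must end with 'a'."""
    return x.endswith("a")

def sample_until_valid(rng: random.Random):
    """Draw whole strings from Q until one passes C; return it and the draw count."""
    strings, probs = zip(*Q.items())
    draws = 0
    while True:
        draws += 1
        x = rng.choices(strings, weights=probs)[0]
        if constraint(x):
            return x, draws

# Exact quantities by enumeration.
Z = sum(q for x, q in Q.items() if constraint(x))        # acceptance rate
P = {x: q / Z for x, q in Q.items() if constraint(x)}    # target posterior
kl = sum(p * math.log(p / Q[x]) for x, p in P.items())   # KL[P || Q]

rng = random.Random(0)
trials = [sample_until_valid(rng) for _ in range(20_000)]
mean_draws = sum(d for _, d in trials) / len(trials)

print(f"acceptance rate Z  = {Z:.3f}")
print(f"exp(KL[P||Q])      = {math.exp(kl):.3f}")
print(f"mean draws/sample  = {mean_draws:.3f}")
```

In this toy the average number of draws per accepted sample matches exp(KL[𝑃||𝑄]); a constraint the LM satisfies one time in a million would need on the order of a million full generations per sample.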

Example:

Our goal is to sample a string 𝑥 from the conditional distribution 𝑃 = 𝑄(·|𝐶(𝑥)=1) (the target posterior).

How do people do this now, and why do current approaches fall short?
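
Written out, assuming 𝐶 is a hard 0/1 constraint on complete strings and 𝑄 is the base LM's distribution (the normalizer 𝑍 is a symbol introduced here for illustration):

```latex
P(x) \;=\; Q\big(x \mid C(x) = 1\big)
     \;=\; \frac{Q(x)\,\mathbf{1}[C(x) = 1]}{Z},
\qquad
Z \;=\; \sum_{x'} Q(x')\,\mathbf{1}[C(x') = 1].
```

𝑍 is the probability that an unconstrained sample happens to satisfy the constraint. The sum runs over all strings, so it cannot be computed directly, which is why the approaches in this thread are all approximate samplers.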
