80000hours.org/speak-with-us/

And follow the links in the full post for more:

benjamintodd.substack.com/p/work-on-a...

Though, I don't think it's for everyone:

If AGI emerges in the next 5 years, you’ll be part of one of the most important transitions in human history. If not, you’ll have time to return to your previous path.

80000hours.org/agi/guide/w...

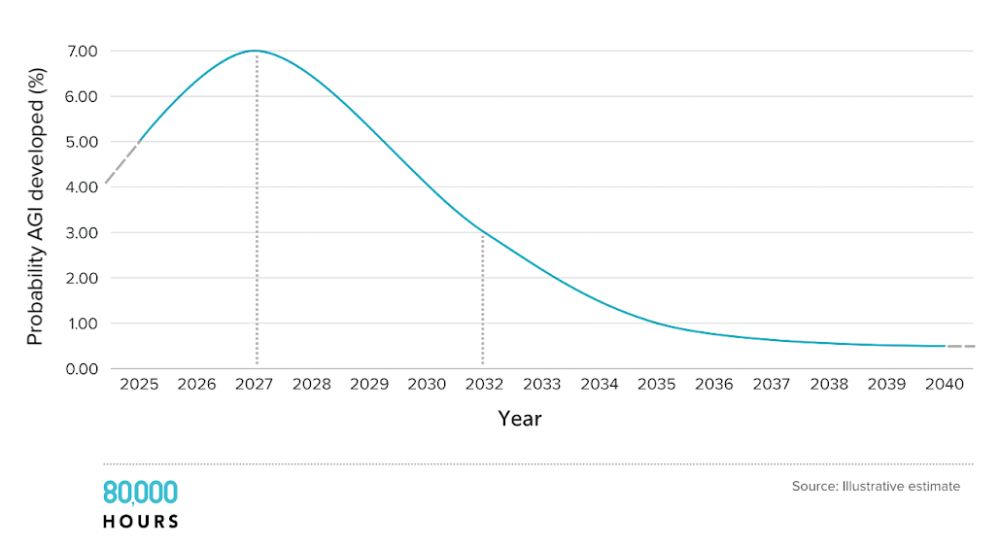

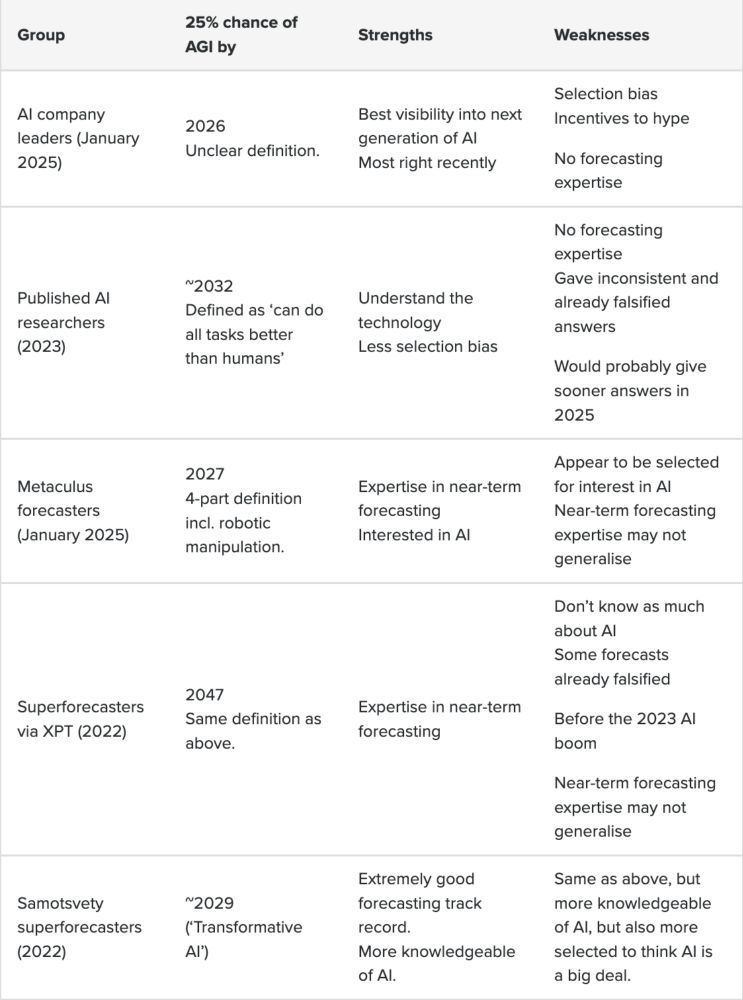

There's a lot of uncertainty, but high uncertainty means we can neither rule it out nor rule it in.

And every group now expects it sooner than it did before.

Full post:

80000hours.org/2025/03/when...

In 2023, they gave shorter estimates: 25% by 2029.

This was also down vs. their 2022 forecast.

But unfortunately this still used the terrible Metaculus definition.

They gave much longer answers: 25% chance by 2047.

But 2022 was before the great timeline shortening.

And their predictions about compute have already been falsified, and they don't seem to know that much about AI.

More:

asteriskmag.com/issues/03/th...

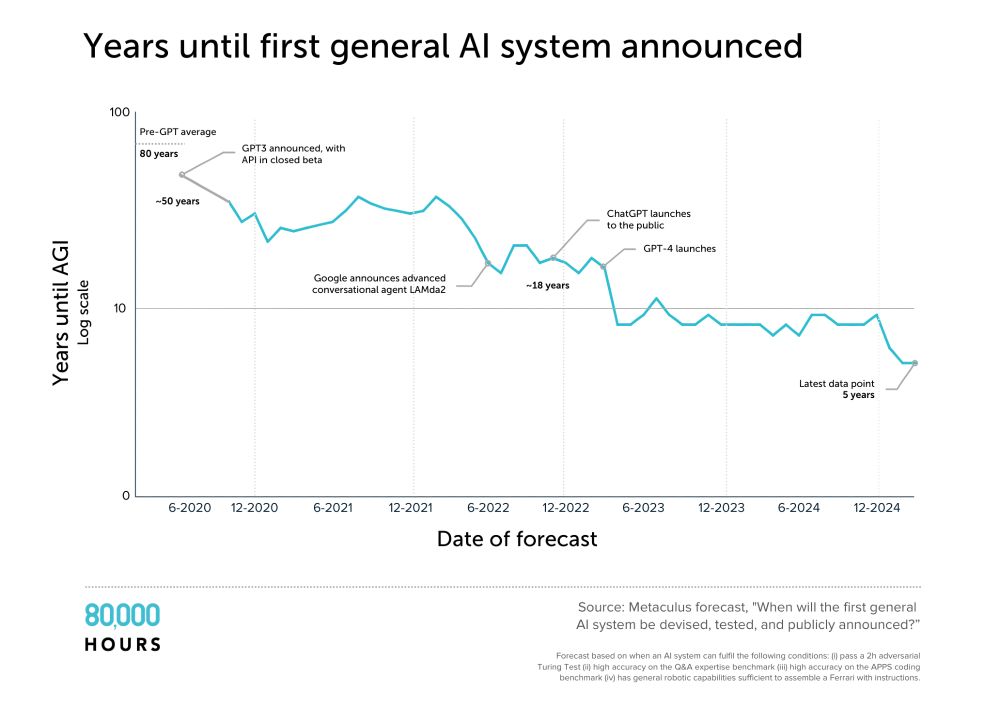

The Metaculus AGI Q has 1000+ forecasts.

The median has fallen from 50 years to 5.

Unfortunately, the definition is both too stringent for AGI, and not stringent enough. So I'm skeptical of the specific numbers.

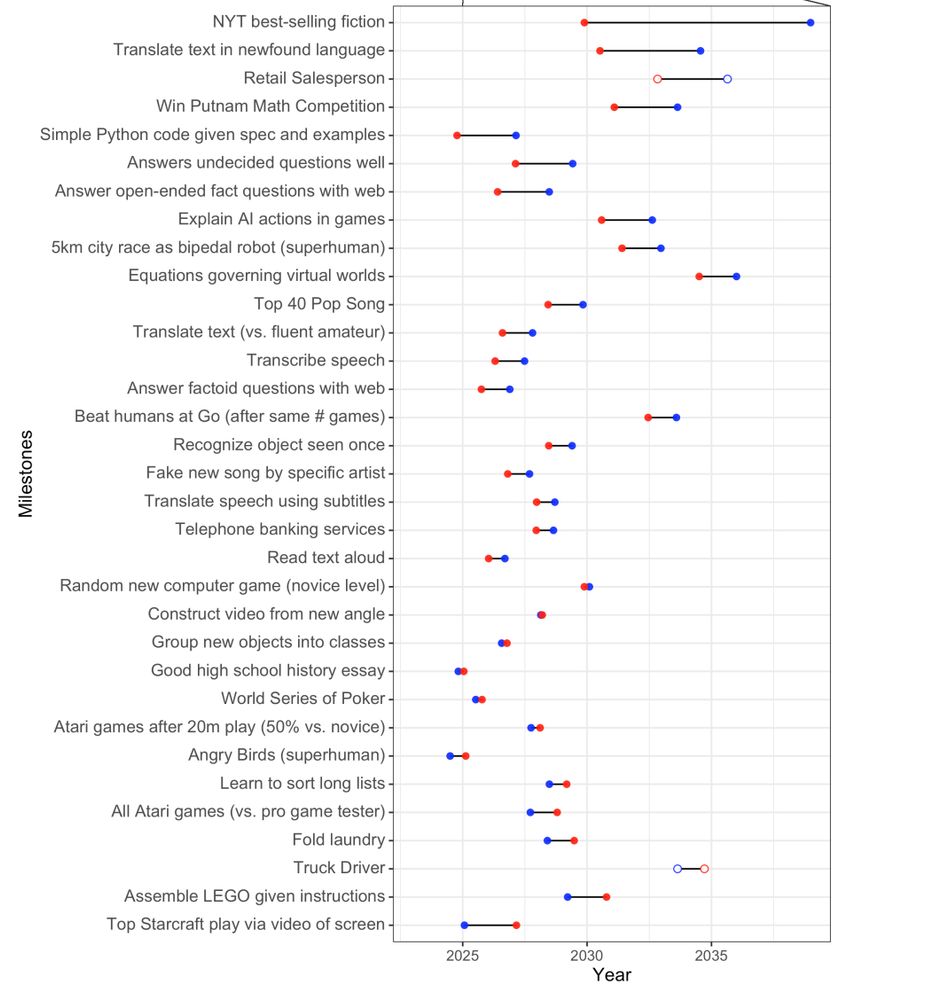

And even in 2023 (red), they predicted 2025!

They gave much longer answers for "full automation of labour" for unclear reasons.

Also AI expertise ≠ forecasting expertise.

Median: 25% chance of AI better than humans at "all tasks" by 2032.

But this is from 2023, and their answers have historically been too pessimistic.

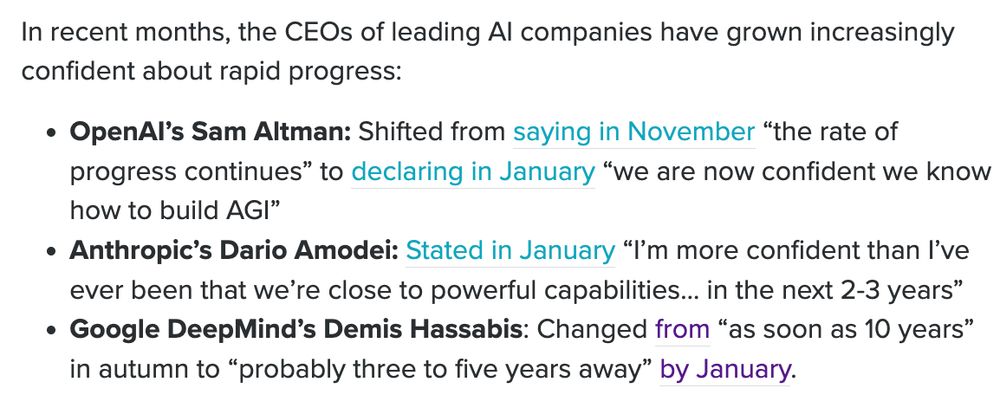

They tend to be the most bullish, predicting AGI in 2-5 years.

It's obvious why they might be biased.

But I don't think they should be totally ignored: they have the most visibility into next-gen capabilities.

(And they have been more right about recent progress.)

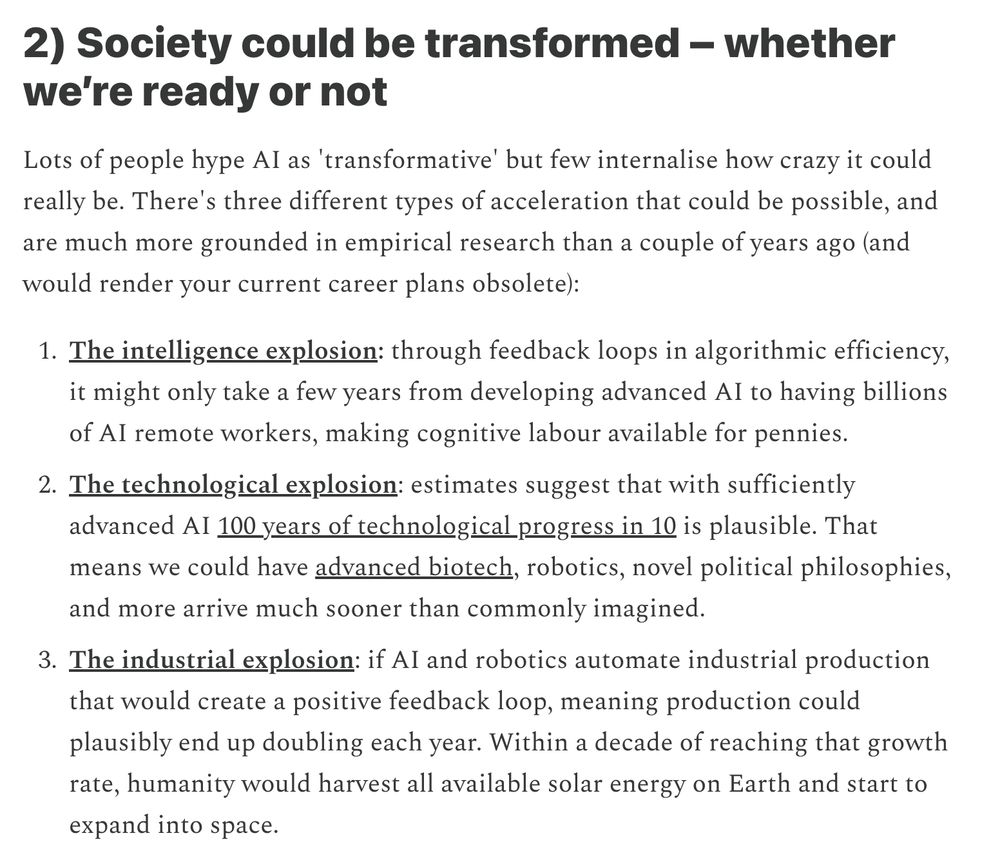

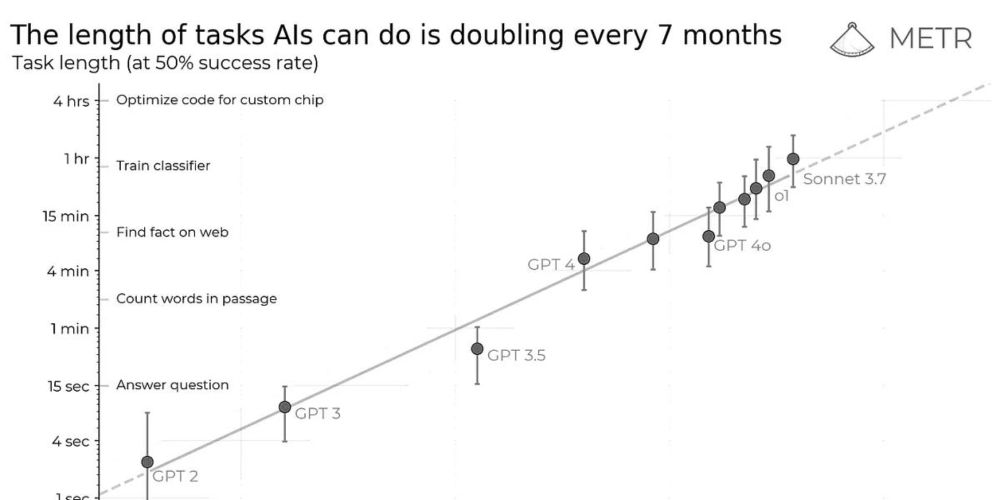

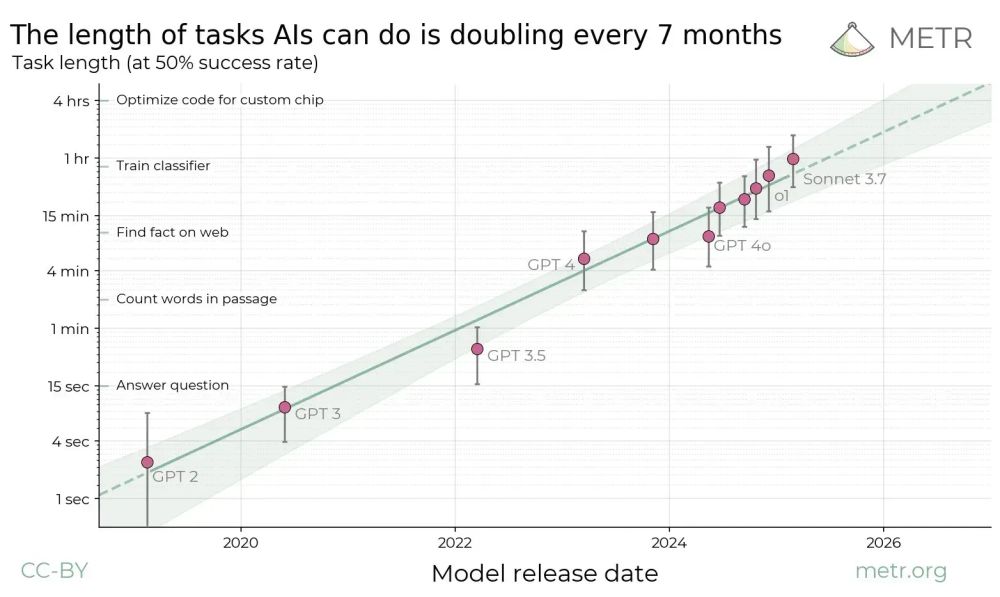

In 2 years, AI can do many 1-day computer-use tasks

In 4 years, many 1-week tasks

On Substack, I argue we should expect the trend to continue, and I discuss some limitations:

benjamintodd.substack.com/p/the-most-i...

I'm not saying it's certain, just that it could happen with only an extension of current trends.

Full analysis here:

80000hours.org/agi/guide/w...

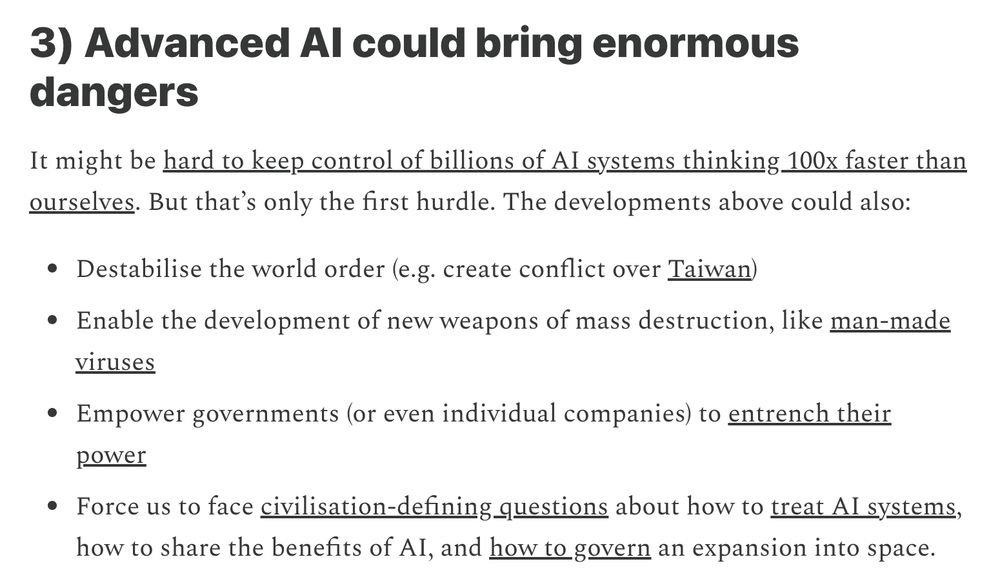

Current AI methods might plateau on ill-defined, contextual, long-horizon tasks, which happen to make up most knowledge work.

Without continuous breakthroughs, profit margins fall and investment dries up.

You can boil it down to whether this trend will continue:

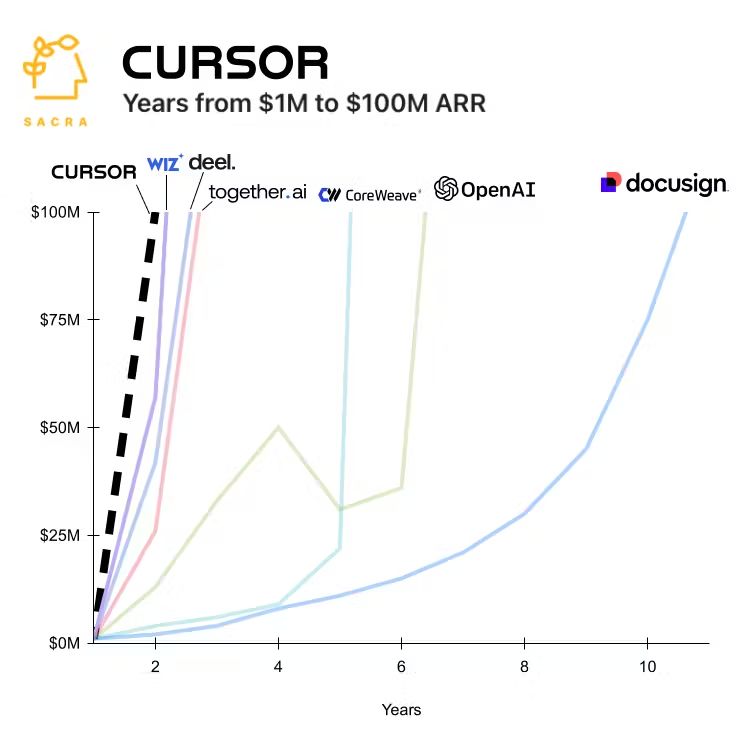

• Software engineering & startups

• Scientific research

• AI development itself

These alone could drive massive economic impact and accelerate AI progress.
