Though, I don't think it's for everyone:

If AGI emerges in the next 5 years, you’ll be part of one of the most important transitions in human history. If not, you’ll have time to return to your previous path.

In 2023, they gave shorter estimates: 25% by 2029.

This was also down vs. their 2022 forecast.

But unfortunately this still used the terrible Metaculus definition.

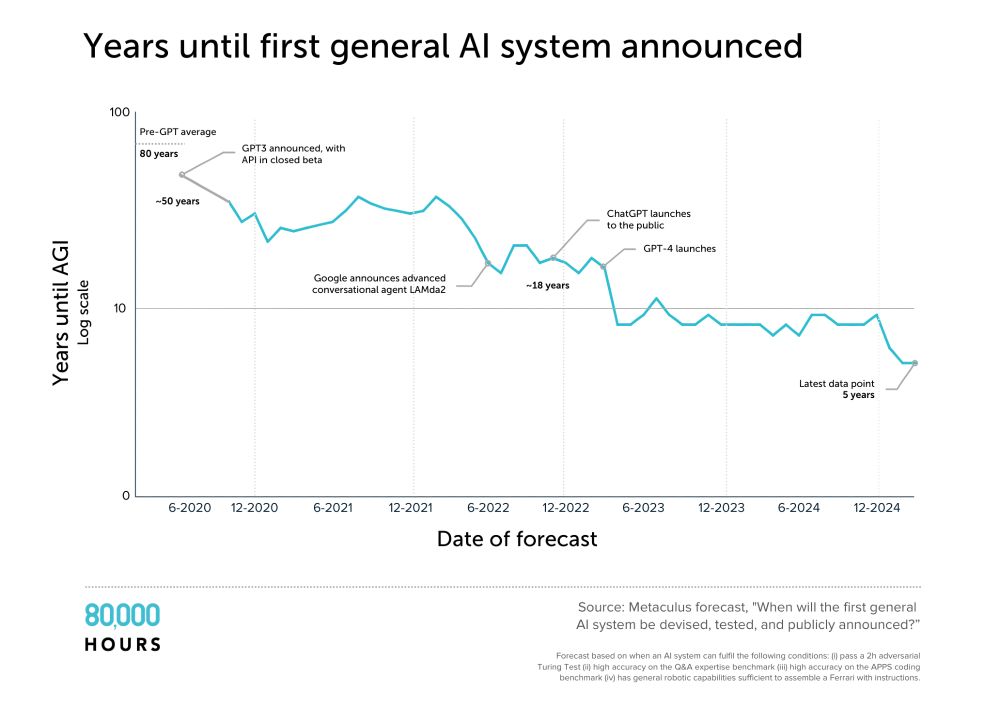

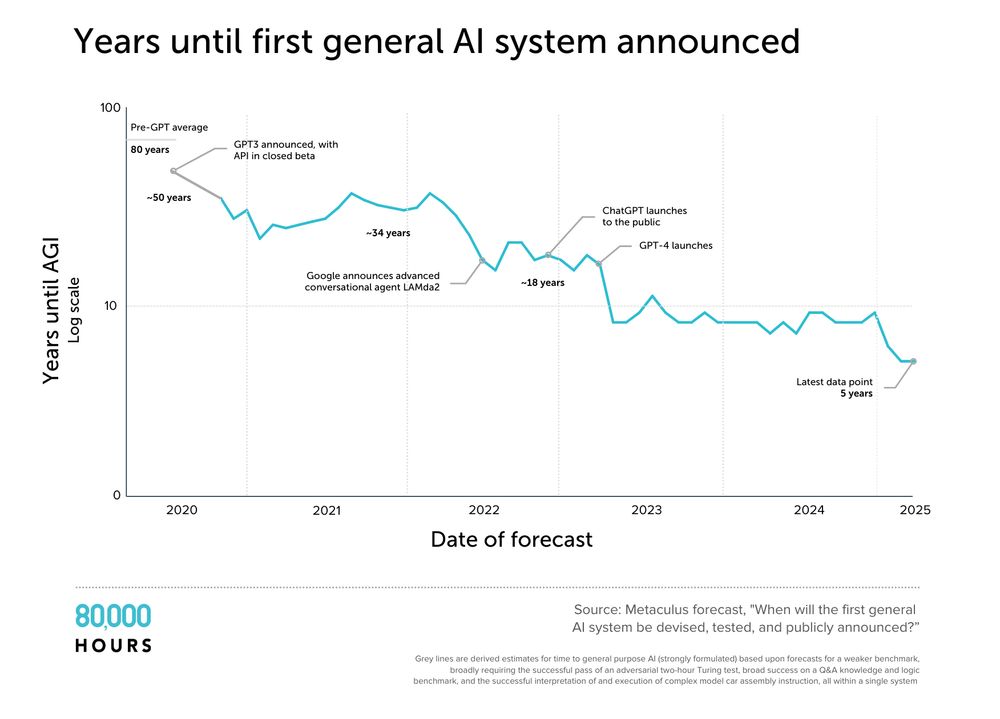

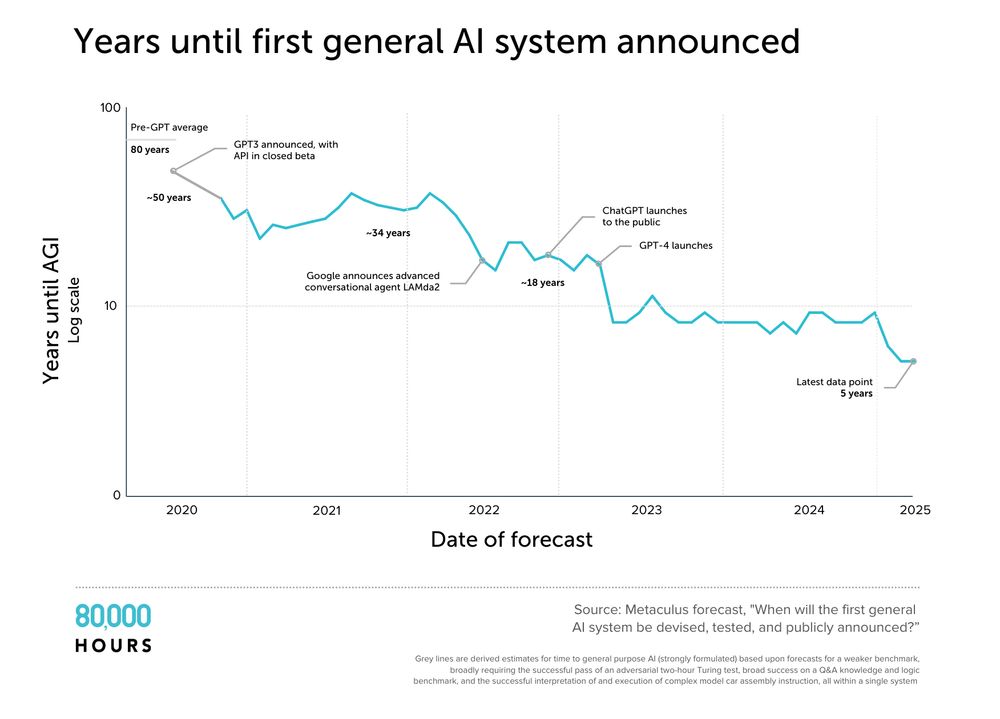

The Metaculus AGI Q has 1000+ forecasts.

The median has fallen from 50 years to 5.

Unfortunately, the definition is both too stringent for AGI, and not stringent enough. So I'm skeptical of the specific numbers.

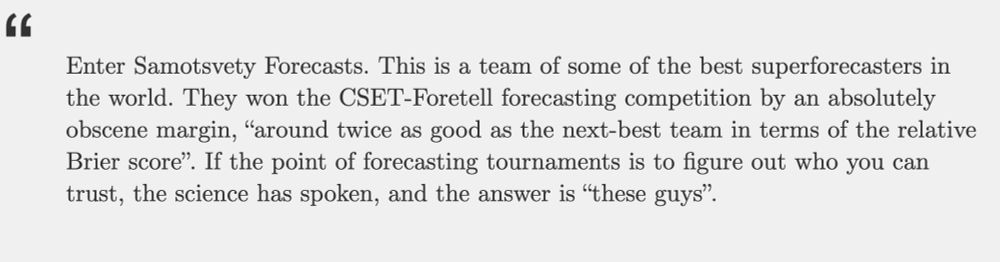

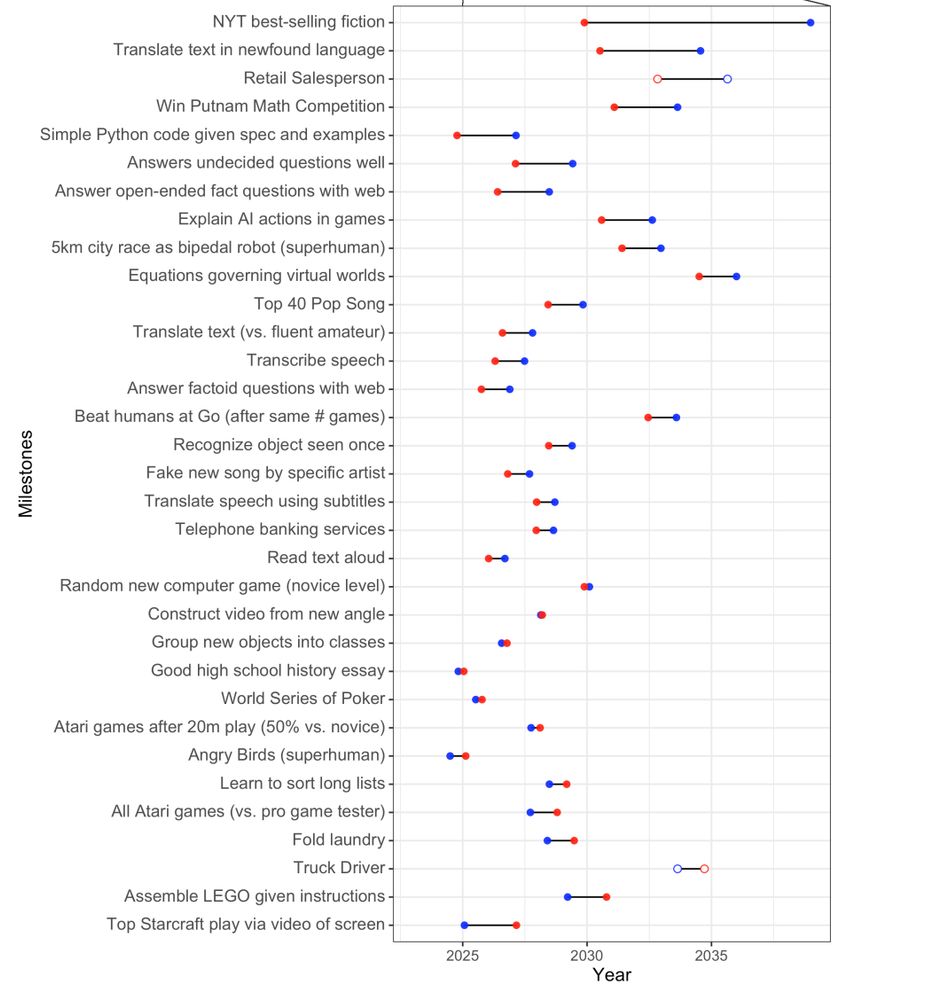

And even in 2023 (red), they predicted 2025!

They gave much longer answers for "full automation of labour" for unclear reasons.

Also AI expertise ≠ forecasting expertise.

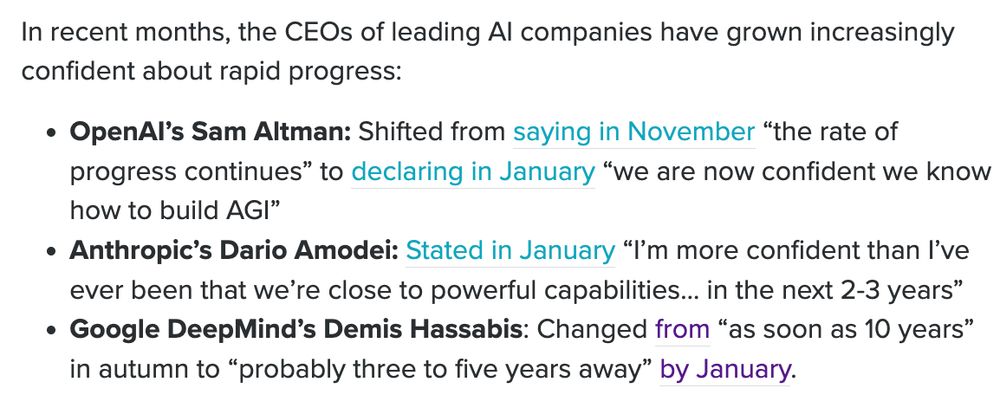

They tend to be the most bullish, predicting AGI in 2-5 years.

It's obvious why they might be biased.

But I don't think they should be totally ignored: they have the most visibility into next-gen capabilities.

(And they have been more right about recent progress.)

Maybe not much, except that it's coming sooner than before.

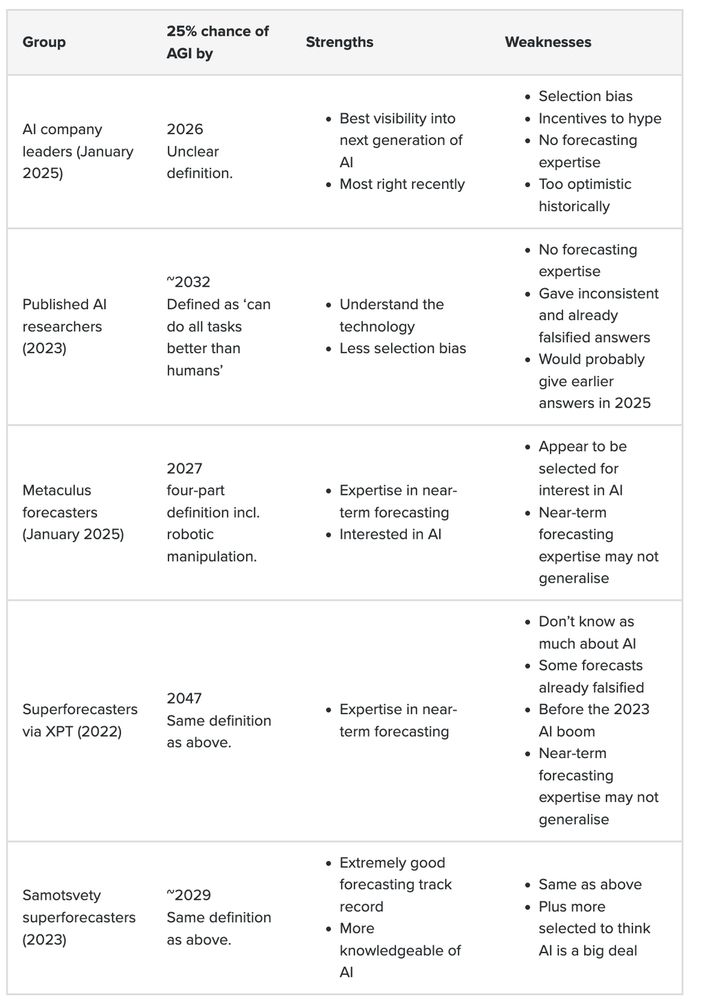

I did a review of the 5 most relevant expert groups and what we can learn from them.

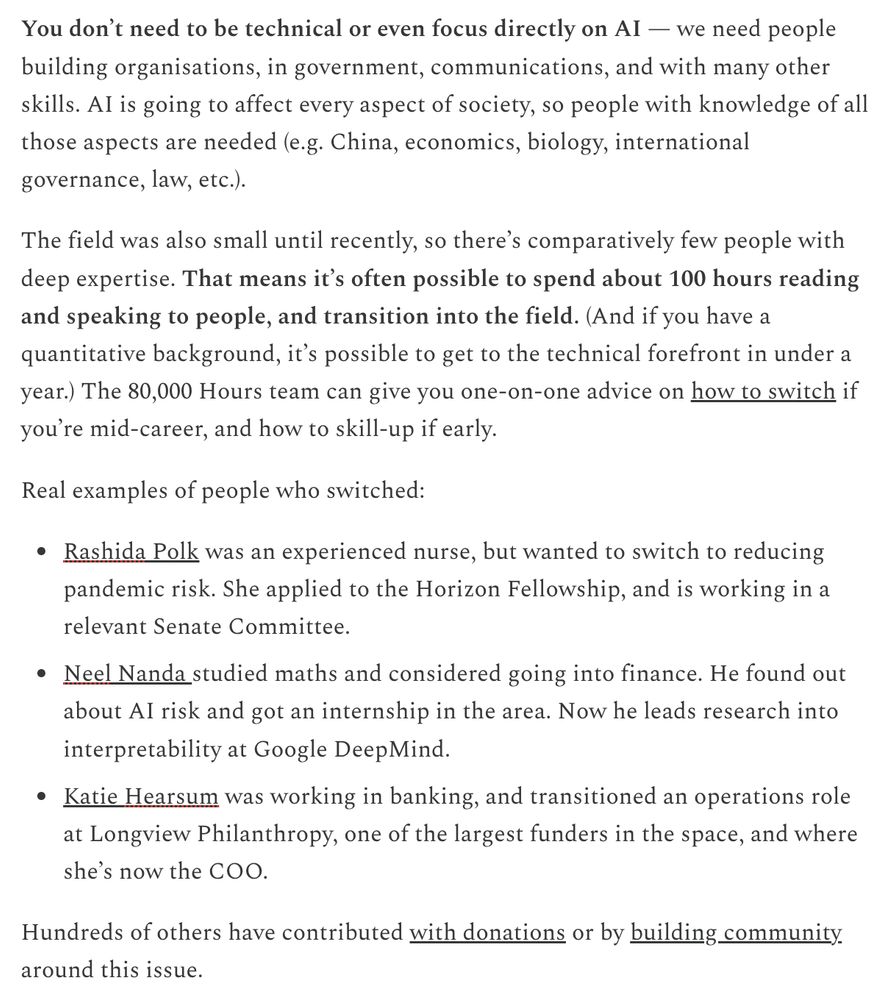

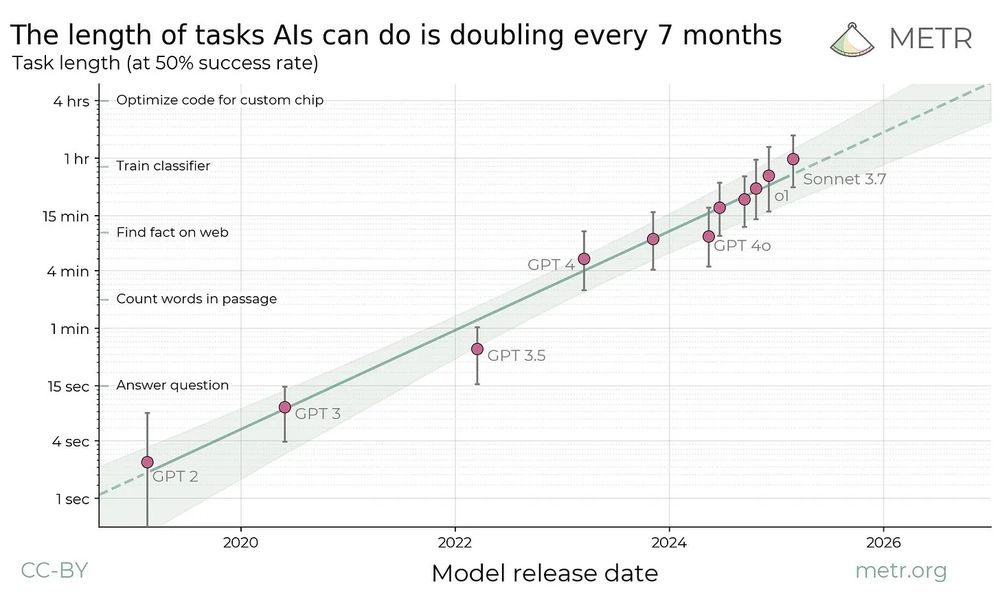

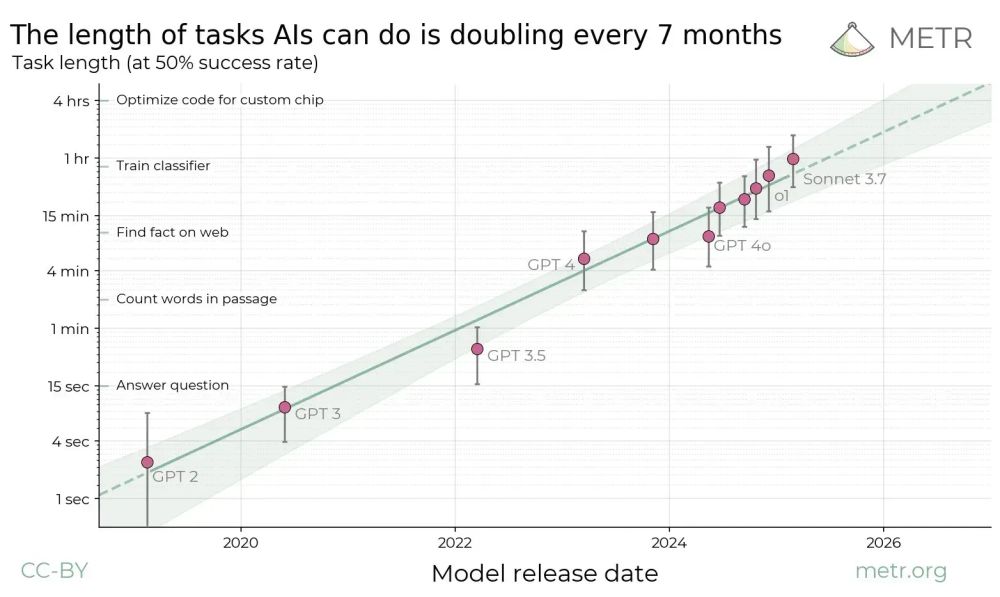

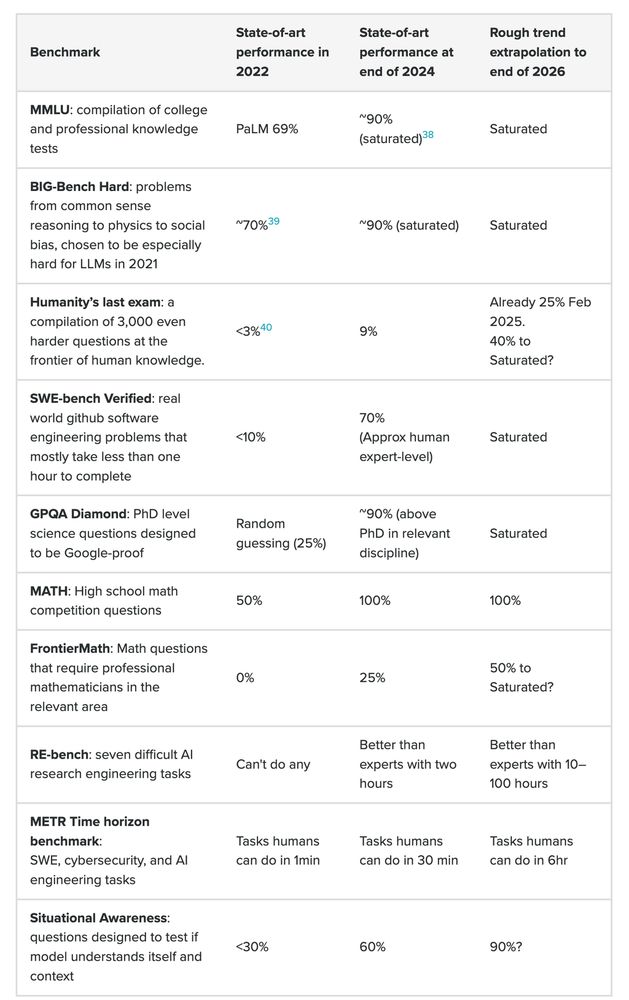

Models today can do tasks up to 1h.

But real jobs mainly consist of tasks taking days or weeks.

So AI can answer questions but can't do real jobs.

But that's about to change.
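A rough way to size the gap between 1-hour tasks and week-long tasks is to count doublings. This is a minimal sketch that assumes the task horizon keeps doubling at a steady rate. The doubling period is a hypothetical input you choose, not a figure from this thread, and treating a work-week as 40 hours is also an assumption.

```python
import math

def doublings_needed(current_hours: float, target_hours: float) -> float:
    """How many times the task horizon must double to go from current to target."""
    return math.log2(target_hours / current_hours)

def years_to_reach(current_hours: float, target_hours: float, doubling_months: float) -> float:
    """Years until the target horizon, IF the horizon doubles every `doubling_months`.

    doubling_months is a hypothetical assumption supplied by the reader.
    """
    return doublings_needed(current_hours, target_hours) * doubling_months / 12

# Today: ~1h tasks. Target: a one-week task, taken here as 40 working hours (assumption).
for months in (6, 12):  # hypothetical doubling periods, for illustration only
    print(f"doubling every {months} months: ~{years_to_reach(1, 40, months):.1f} years")
```

Going from 1 hour to a 40-hour week is about 5.3 doublings, so the answer scales directly with whatever doubling period you assume. That is why everything hinges on whether the trend continues.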

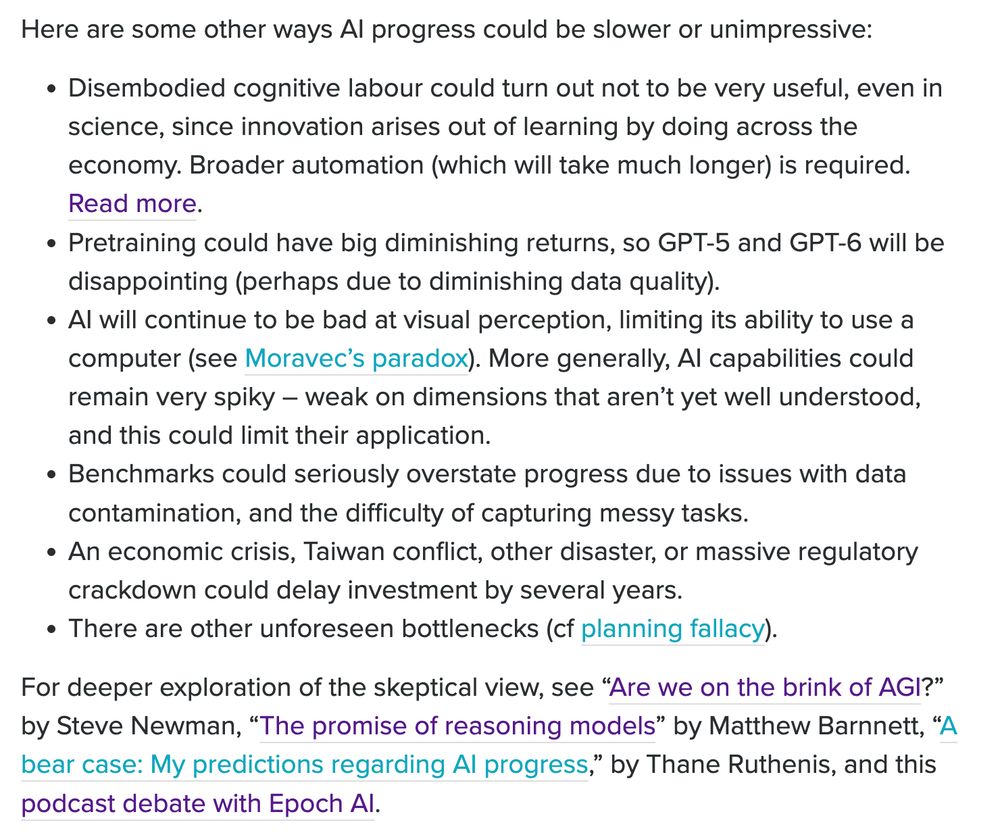

Current AI methods might plateau on ill-defined, contextual, long-horizon tasks, which happen to make up most knowledge work.

Without continuous breakthroughs, profit margins fall and investment dries up.

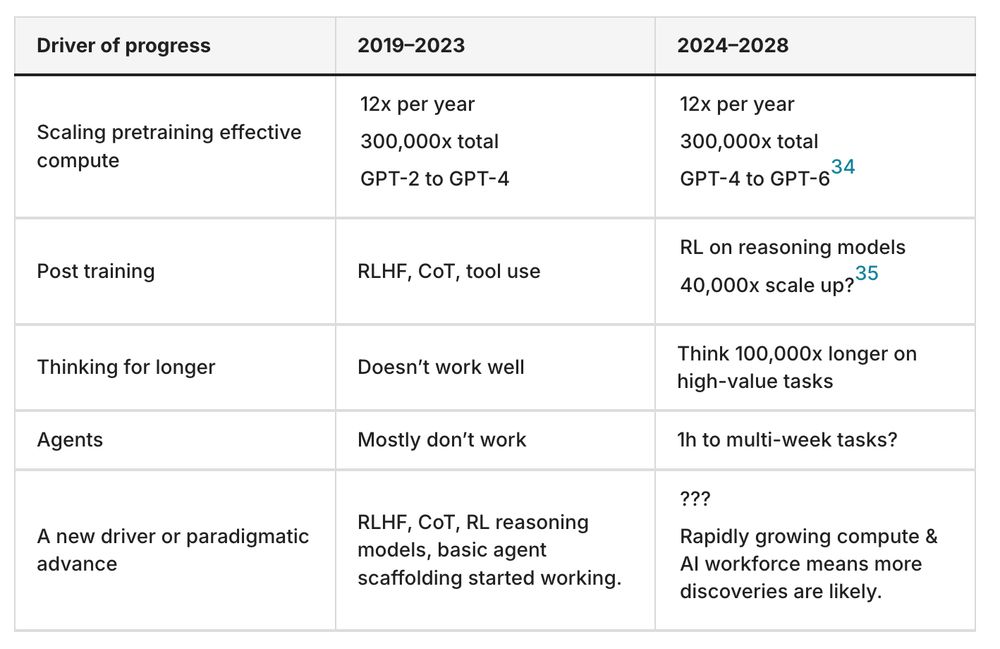

You can boil it down to whether this trend will continue:

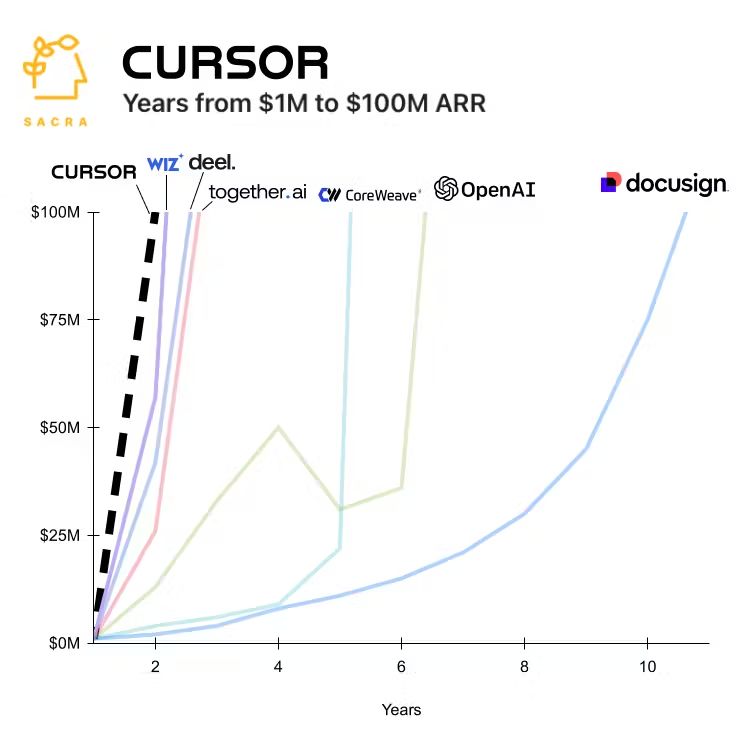

• Software engineering & startups

• Scientific research

• AI development itself

These alone could drive massive economic impact and accelerate AI progress.

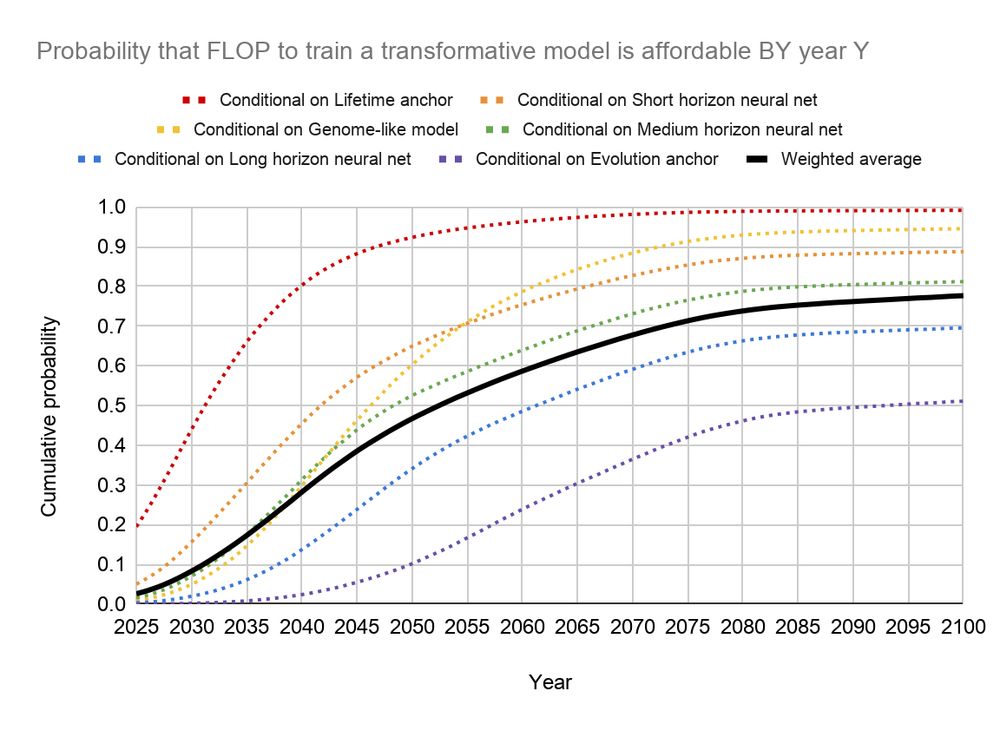

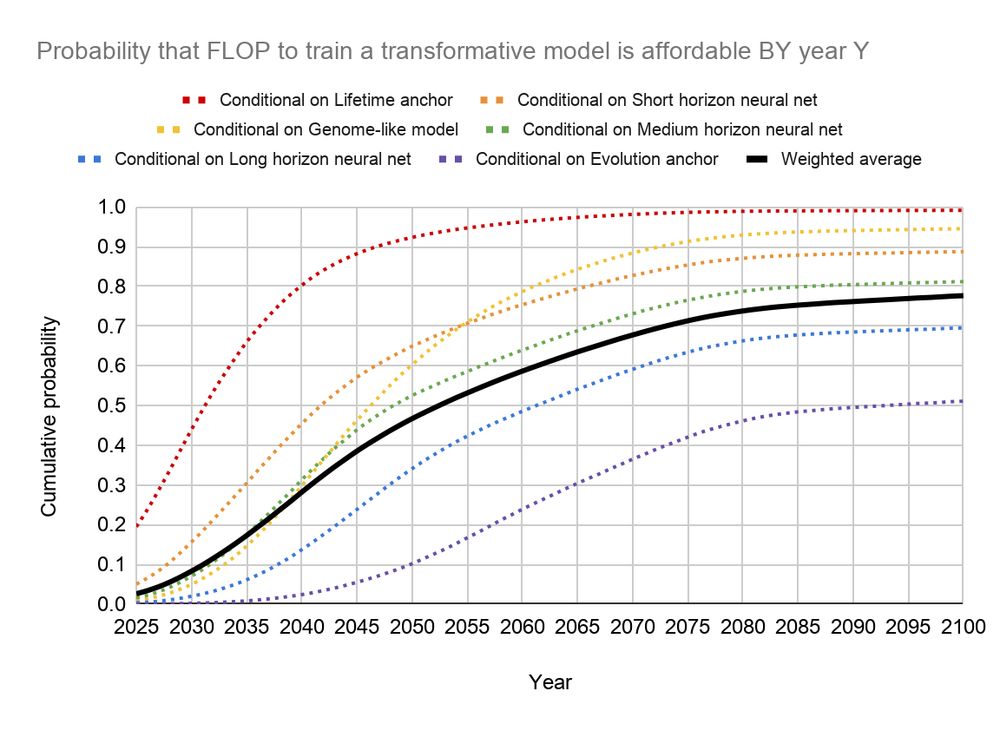

If algorithms approach even a fraction of human learning efficiency, we'd expect human-level capabilities in at least some domains.

www.cold-takes.com/forecasting...

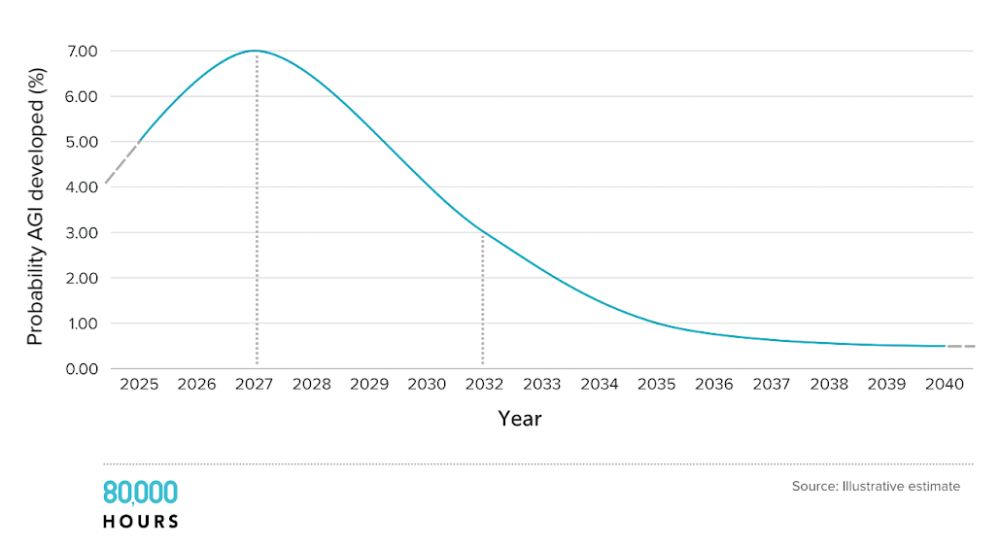

AI and forecasting experts now place significant probability on AGI-level capabilities pre-2030.

I remember when 2045 was considered optimistic.

80000hours.org/2025/03/whe...

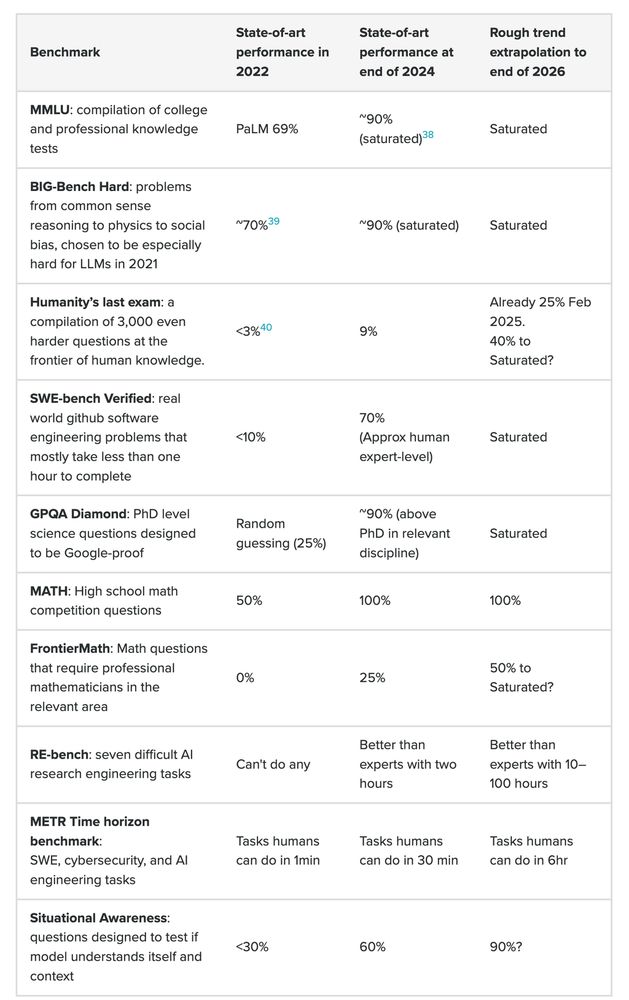

All the major benchmarks ↓

And with investment in compute and algorithms continuing to increase, new drivers are likely to be discovered.

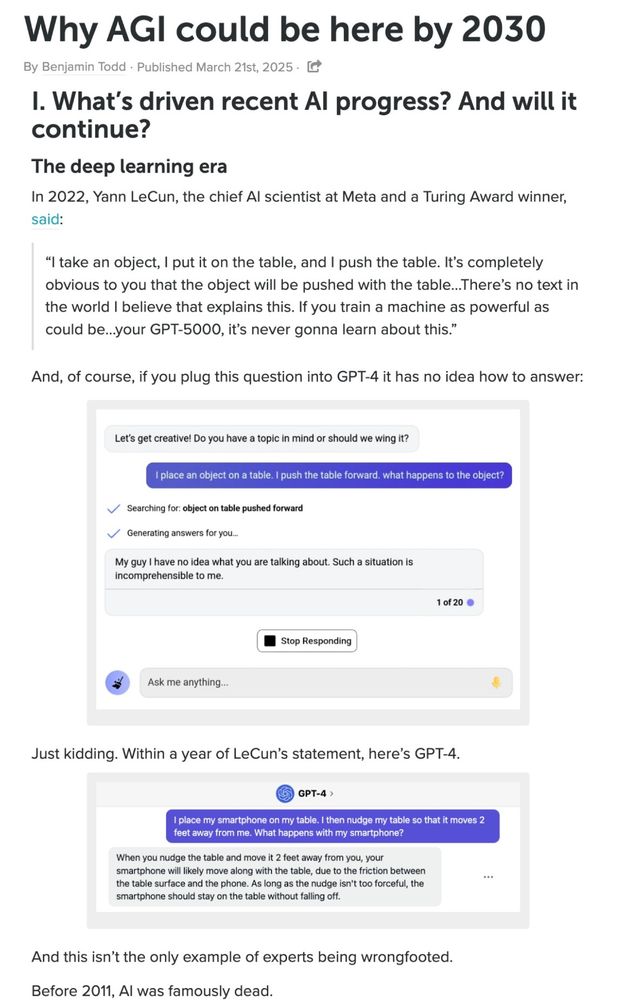

Is this just hype, or could they be right?

I spent weeks writing this new in-depth primer on the best arguments for and against.

Starting with the case for...🧵
