I feel spectral metrics can go a long way in unlocking LLM understanding+design. 🚀

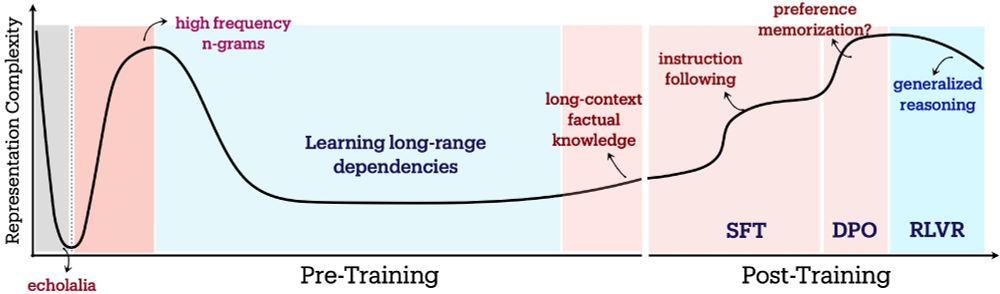

- Pretraining: Compress → Expand (Memorize) → Compress (Generalize).

- Post-training: SFT/DPO → Expand; RLVR → Consolidate.

Representation geometry offers insights into when models memorize vs. generalize! 🤓

🧵8/9

We show, both through theory and with simulations in a toy model, that these non-monotonic spectral changes occur due to gradient descent dynamics with cross-entropy loss under 2 conditions:

1. skewed token frequencies

2. representation bottlenecks
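To make the two conditions concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the toy model from the paper: free per-context features `H` and an unembedding `W` are trained by plain gradient descent on cross-entropy, with Zipf-like target frequencies (condition 1) and a hidden size smaller than the vocabulary (condition 2). The names `train_toy` and `effective_rank` and all hyperparameters are hypothetical.

```python
import numpy as np

def effective_rank(features, eps=1e-7):
    """exp(entropy) of the normalized singular values (a RankMe-style score)."""
    s = np.linalg.svd(features, compute_uv=False)
    p = s / (s.sum() + eps) + eps
    return float(np.exp(-(p * np.log(p)).sum()))

def train_toy(vocab=50, dim=8, n_ctx=200, steps=300, lr=0.5, zipf_a=1.2, seed=0):
    """Return a list of (loss, effective_rank) pairs, one per gradient step."""
    rng = np.random.default_rng(seed)
    # Condition 1 (assumed form): skewed, Zipf-like next-token frequencies.
    freqs = 1.0 / np.arange(1, vocab + 1) ** zipf_a
    freqs /= freqs.sum()
    targets = rng.choice(vocab, size=n_ctx, p=freqs)
    onehot = np.eye(vocab)[targets]
    # Condition 2 (assumed form): bottleneck, hidden size dim < vocab.
    H = 0.1 * rng.standard_normal((n_ctx, dim))   # one feature vector per context
    W = 0.1 * rng.standard_normal((dim, vocab))   # unembedding matrix
    history = []
    for _ in range(steps):
        logits = H @ W
        logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        loss = -np.log(probs[np.arange(n_ctx), targets] + 1e-12).mean()
        history.append((float(loss), effective_rank(H)))
        # Gradient of the mean cross-entropy with respect to the logits.
        grad_logits = (probs - onehot) / n_ctx
        grad_H = grad_logits @ W.T
        grad_W = H.T @ grad_logits
        H -= lr * grad_H
        W -= lr * grad_W
    return history
```

Plotting the second column of `train_toy()` against the step index gives a trajectory of effective rank to inspect. Whether it shows the non-monotonic pattern depends on the settings, and this sketch makes no claim to reproduce the paper's results.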

🧵6/9

- SFT & DPO exhibit entropy-seeking expansion, favoring instruction memorization but reducing OOD robustness.📈

- RLVR exhibits compression-seeking consolidation, learning reward-aligned behaviors at the cost of reduced exploration.📉

🧵5/9

- Entropy-seeking: Correlates with short-sequence memorization (♾️-gram alignment).

- Compression-seeking: Correlates with dramatic gains in long-context factual reasoning, e.g. TriviaQA.

Curious about ♾️-grams?

See: bsky.app/profile/liuj...

🧵4/9

Warmup: Rapid compression, collapsing representation to dominant directions.

Entropy-seeking: Manifold expansion, adding info in non-dominant directions.📈

Compression-seeking: Anisotropic consolidation, selectively packing more info in dominant directions.📉

🧵3/9

- Spectral Decay Rate, αReQ: Power-law decay rate of the eigenspectrum; lower α means more variance in non-dominant directions.

- RankMe: Effective Rank; #dims truly active.

⬇️αReQ ⇒ ⬆️RankMe ⇒ More complex!
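Both metrics can be sketched in a few lines of NumPy. This is a minimal illustration assuming the commonly used definitions: RankMe as the exponential of the entropy of the normalized singular values, and αReQ as the slope of a log-log fit to the covariance eigenspectrum. It is not the Reptrix API. The function names, the `fit_range` default, and the synthetic `power_law_features` helper are all hypothetical.

```python
import numpy as np

def rankme(features, eps=1e-7):
    """Effective rank: exp of the Shannon entropy of normalized singular values."""
    s = np.linalg.svd(features, compute_uv=False)
    p = s / (s.sum() + eps) + eps
    return float(np.exp(-(p * np.log(p)).sum()))

def covariance_spectrum(features):
    """Eigenvalues of the feature covariance matrix, in descending order."""
    centered = features - features.mean(axis=0, keepdims=True)
    s = np.linalg.svd(centered, compute_uv=False)
    return s ** 2 / (features.shape[0] - 1)

def alpha_req(features, fit_range=(2, 50)):
    """Decay exponent alpha from a log-log linear fit, lambda_i ~ i^(-alpha)."""
    eig = covariance_spectrum(features)
    lo, hi = fit_range
    hi = min(hi, len(eig))
    idx = np.arange(lo, hi + 1)                       # 1-based eigenvalue ranks
    slope, _ = np.polyfit(np.log(idx), np.log(eig[lo - 1:hi]), 1)
    return float(-slope)

def power_law_features(alpha, n_samples, dim, rng):
    """Synthetic features whose covariance eigenvalues follow i^(-alpha)."""
    eig = np.arange(1, dim + 1, dtype=float) ** (-alpha)
    return rng.standard_normal((n_samples, dim)) * np.sqrt(eig)
```

On synthetic features from `power_law_features`, a smaller `alpha` gives a flatter spectrum, so `alpha_req` goes down and `rankme` goes up, matching the arrow above.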

🧵1/9

How does the complexity of this mapping change across LLM training? How does it relate to the model’s capabilities? 🤔

Announcing our #NeurIPS2025 📄 that dives into this.

🧵below

#AIResearch #MachineLearning #LLM

🦖Introducing Reptrix, a #Python library to evaluate representation quality metrics for neural nets: github.com/BARL-SSL/rep...

🧵👇[1/6]

#DeepLearning

@tyrellturing.bsky.social for supporting me through this amazing journey! 🙏

Big thanks to all members of the LiNC lab, and colleagues at McGill University and @mila-quebec.bsky.social. ❤️😁

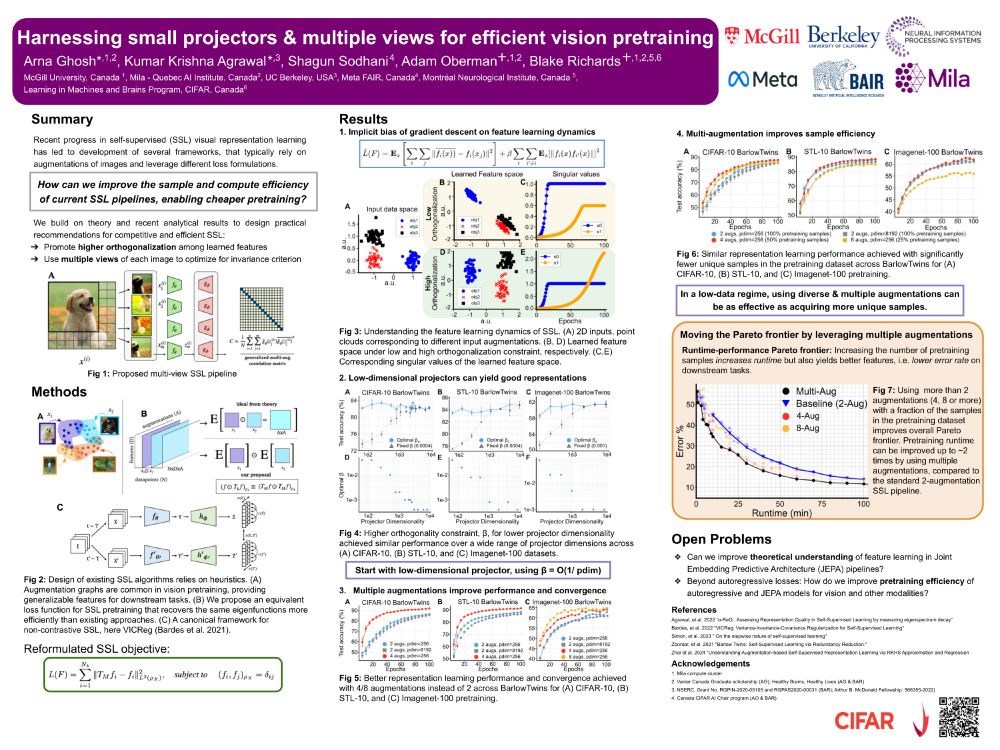

4 views 50% data 🤝 2 views 100% data. 📊

Training time: Weeks

Dataset size: Millions of images

Compute costs: 💸💸💸

Our #NeurIPS2024 poster makes SSL pipelines 2x faster and achieves similar accuracy at 50% pretraining cost! 💪🏼✨

🧵 1/8
