https://ariesta.id

https://linkedin.com/in/alvinariesta/

They are often far worse at getting AI to do stuff than those with a liberal arts or social science bent. LLMs are built from the vast corpus of human expression, and knowing the history & obscure corners of human works lets you do far more with AI & understand its limits.

marimo is not a "notebook". It's a "published book", presentable and reliable enough to share. Jupyter is still the best notebook, especially on Google Colab.

Why? For me, marimo has just one problem: you can't redefine variables without some code gymnastics (docs.marimo.io/guides/under...)

I got the 2T one running locally on my Mac after porting it to MLX - notes on that here: simonwillison.net/2025/Jun/7/c...

One thing that struck me: they tested Llama 3.1 405B and it averaged 3,353 joules per prompt. That is the equivalent of 2 minutes 50 seconds of human brain activity. www.technologyreview.com/2025/05/20/1...
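A quick sanity check of that comparison, assuming the human brain runs at roughly 20 W (a commonly cited estimate, not a number taken from the article). Time is just energy divided by power, so at 20 W the 3,353 J come out to about 168 seconds, or roughly 2 min 48 s, close to the quoted 2 min 50 s (which would imply about 19.7 W).

```python
# Energy per prompt reported for Llama 3.1 405B (from the post above).
ENERGY_PER_PROMPT_J = 3353

# Assumption: the human brain draws roughly 20 W (a commonly cited estimate).
BRAIN_POWER_W = 20.0


def brain_seconds(energy_j: float, power_w: float = BRAIN_POWER_W) -> float:
    """Seconds a brain drawing `power_w` watts needs to use `energy_j` joules (t = E / P)."""
    return energy_j / power_w


seconds = brain_seconds(ENERGY_PER_PROMPT_J)
minutes, secs = divmod(seconds, 60)
print(f"{seconds:.1f} s = {int(minutes)} min {secs:.0f} s")

# Power implied by the article's "2 minutes 50 seconds" (170 s).
implied_power_w = ENERGY_PER_PROMPT_J / 170
print(f"implied brain power: {implied_power_w:.1f} W")
```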

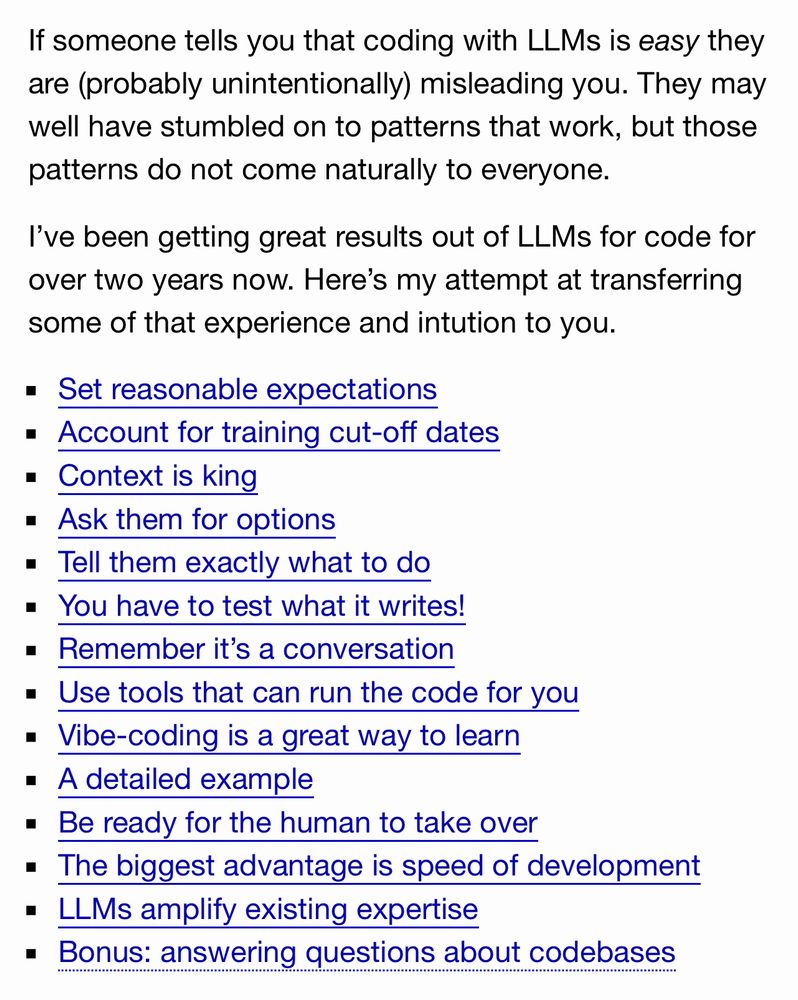

I often forget to set expectations too, after not using an LLM for a while

Imagine if they were truly open and people could connect the search index to their own source-priority list
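A minimal sketch of what that could look like, assuming a hypothetical open index that returns results with a domain and a relevance score. `SearchResult`, `rerank`, and the example domains are made up for illustration; this is not any real search engine's API. Domains on your own priority list float to the top in list order, and everything else keeps its relevance order below them.

```python
from dataclasses import dataclass


@dataclass
class SearchResult:
    url: str
    domain: str
    score: float  # relevance score from the (hypothetical) open search index


def rerank(results: list[SearchResult], priority: list[str]) -> list[SearchResult]:
    """Order results by the user's source-priority list, then by relevance.

    Domains earlier in `priority` come first; unlisted domains go last.
    Within the same tier, higher relevance scores come first.
    """
    rank = {domain: i for i, domain in enumerate(priority)}
    unlisted = len(priority)
    return sorted(results, key=lambda r: (rank.get(r.domain, unlisted), -r.score))


results = [
    SearchResult("https://example.com/a", "example.com", 0.9),
    SearchResult("https://arxiv.org/abs/x", "arxiv.org", 0.7),
    SearchResult("https://docs.python.org/3/", "docs.python.org", 0.8),
]
priority = ["arxiv.org", "docs.python.org"]  # hypothetical personal priority list

for r in rerank(results, priority):
    print(r.domain, r.score)
```

Sorting on a `(tier, -score)` tuple keeps the sketch to a single stable sort instead of bucketing results by hand.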

- large context, image, video: Gemini via AI Studio

- code: Claude (web)

- search: DeepSeek web, Perplexity

- writing: Llama (on a rented GPU, just because I have unused credits)

importai.substack.com/p/import-ai-...

> […] in the era where these AI systems are true 'everything machines', people will out-compete one another by being increasingly bold and agentic (pun intended!) in how they use these systems, rather than in developing specific technical skills to interface with the systems.
>
> We should all intuitively understand that none of this will be fair. Curiosity and the mindset of being curious and trying a lot of stuff is neither evenly distributed or generally nurtured. Therefore, I'm coming around to the idea that one of the greatest risks lying ahead of us will be the social disruptions that arrive when the new winners of the AI revolution are made - and the winners will be those people who have exercised a whole bunch of curiosity with the AI systems available to them.
>
> Jack Clark

![Jack Clark quote](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:kft6lu4trxowqmter2b6vg6z/bafkreiai5uemf27nso53xg7pdkf43ifz2cnid35phk3twpcsj7pbgo3ogy@jpeg)

Dismissing it outright is not productive

- people say o1 is better

- o1 is closed; R1 is open-weight

- R1 is MIT-licensed, so you can use it to train or fine-tune other models

- you can't see o1's reasoning, but you can see R1's

api-docs.deepseek.com/news/news250...

I hoped to get some time off from reading emails, but then I made the mistake of turning on Google Scholar alerts for the researchers I follow

Learning Docker is a big hurdle though. New post:

ariesta.id/blog/2025/01...

ariesta.id/blog/2025/01...

inspired by simonwillison.net/2024/Dec/22/...

Yes, looks are the last priority

github.com/ariesta-id/t...

You should experiment in your own areas of expertise to figure out what works for your use cases.