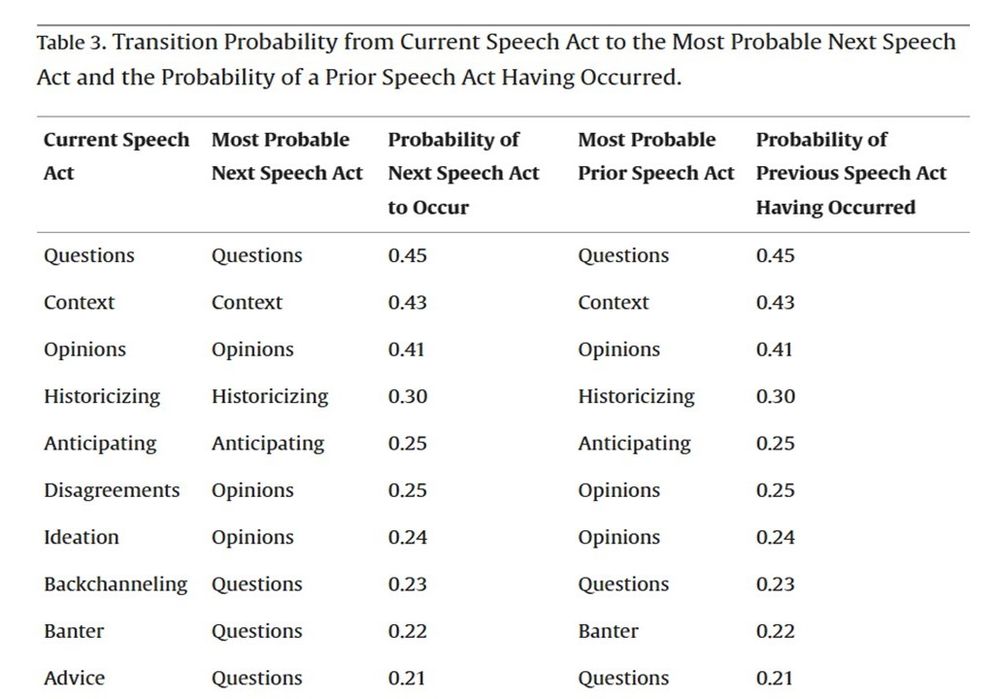

Monique Santoso coded 9,000 speech acts to develop the Virtual Reality Interaction Dynamics Scheme, comprising 10 speech acts (e.g., disagreements, context-dependent commentary). Prior speech acts and current nonverbal behavior predict group action.

vhil.stanford.edu/publications...

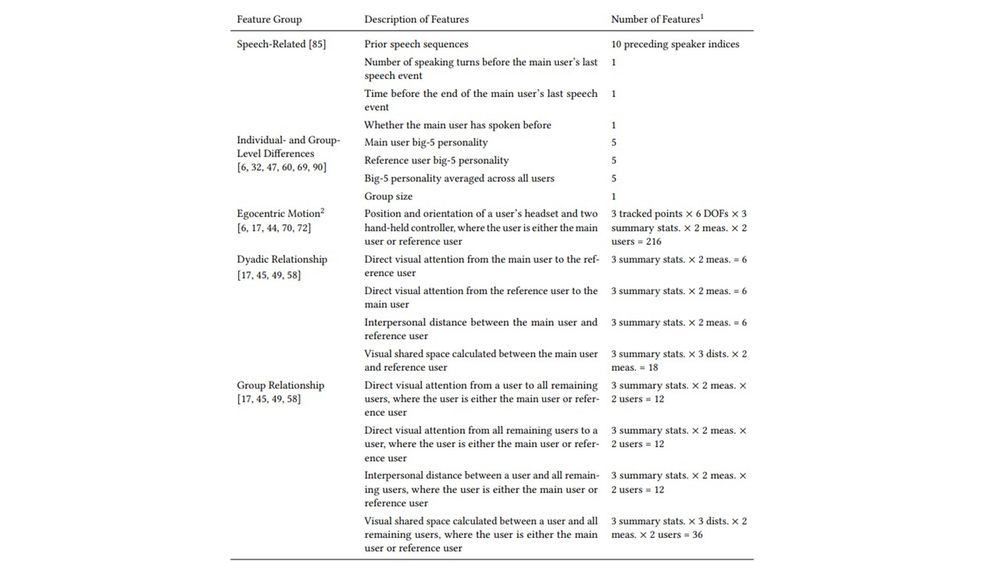

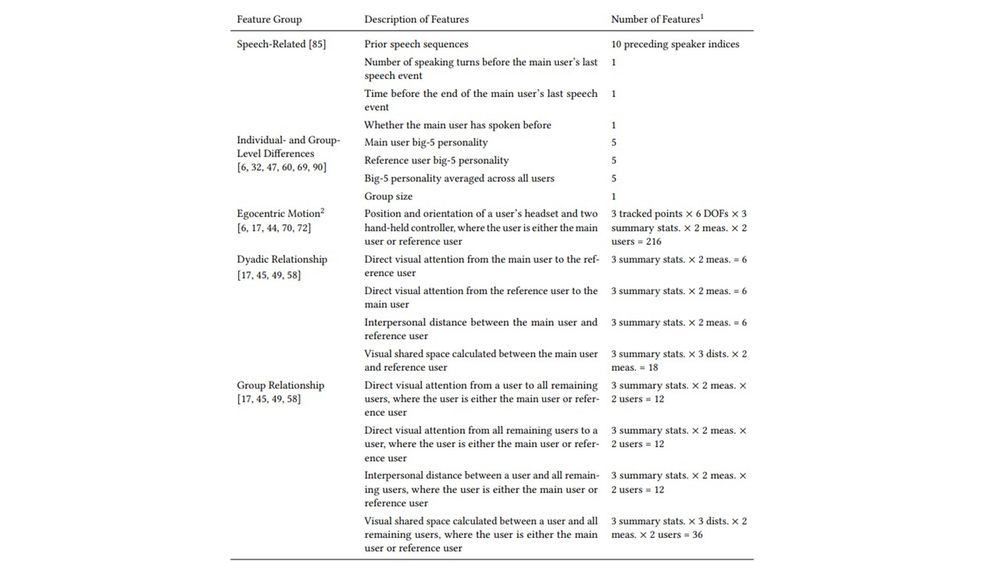

@PortiaWang.bsky.social analyzed a VR dataset of 77 sessions, 1,660 minutes of group meetings over 4 weeks. Verbal & nonverbal history captured at the millisecond level predicted turn-taking at nearly 30% over chance. To appear @acm-cscw.bsky.social.

vhil.stanford.edu/publications...
