Anima Anandkumar

@anima-anandkumar.bsky.social

AI Pioneer, AI+Science, Professor at Caltech, Former Senior Director of AI at NVIDIA, Former Principal Scientist at AWS AI.

Join the livestream and listen to talks at the @caltech.edu AI+Science conference: aiscienceconference.caltech.edu

AI+Science Conference

The California Institute of Technology and the University of Chicago are centers of gravity for the study, application, and use of AI and Machine Learning to enable scientific discovery across the physical and biological sciences, advancing core AI principles and training a new generation of interdisciplinary scientists. To both advance this scientific and technical pursuit and demonstrate the leadership of Caltech and UChicago in this space, we will host the Caltech and University of Chicago Conference on AI+Science, sponsored by the Margot and Tom Pritzker Foundation, at Caltech on November 10-11, 2025. This event will bring together an elite and diverse cohort of leading researchers in core AI and domain sciences to lead conversations and drive partnerships that will shape future inquiry, industry investment, and entrepreneurial opportunities.

aiscienceconference.caltech.edu

November 10, 2025 at 5:43 PM

Reposted by Anima Anandkumar

Join @aratip.bsky.social, Vivek Vishwanathan & @anima-anandkumar.bsky.social for UC Berkeley’s #TechPolicyWeek!

“Bigger, Better Ambitions for AI” — exploring how #AI can drive positive impact.

Oct 20 | 3–4:15pm | 2400 Ridge Rd

@BerkeleyISchool.bsky.social @GoldmanSchool.bsky.social

Bigger, Better Ambitions for AI

How can we advance efforts to harness AI to deliver positive impacts to people's lives?

www.eventbrite.com

October 11, 2025 at 10:50 PM

I am thrilled to see Omar Yaghi win the Nobel Prize in Chemistry today. I have had the privilege to interact and collaborate with him. This is a paper from a couple of years ago using generative models for MOFs with the @ucberkeleyofficial.bsky.social group. pubs.acs.org/doi/10.1021/...

Shaping the Water-Harvesting Behavior of Metal–Organic Frameworks Aided by Fine-Tuned GPT Models

We construct a data set of metal–organic framework (MOF) linkers and employ a fine-tuned GPT assistant to propose MOF linker designs by mutating and modifying the existing linker structures. This strategy allows the GPT model to learn the intricate language of chemistry in molecular representations, thereby achieving an enhanced accuracy in generating linker structures compared with its base models. Aiming to highlight the significance of linker design strategies in advancing the discovery of water-harvesting MOFs, we conducted a systematic MOF variant expansion upon state-of-the-art MOF-303 utilizing a multidimensional approach that integrates linker extension with multivariate tuning strategies. We synthesized a series of isoreticular aluminum MOFs, termed Long-Arm MOFs (LAMOF-1 to LAMOF-10), featuring linkers that bear various combinations of heteroatoms in their five-membered ring moiety, replacing pyrazole with either thiophene, furan, or thiazole rings or a combination of two. Beyond their consistent and robust architecture, as demonstrated by permanent porosity and thermal stability, the LAMOF series offers a generalizable synthesis strategy. Importantly, these 10 LAMOFs establish new benchmarks for water uptake (up to 0.64 g g–1) and operational humidity ranges (between 13 and 53%), thereby expanding the diversity of water-harvesting MOFs.

pubs.acs.org

October 8, 2025 at 7:15 PM

Reposted by Anima Anandkumar

Our new paper on AI-generated TrpBs with @anima-anandkumar.bsky.social. GenSLM generated very useful promiscuous TrpB #enzymes, bypassing a lot of #directedevolution! Great work by the whole team, especially Theophile Lambert. www.biorxiv.org/content/10.1...

www.biorxiv.org

September 4, 2025 at 2:58 PM

Very pleased to see our AI model GenSLM designing novel and versatile enzymes in a challenging setting in the @francesarnold.bsky.social lab in the tryptophan synthase (TrpB) family. www.biorxiv.org/content/10.1...

AI can create novel enzymes that outperformed both natural and laboratory-optimized TrpBs.

www.biorxiv.org

September 3, 2025 at 7:31 PM

How do we build AI for science? Augment with AI or replace with AI? Augmenting with AI involves keeping existing numerical simulations. In our latest paper, we show that end-to-end learning is significantly faster and also wins in data efficiency, which is counterintuitive. arxiv.org/pdf/2408.05177 #ai

September 2, 2025 at 12:37 AM

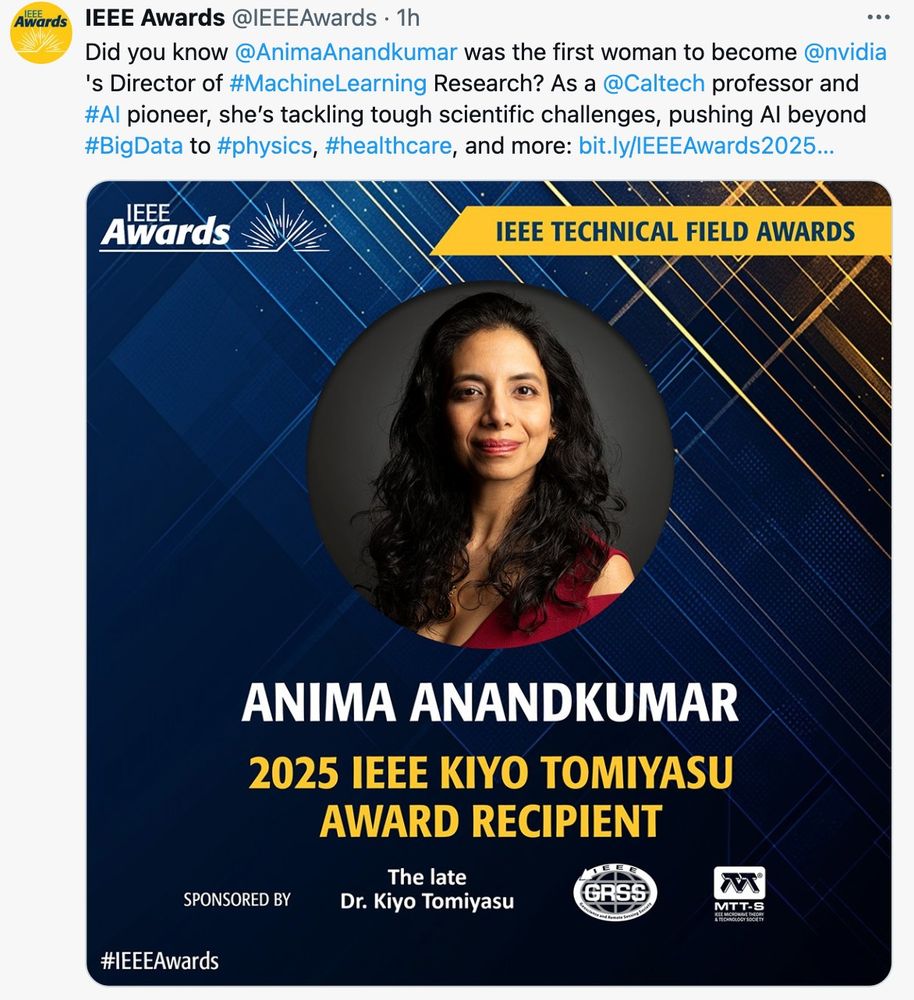

Thank you @cvprconference.bsky.social for hosting my IEEE Kiyo Tomiyasu award for bringing AI to scientific domains with Neural Operators and physics-informed learning. The future of science is AI+Science!

corporate-awards.ieee.org/award/ieee-k...

June 16, 2025 at 3:03 AM

Reposted by Anima Anandkumar

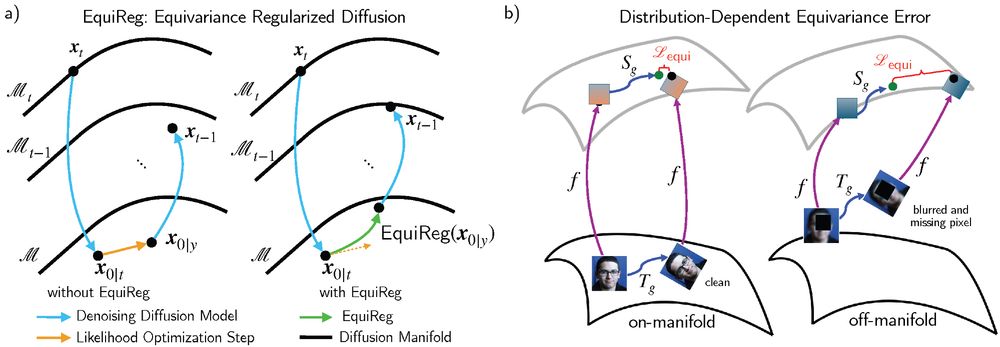

🚨We propose EquiReg, a generalized regularization framework that uses symmetry in generative diffusion models to improve solutions to inverse problems. arxiv.org/abs/2505.22973

@aditijc.bsky.social, Rayhan Zirvi, Abbas Mammadov, @jiacheny.bsky.social, Chuwei Wang, @anima-anandkumar.bsky.social 1/

June 12, 2025 at 3:47 PM

Thank you @caltech.edu for including me in the history of AI. It starts with Carver Mead, John Hopfield and Richard Feynman teaching a course on physics of computation. Not many are aware that the main AI conference, NeurIPS, started at @caltech.edu

magazine.caltech.edu/post/ai-mach...

The Roots of Neural Network: How Caltech Research Paved the Way to Modern AI — Caltech Magazine

Tracing the roots of neural networks, the building blocks of modern AI, at Caltech. By Whitney Clavin

magazine.caltech.edu

June 10, 2025 at 5:34 PM

Reposted by Anima Anandkumar

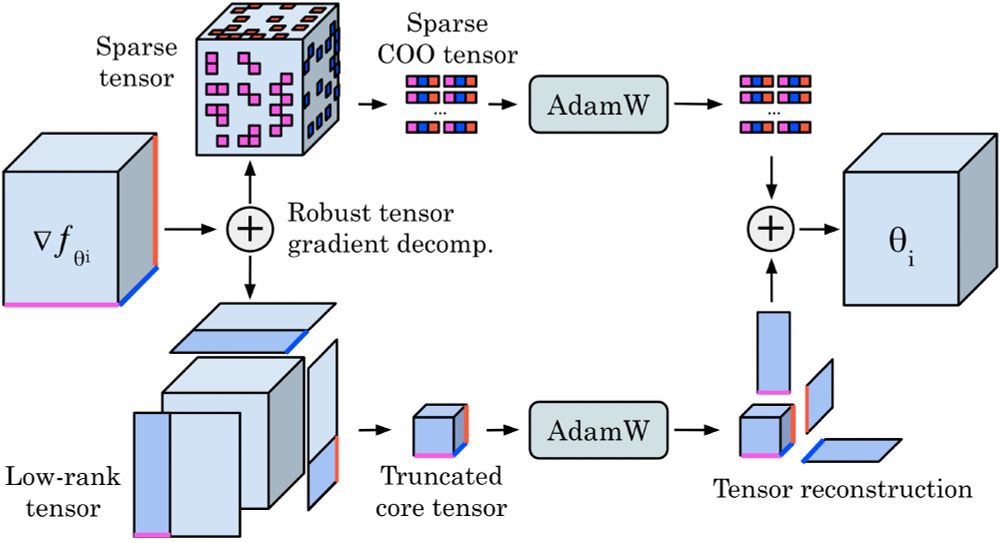

Check out our new preprint 𝐓𝐞𝐧𝐬𝐨𝐫𝐆𝐑𝐚𝐃.

We use a robust decomposition of the gradient tensors into low-rank + sparse parts to reduce optimizer memory for Neural Operators by up to 𝟕𝟓%, while matching the performance of Adam, even on turbulent Navier–Stokes (Re 10e5).

June 3, 2025 at 3:17 AM

Reposted by Anima Anandkumar

Thanks to my co-authors David Pitt, Robert Joseph George, Jiawei Zhao, Cheng Luo, Yuandong Tian, Jean Kossaifi, @anima-anandkumar.bsky.social, and @caltech.edu for hosting me this spring!

Paper: arxiv.org/abs/2501.02379

Code: github.com/neuraloperat...

TensorGRaD: Tensor Gradient Robust Decomposition for Memory-Efficient Neural Operator Training

Scientific problems require resolving multi-scale phenomena across different resolutions and learning solution operators in infinite-dimensional function spaces. Neural operators provide a powerful fr...

arxiv.org

June 3, 2025 at 3:17 AM

It was an honor to be part of the Google I/O Dialogues stage and talk about AI+Science.

AI needs to understand the physical world to make new scientific discoveries.

LLMs come up with new ideas, but the bottleneck is testing in the real world.

Physics-informed learning is needed.

youtu.be/NYtQuneZMXc?...

Science in the age of AI

YouTube video by Google for Developers

youtu.be

June 1, 2025 at 6:21 PM

In a recent interview I talk about what it takes for AI to make new scientific discoveries. tldr: it won’t be just LLMs. www.newindiaabroad.com/english/tech...

Indian American professor Anima Anandkumar on developing AI for new scientific discoveries

Learn how Indian American professor Anima Anandkumar is revolutionizing the world of artificial intelligence to drive new scientific discoveries. Explore her cutting-edge research and innovative appro...

www.newindiaabroad.com

May 25, 2025 at 11:26 PM

Thank you EO for coming to @caltech.edu and interviewing me on #ai. I talk about the need to stay curious and to use AI as a tool, rather than being afraid of it. I also talk about AI for scientific modeling and discovery, and training the first high-resolution AI-based weather model. youtu.be/FIxLJVthW6I

Caltech AI Professor: The One Skill AI Can't Replace | Anima Anandkumar

YouTube video by EO

youtu.be

May 4, 2025 at 8:41 PM

Reposted by Anima Anandkumar

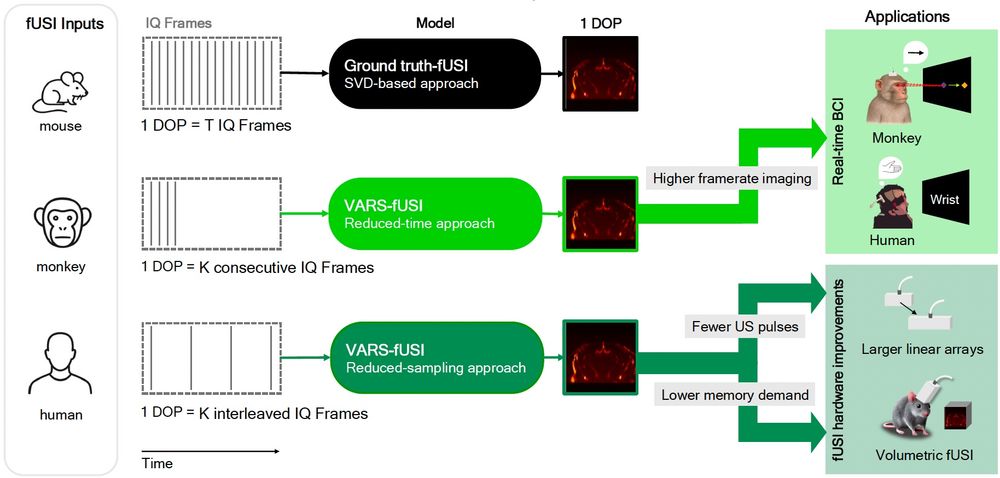

We have released VARS-fUSI: Variable sampling for fast and efficient functional ultrasound imaging (fUSI) using neural operators.

The first deep learning fUSI method to allow for different sampling durations and rates during training and inference. biorxiv.org/content/10.1... 1/

April 28, 2025 at 5:55 PM

Reposted by Anima Anandkumar

Rayhan Zirvi is presenting our paper "Diffusion State-Guided Projected Gradient for Inverse Problems" at #ICLR2025! Joint work with @anima-anandkumar.bsky.social 1/

paper: openreview.net/pdf?id=kRBQw...

code: github.com/Anima-Lab/Di...

website: diffstategrad.github.io

April 24, 2025 at 4:58 AM

Reposted by Anima Anandkumar

#WomensHistoryMonth: Honoring trailblazing #WomenOfAI whose research has made an impact on the current #AI/ML revolution incl. @anima-anandkumar.bsky.social @timnitgebru.bsky.social @mmitchell.bsky.social @deviparikh.bsky.social @ajlunited.bsky.social @yejinchoinka.bsky.social @drfeifei.bsky.social

March 30, 2025 at 7:33 PM

Reposted by Anima Anandkumar

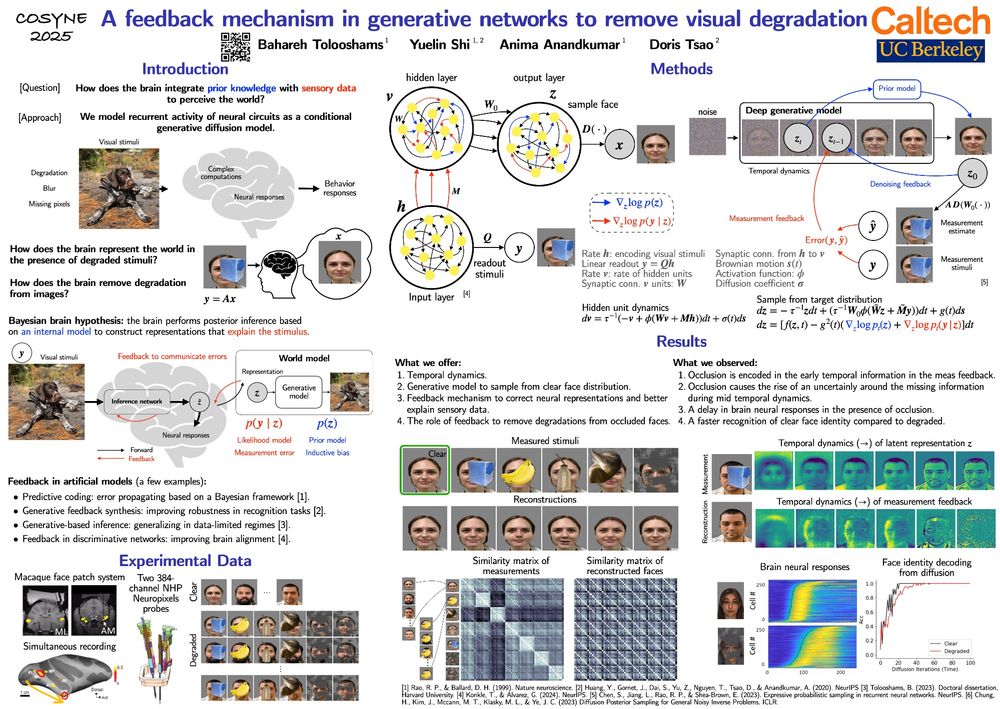

How does the brain integrate prior knowledge with sensory data to perceive the world?

Come check out our poster [1-090] at #cosyne2025:

"A feedback mechanism in generative networks to remove visual degradation," joint work with Yuelin Shi, @anima-anandkumar.bsky.social, and Doris Tsao. 1/2

March 27, 2025 at 8:59 PM

Thank you, IEEE, for the honor! AI+Science is here to stay. I started working on this seriously after I joined @caltech.edu in 2017. We grounded our work in principled foundations, such as Neural Operators and physics-informed learning, for accelerating modeling and making scientific discoveries.

March 18, 2025 at 6:17 PM

Reposted by Anima Anandkumar

LeanAgent: Lifelong learning for formal theorem proving. ~ Adarsh Kumarappan, Mo Tiwari, Peiyang Song, Robert Joseph George, Chaowei Xiao, Anima Anandkumar. arxiv.org/abs/2410.06209 #LLMs #ITP #LeanProver #Math

LeanAgent: Lifelong Learning for Formal Theorem Proving

Large Language Models (LLMs) have been successful in mathematical reasoning tasks such as formal theorem proving when integrated with interactive proof assistants like Lean. Existing approaches involv...

arxiv.org

March 13, 2025 at 7:42 AM

We are excited to share our #ICLR2025 paper on LeanAgent: the first lifelong learning agent for formal theorem proving in Lean. It achieves exceptional scores in stability and backward transfer.

arxiv.org/abs/2410.06209 github.com/lean-dojo/Le...

LeanAgent: Lifelong Learning for Formal Theorem Proving

Large Language Models (LLMs) have been successful in mathematical reasoning tasks such as formal theorem proving when integrated with interactive proof assistants like Lean. Existing approaches involv...

arxiv.org

March 12, 2025 at 9:42 PM

Thank you for the Time 100 AI impact award. time.com/7212504/time...

February 28, 2025 at 5:57 PM

2024 was a pivotal year for ai+science. Here’s my research summary tensorlab.cms.caltech.edu/users/anima/...

Anima AI + Science Lab

tensorlab.cms.caltech.edu

January 2, 2025 at 6:42 AM

Excited to present our work on CoDA-NO at #NeurIPS2024. We develop a novel neural operator architecture designed to solve coupled partial differential equations (PDEs) in multiphysics systems. Paper: arxiv.org/abs/2403.12553

Pretraining Codomain Attention Neural Operators for Solving Multiphysics PDEs

Existing neural operator architectures face challenges when solving multiphysics problems with coupled partial differential equations (PDEs) due to complex geometries, interactions between physical va...

arxiv.org

December 9, 2024 at 5:13 AM

Hello blue sky!

November 20, 2024 at 6:05 PM
