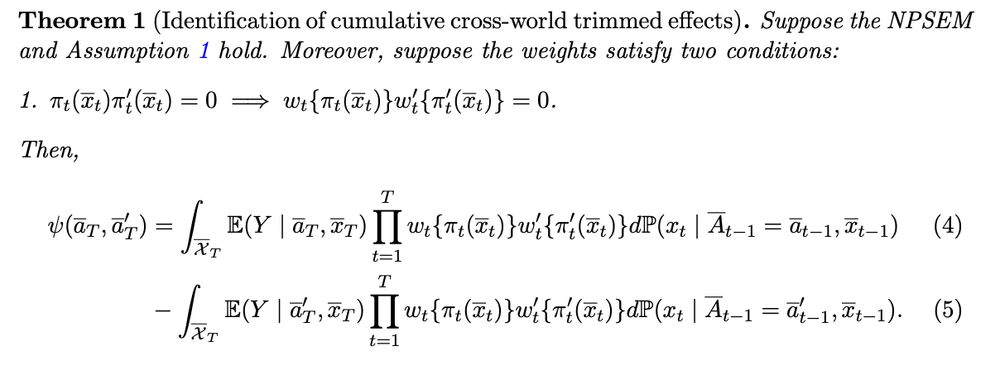

- ID: Needs strong sequential randomization, but identification is still possible without positivity

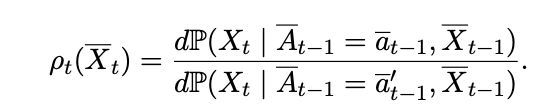

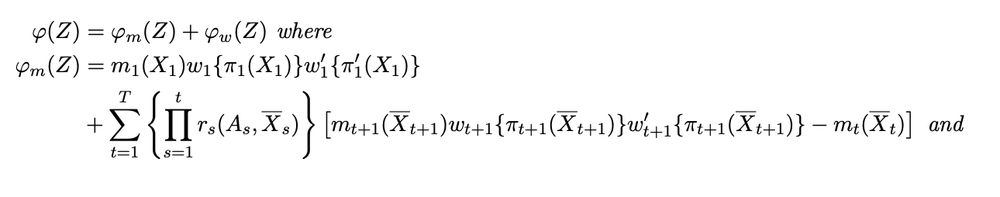

- Est: the new EIF for the doubly robust estimator involves an additional term with a covariate density ratio across the target regimes

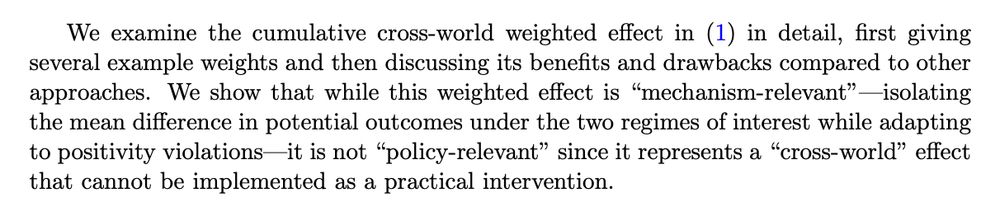

- "Cross-world"

- "Mechanism-relevant" (they target mean diff in POs we care about)

- **Not** "policy-relevant" (they're not implementable)

This tradeoff arises elsewhere (mediation, censoring by death). Ours is another example:

What you want to know != what you can implement

Some notes vv

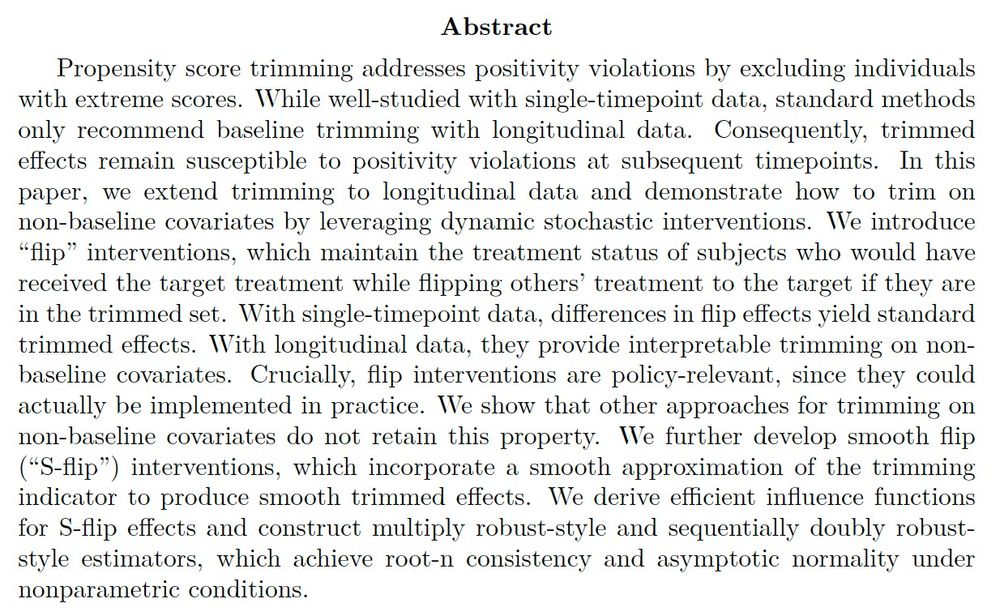

"Longitudinal trimming and smooth trimming with flip and S-flip interventions"

Prelim draft: alecmcclean.github.io/files/LSTTEs...

This was fun work with Edward and Zach Branson (sites.google.com/site/zjbrans...) and was a great project to finish my PhD!

9/9

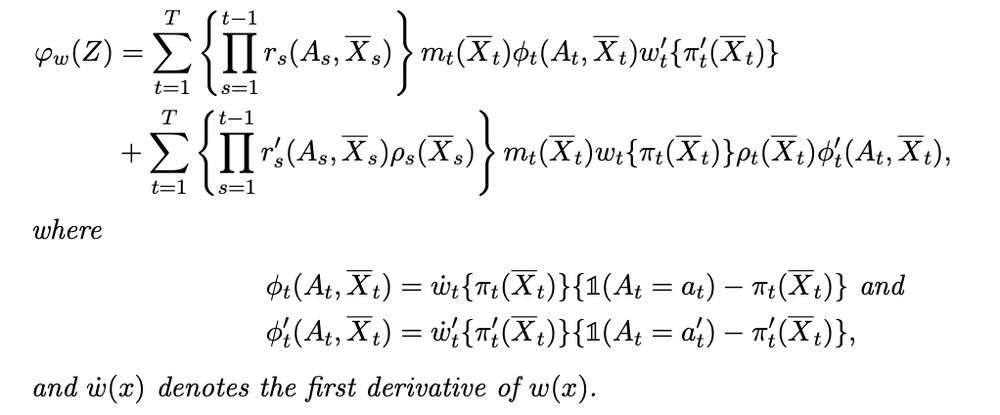

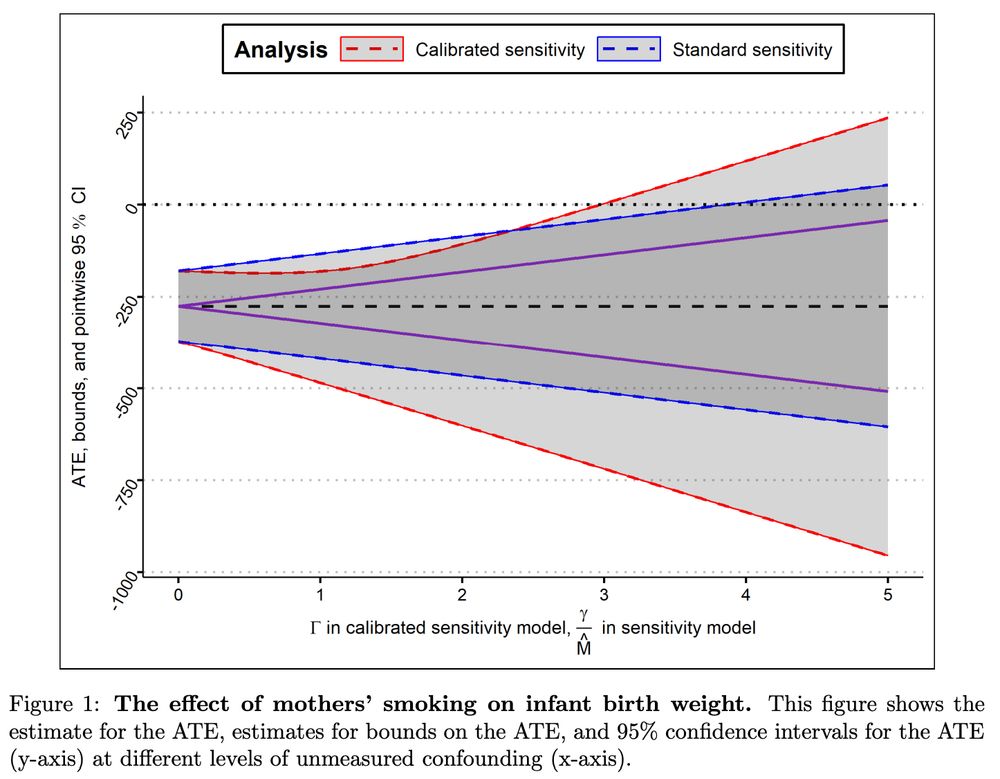

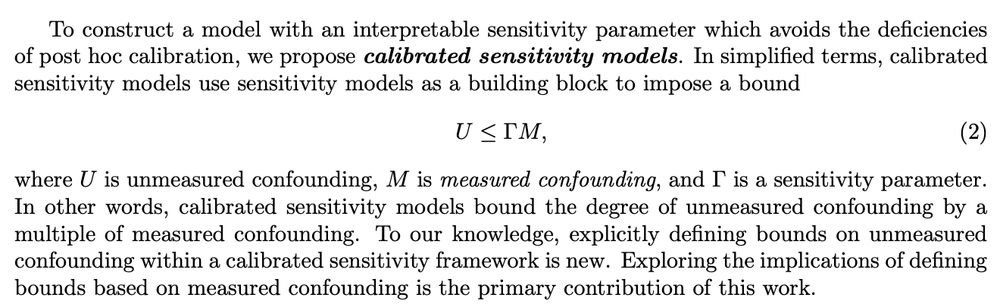

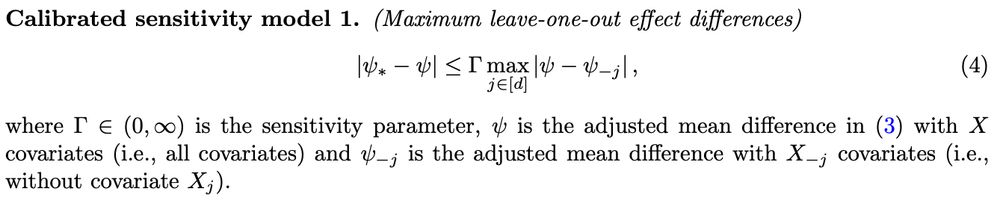

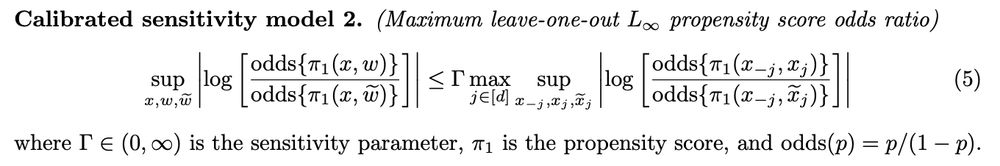

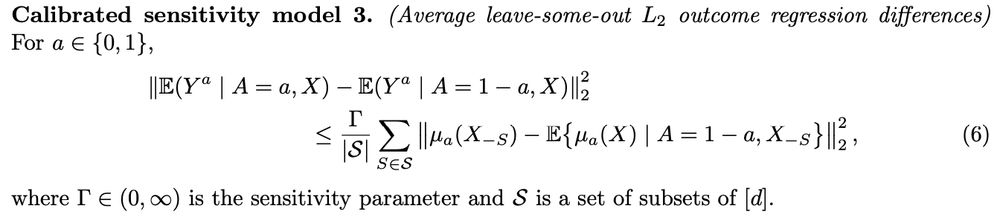

U <= GM

where G is a sensitivity parameter.

We outline many choices for U and M and develop three specific models. We then identify bounds on the ATE and give estimators that account for uncertainty in estimating M.

8/9

Sensitivity analyses look at how unmeasured confounders (U) alter causal effect estimates (when, e.g., treatment is not randomized). To understand U, we can calibrate by estimating analogous ~measured~ confounding (M) by leaving out variables from the data.

6/9
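
A minimal sketch of the leave-out calibration idea, under assumptions that are not from the thread: here U is taken to be the absolute bias in a regression-adjusted effect estimate, and M is the largest change in that estimate when one measured covariate is dropped. The helper names `ols_effect` and `calibrated_bounds` are hypothetical, and this plug-in version ignores the uncertainty in estimating M.

```python
import numpy as np

def ols_effect(X, A, Y):
    """Coefficient on treatment A in a least-squares fit of Y on (1, A, X)."""
    design = np.column_stack([np.ones(len(Y)), A, X])
    beta, *_ = np.linalg.lstsq(design, Y, rcond=None)
    return float(beta[1])

def calibrated_bounds(X, A, Y, G):
    """Bounds estimate +/- G * M, with M from leave-one-covariate-out refits."""
    full = ols_effect(X, A, Y)
    # Measured confounding M: largest shift from omitting a single covariate.
    shifts = [
        abs(ols_effect(np.delete(X, j, axis=1), A, Y) - full)
        for j in range(X.shape[1])
    ]
    M = max(shifts)
    # Assumed sensitivity model: unmeasured bias U is at most G * M.
    return full - G * M, full + G * M, M

# Simulated data (illustrative only): X[:, 0] confounds treatment and outcome.
rng = np.random.default_rng(0)
n = 4000
X = rng.normal(size=(n, 3))
A = (X[:, 0] + rng.normal(size=n) > 0).astype(float)
Y = 1.0 * A + 2.0 * X[:, 0] + 0.5 * X[:, 1] + rng.normal(size=n)

lo, hi, M = calibrated_bounds(X, A, Y, G=1.0)
print(lo, hi, M)
```

Setting G = 1 asks what happens if unmeasured confounding is as strong as the strongest measured covariate; larger G widens the bounds proportionally.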

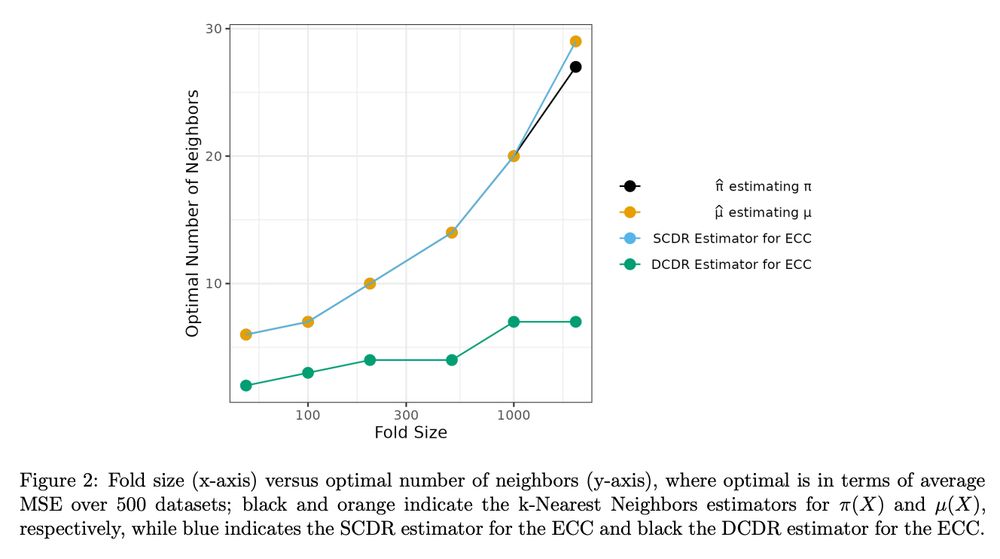

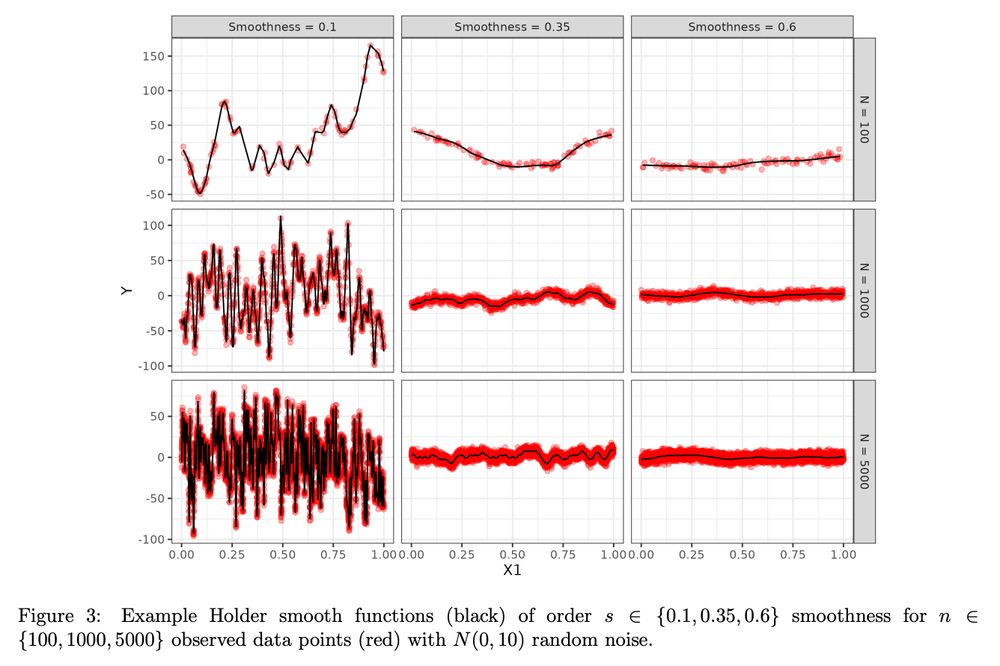

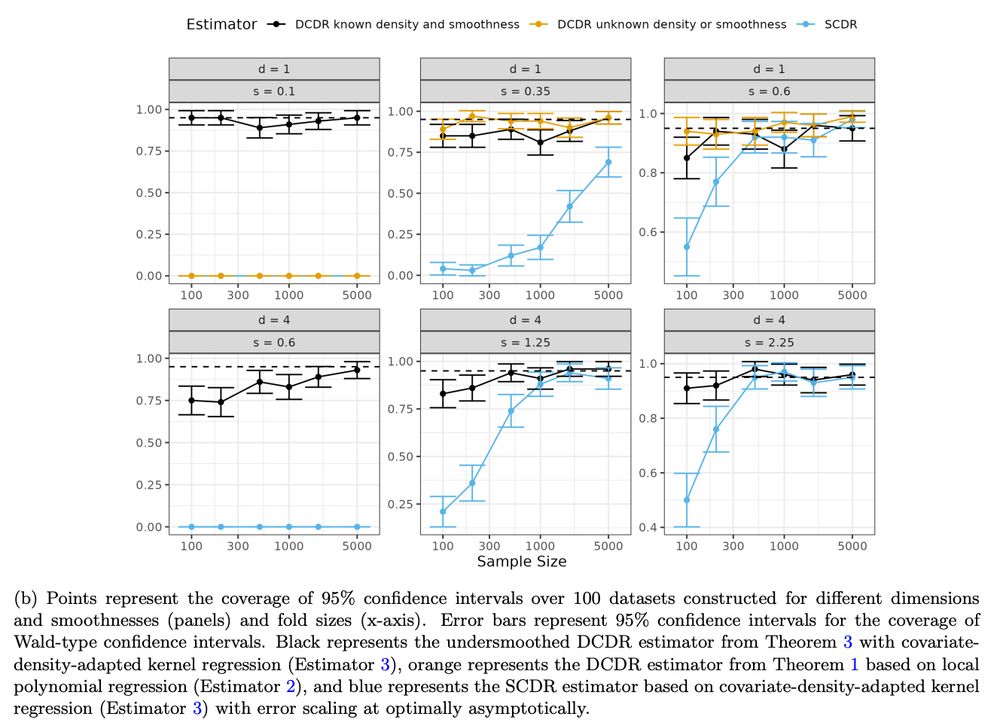

4. Simulations illustrating 1-3. Manufacturing Hölder-smooth functions was an interesting challenge!

4/9

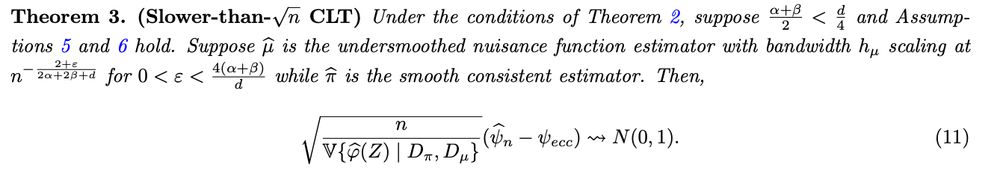

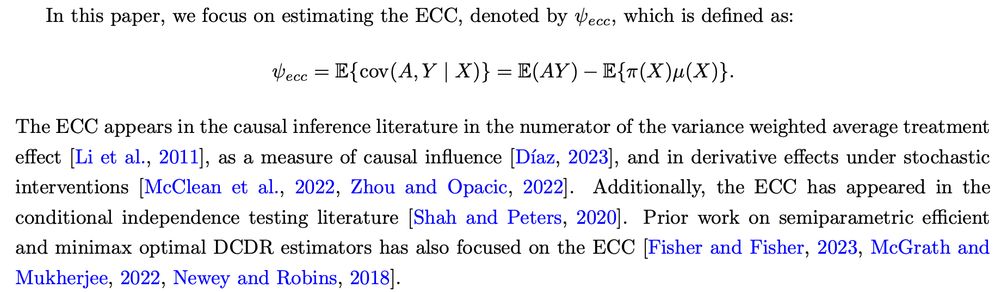

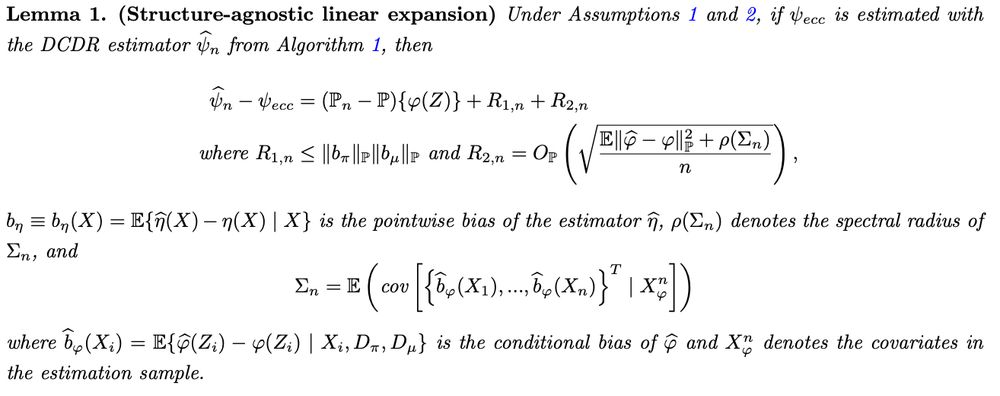

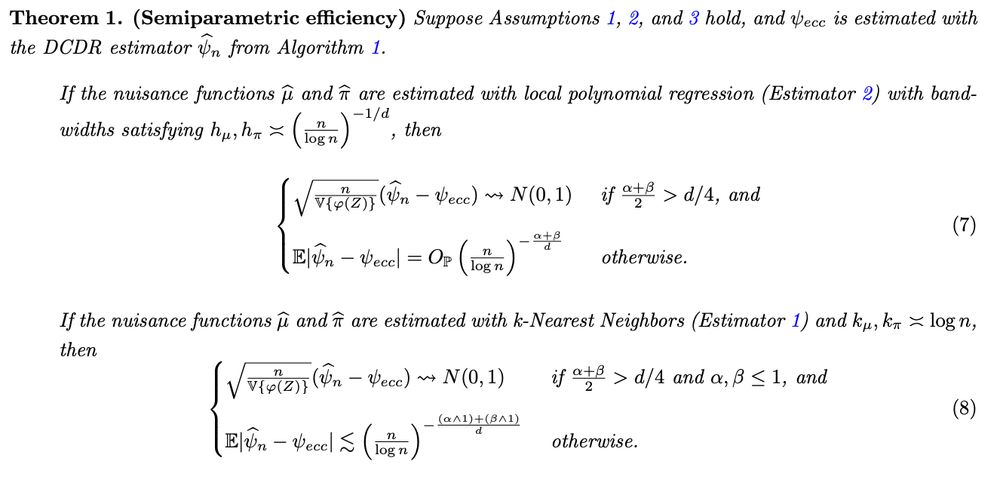

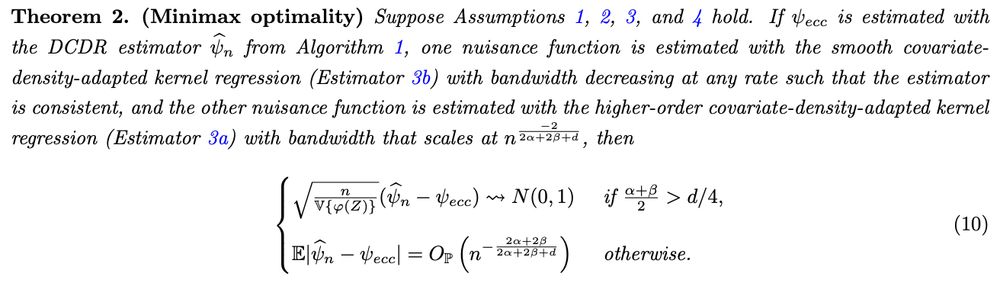

1. Structure-agnostic linear expansion for the DCDR estimator. Nuisance function estimator bias matters more than variance.

2. Rates with local linear smoothers for the nuisance functions under Hölder smoothness. Semiparametric efficiency and minimax optimality are possible.

3/9
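
For readers unfamiliar with local linear smoothers, here is a minimal one-dimensional sketch. The Gaussian kernel, the bandwidth `h`, and the function name `local_linear` are illustrative assumptions, not details from the thread.

```python
import numpy as np

def local_linear(x_train, y_train, x0, h):
    """Local linear estimate of E[Y | X = x0] using a Gaussian kernel."""
    # Kernel weights: points near x0 count more.
    w = np.exp(-0.5 * ((x_train - x0) / h) ** 2)
    # Design centered at x0, so the intercept is the fitted value at x0.
    D = np.column_stack([np.ones_like(x_train), x_train - x0])
    WD = D * w[:, None]
    # Weighted least squares: solve (D' W D) beta = D' W y.
    beta = np.linalg.solve(D.T @ WD, WD.T @ y_train)
    return float(beta[0])

# Sanity check on noiseless linear data, which a local linear fit
# reproduces up to floating-point error.
x = np.linspace(0.0, 1.0, 200)
y = 1.0 + 2.0 * x
fit = local_linear(x, y, x0=0.3, h=0.1)
print(fit)
```

The bandwidth controls the bias-variance tradeoff: a smaller `h` reduces smoothing bias at the cost of higher variance.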

The DCDR estimator is quite new (2018, arxiv.org/abs/1801.09138). It splits the training data and trains the nuisance functions on independent folds.

It can achieve faster convergence rates than the usual DR estimator, which trains the nuisance functions on the same sample.

We analyze the DCDR estimator in detail!

2/9
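
A rough sketch of the fold structure described above, under several assumptions not taken from the thread: the target is the expected conditional covariance E[(A - pi(X))(Y - mu(X))], chosen here for simplicity; the nuisance functions are fit by plain least squares as a stand-in for whatever smoother one would actually use; and the names `fit_linear` and `dcdr_expected_cond_cov` are hypothetical.

```python
import numpy as np

def fit_linear(X, y):
    """Least-squares fit with intercept; returns a prediction function."""
    design = np.column_stack([np.ones(len(X)), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return lambda Xnew: np.column_stack([np.ones(len(Xnew)), Xnew]) @ beta

def dcdr_expected_cond_cov(X, A, Y, seed=0):
    """Double cross-fit estimate of E[(A - pi(X)) * (Y - mu(X))]."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(Y)), 3)
    estimates = []
    for k in range(3):
        # Each nuisance function gets its own fold; a third fold is held out.
        f_pi, f_mu, f_est = folds[k], folds[(k + 1) % 3], folds[(k + 2) % 3]
        pi_hat = fit_linear(X[f_pi], A[f_pi])   # estimates E[A | X]
        mu_hat = fit_linear(X[f_mu], Y[f_mu])   # estimates E[Y | X]
        resid_a = A[f_est] - pi_hat(X[f_est])
        resid_y = Y[f_est] - mu_hat(X[f_est])
        estimates.append(np.mean(resid_a * resid_y))
    # Rotate fold roles and average so every observation is used.
    return float(np.mean(estimates))

# Simulated data (illustrative only): Cov(A, Y | X) = 2 by construction.
rng = np.random.default_rng(1)
n = 6000
X = rng.normal(size=(n, 1))
A = 0.5 * X[:, 0] + rng.normal(size=n)
Y = X[:, 0] + 2.0 * A + rng.normal(size=n)

estimate = dcdr_expected_cond_cov(X, A, Y)
print(estimate)
```

The usual cross-fit DR estimator would instead fit both `pi_hat` and `mu_hat` on the same training fold; the only change here is that the two fits never share data.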
