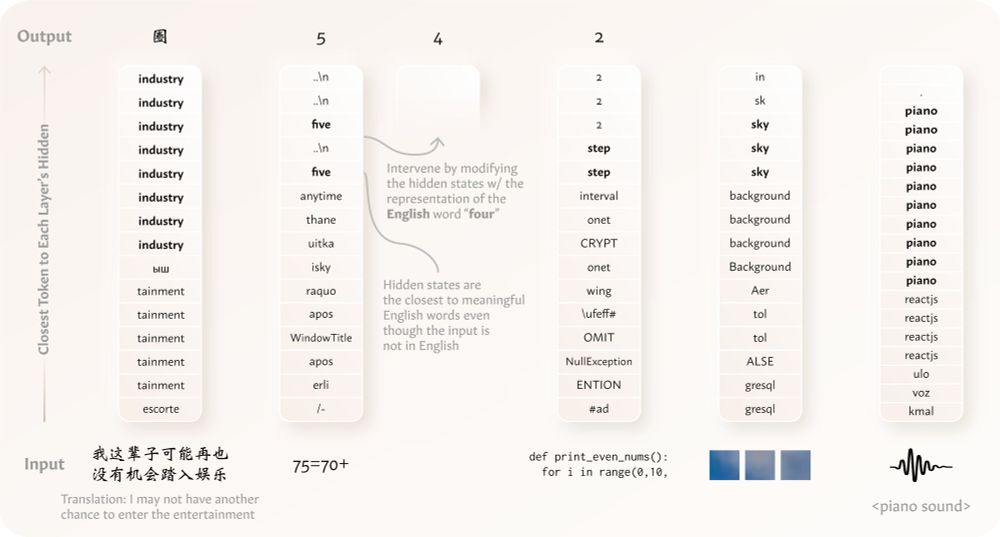

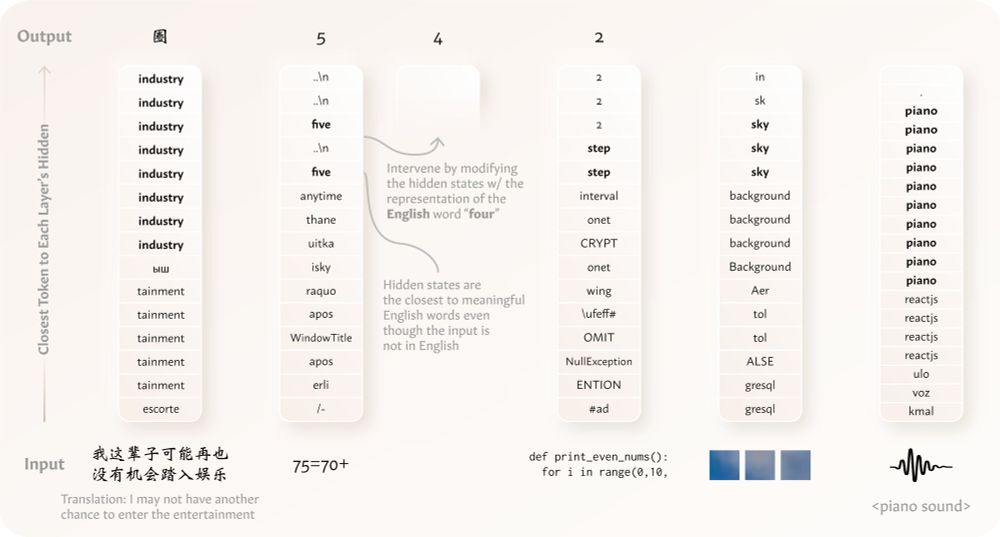

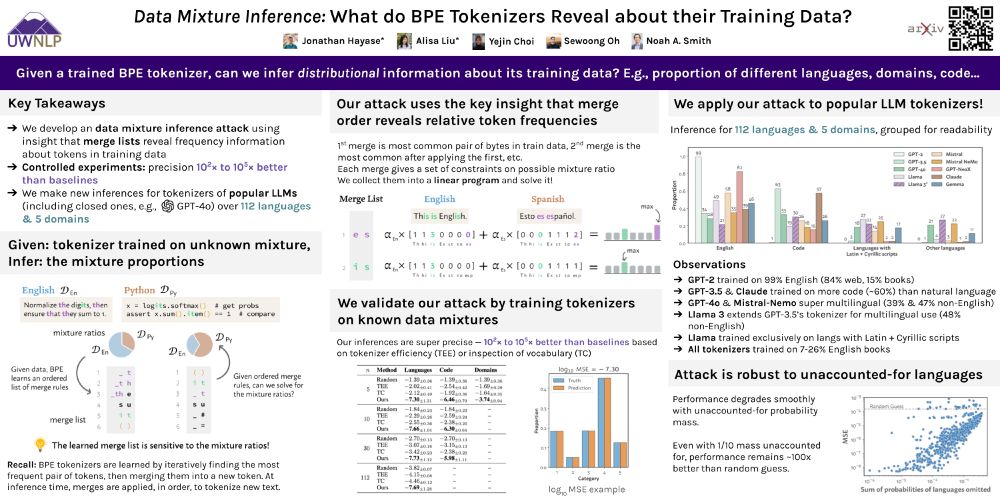

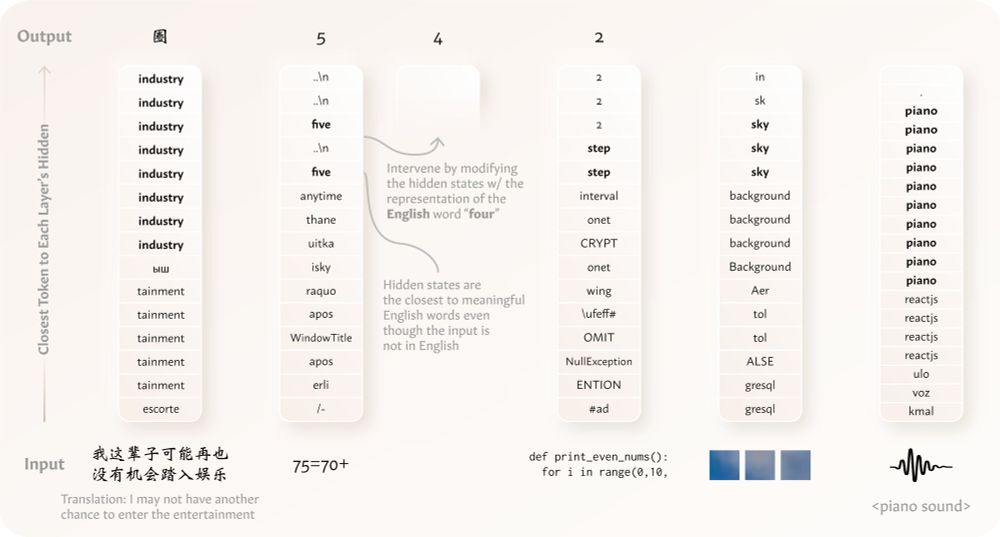

A new study shows LLMs represent different data types based on their underlying meaning & reason about data in their dominant language: bit.ly/3QrZvyy

🔗 arxiv.org/abs/2407.16607

We release ASL STEM Wiki: the first signing dataset of STEM articles!

📰 254 Wikipedia articles

📹 ~300 hours of ASL interpretations

👋 New task: automatic sign suggestion to make STEM education more accessible

microsoft.com/en-us/resear...

🧵 #EMNLP2024
