AKA @akamarkman

It happened to my colleague:

www.washingtonpost.com/technology/2...

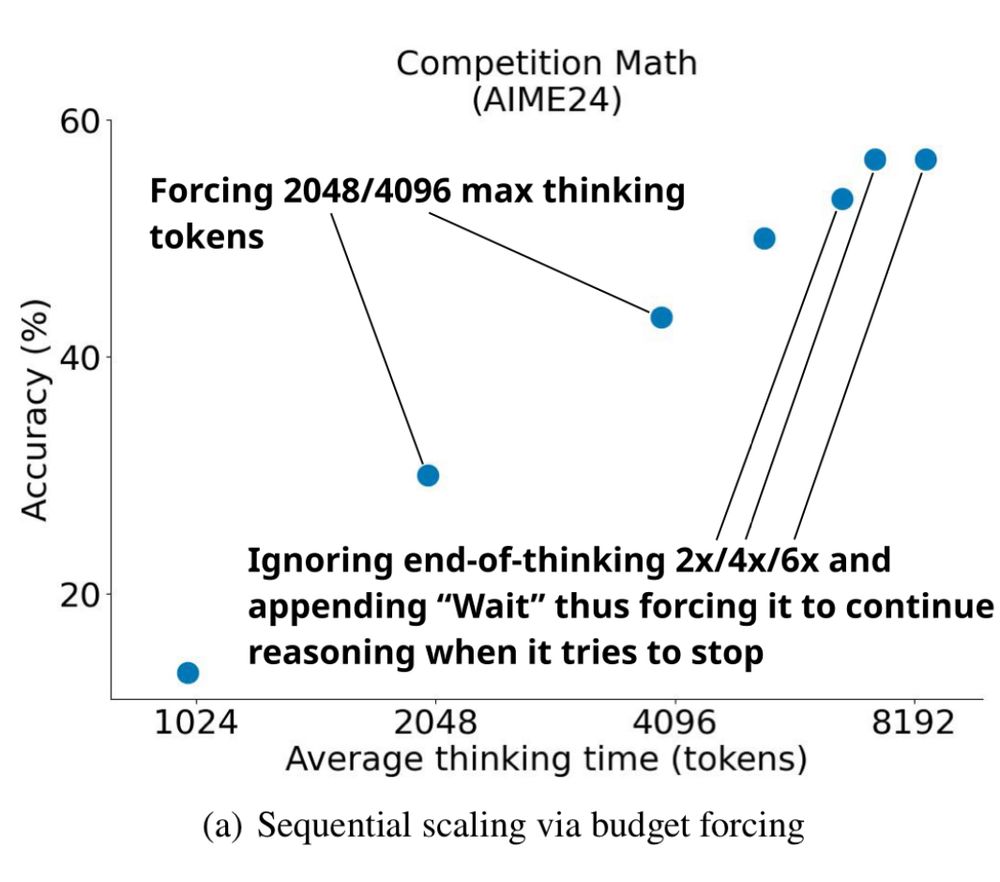

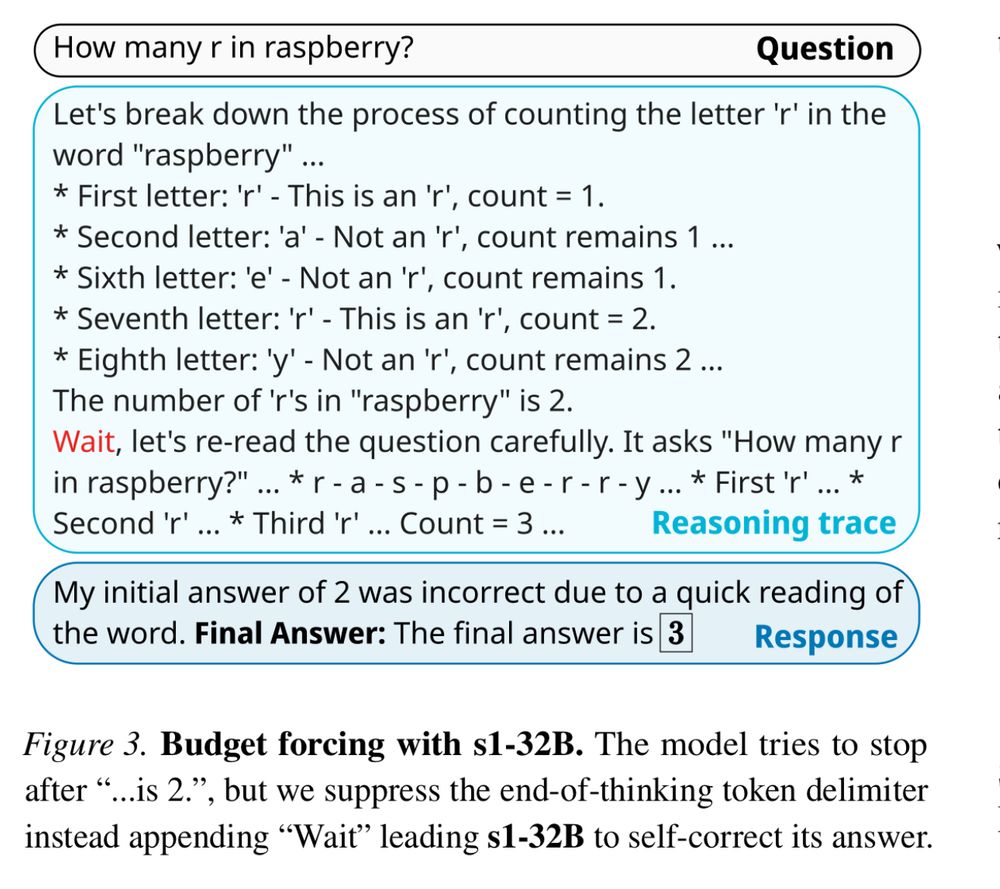

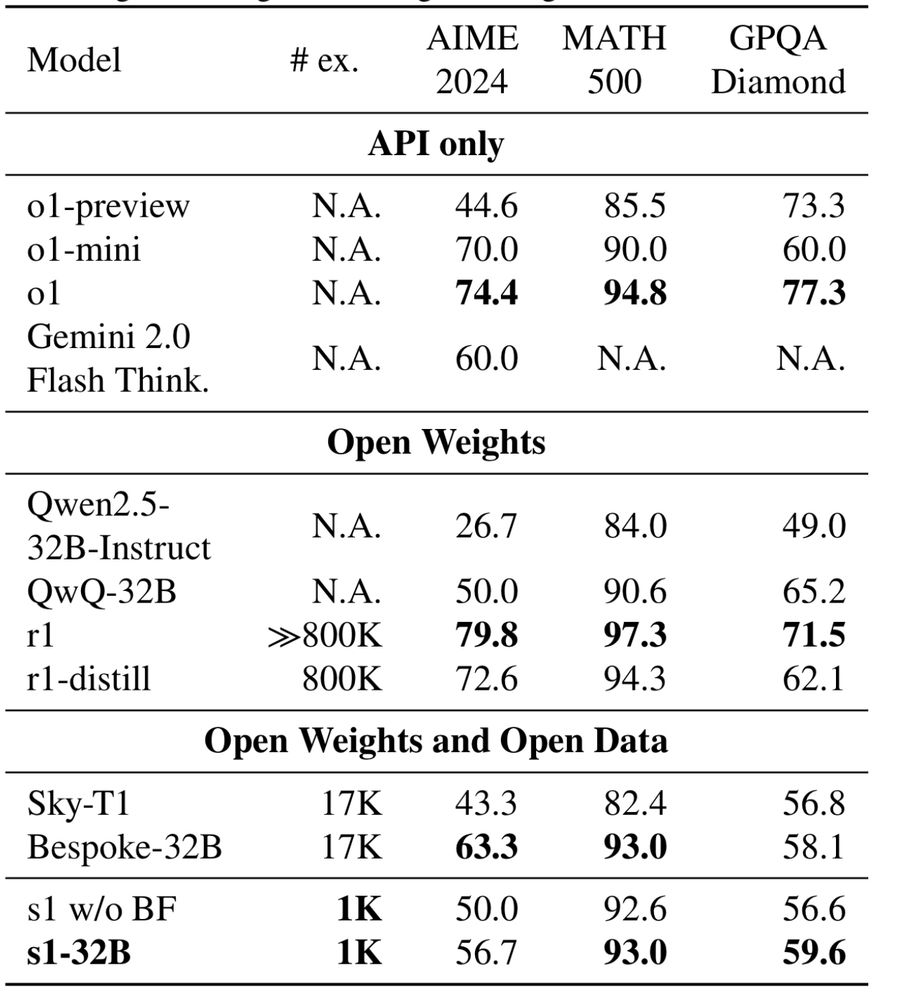

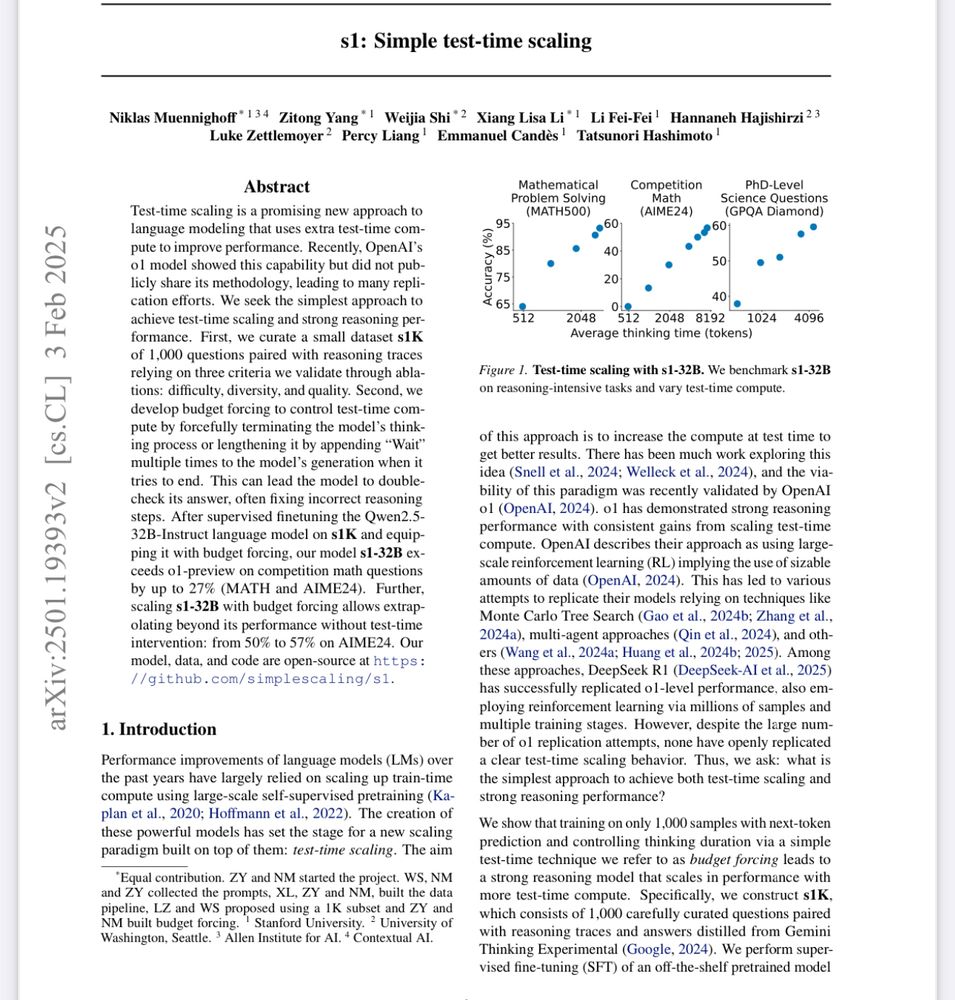

They used just 1,000 carefully curated reasoning examples and a trick: whenever the model tries to stop thinking, they append "Wait" to force it to keep going. The result comes close to o1 at math. arxiv.org/pdf/2501.19393
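
A rough sketch of that "Wait" trick (the paper calls it budget forcing), written against a stand-in model. Everything here is hypothetical and for illustration: `generate_step`, the `<end_think>` delimiter and `toy_model` are not from the paper, and `max_steps` is only a loose analogue of the paper's cap on thinking length.

```python
from typing import Callable

END_THINK = "<end_think>"  # hypothetical end-of-thinking delimiter


def budget_force(generate_step: Callable[[str], str], prompt: str,
                 min_waits: int, max_steps: int = 100) -> str:
    """Suppress the model's attempts to stop thinking by appending ' Wait',
    up to min_waits times; force a stop if max_steps runs out."""
    text = prompt
    waits_used = 0
    for _ in range(max_steps):
        chunk = generate_step(text)
        if chunk == END_THINK:
            if waits_used < min_waits:
                # Model tried to stop early: append "Wait" so it keeps reasoning.
                text += " Wait"
                waits_used += 1
                continue
            text += END_THINK  # enough extra thinking, let it stop
            break
        text += chunk
    else:
        # Step cap hit without a natural stop: force the delimiter.
        text += END_THINK
    return text


def toy_model(text: str) -> str:
    """Hypothetical stand-in: emits one reasoning chunk, then tries to stop."""
    return END_THINK if text.endswith(" step") else " step"


print(budget_force(toy_model, "Q:", min_waits=1))
```

With `min_waits=1` the toy model gets pushed into one extra round of "thinking" (`Q: step Wait step<end_think>`) instead of stopping after the first chunk.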

They used just 1,000 carefully curated reasoning examples & a trick where if the model tries to stop thinking, they append "Wait" to force it to continue. Near o1 at math. arxiv.org/pdf/2501.19393