Currently: Research Scientist at Apple

Previously: Princeton CS PhD, Yale S&DS BSc, MSR FATE & TTIC Intern

https://sunniesuhyoung.github.io/

In our #CHI2025 paper, we explore these questions through two user studies.

1/7

This work is also the last chapter of my dissertation, so the recognition feels all the more special 🏅🎓😊

🎉 to the team @jennwv.bsky.social @qveraliao.bsky.social @tanialombrozo.bsky.social Olga Russakovsky

Next year's conference will be held in Montreal, Canada 🇨🇦

Su Lin Blodgett and Zeerak Talat will be General Chairs, and Michael Madaio will be PC Chair 🎉

(thanks to MindView for the photo!)

Read all of the published papers here: dl.acm.org/doi/proceedi...

Click the pin 📌 in the upper right-hand corner to keep this feed quickly accessible.

bsky.app/profile/mari...

📢 Next I'll join Apple as a research scientist in the Responsible AI team led by @jeffreybigham.com!

What I've been up to: job market & research (responsible AI, human-centered evaluation, overreliance, anthropomorphism) 🤓

🔗 Project Page: ind1010.github.io/interactive_XAI

📄 Extended Abstract: arxiv.org/abs/2504.10745

learn.microsoft.com/en-us/ai/pla...

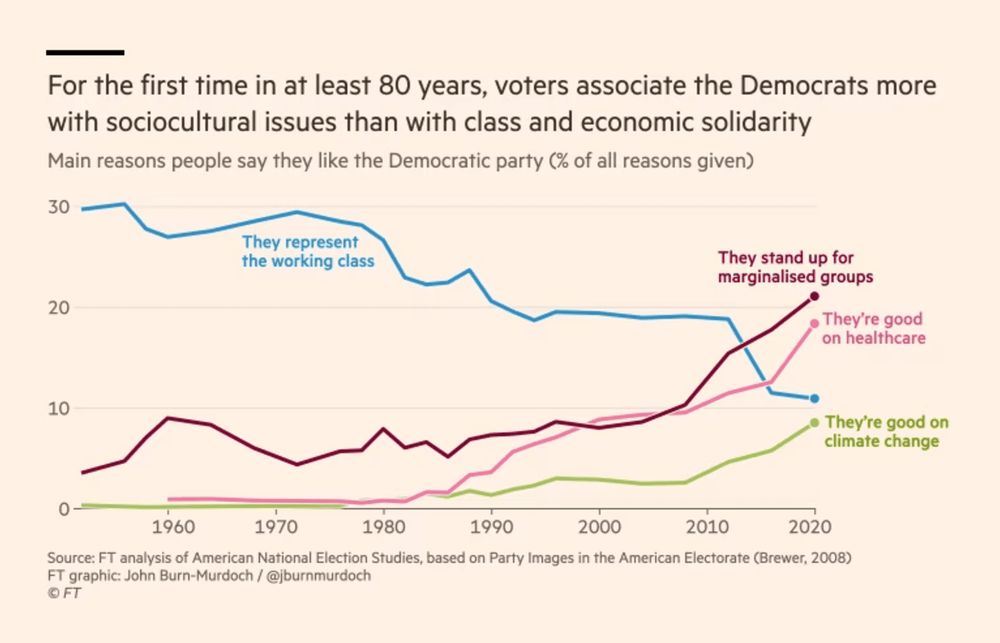

A 🧵 on a topic I find many students struggle with: "why do their 📊 look more professional than my 📊?"

It's *lots* of tiny decisions that aren't the defaults in many libraries, so let's break down 1 simple graph by @jburnmurdoch.bsky.social

🔗 www.ft.com/content/73a1...

arxiv.org/abs/2502.19190

Details: xai4cv.github.io/workshop_cvp...

Submission Site: cmt3.research.microsoft.com/XAI4CV2025

@cvprconference.bsky.social @xai-research.bsky.social
