Sergio Izquierdo

@sizquierdo.bsky.social

PhD candidate at University of Zaragoza.

Previously intern at Niantic Labs and Skydio.

Working on 3D reconstruction and Deep Learning.

serizba.github.io

Reposted by Sergio Izquierdo

One concern that I have as an AI researcher when publishing code is that it can potentially be used in dual-use applications.

To solve this, we propose Civil Software Licenses. They prevent dual-use while being minimal in the restrictions they impose:

civil-software-licenses.github.io

July 31, 2025 at 5:36 PM

Presenting today at #CVPR poster 81.

Code is available at github.com/nianticlabs/...

Want to try it on an iPhone video? On Android? On any other sequence you have? We've got you covered. Check the repo.

June 14, 2025 at 2:25 PM

Presenting it now at #CVPR

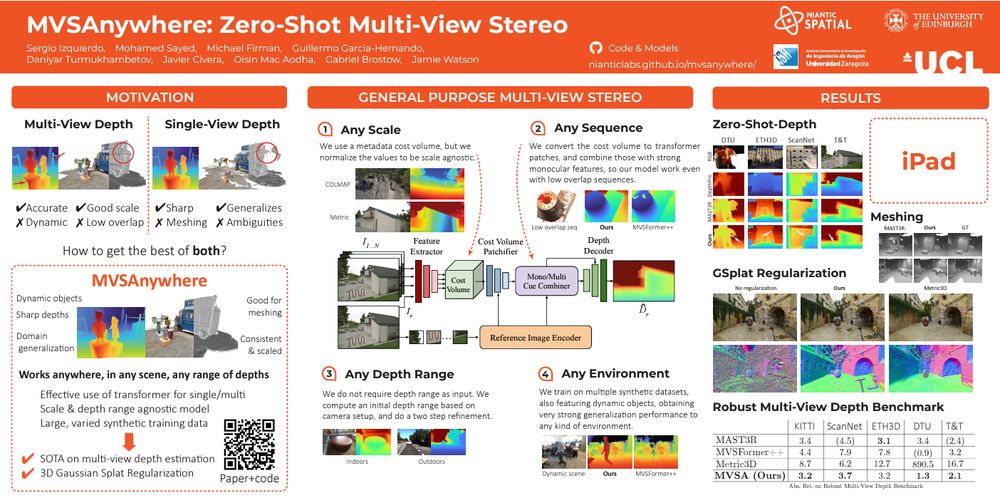

MVSAnywhere: Zero-Shot Multi-View Stereo

Looking for a multi-view stereo depth estimation model which works anywhere, in any scene, with any range of depths?

If so, stop by our poster #81 today in the morning session (10:30 to 12:20) at #CVPR2025.

June 14, 2025 at 2:24 PM

Happy to be one of them

Behind every great conference is a team of dedicated reviewers. Congratulations to this year’s #CVPR2025 Outstanding Reviewers!

cvpr.thecvf.com/Conferences/...

May 15, 2025 at 10:45 AM

🔍Looking for a multi-view depth method that just works?

We're excited to share MVSAnywhere, which we will present at #CVPR2025. MVSAnywhere produces sharp depths, generalizes and is robust to all kinds of scenes, and is scale-agnostic.

More info:

nianticlabs.github.io/mvsanywhere/

March 31, 2025 at 12:52 PM

Reposted by Sergio Izquierdo

MASt3R-SLAM code release!

github.com/rmurai0610/M...

Try it out on videos or with a live camera

Work with @ericdexheimer.bsky.social*, @ajdavison.bsky.social (*Equal Contribution)

Introducing MASt3R-SLAM, the first real-time monocular dense SLAM with MASt3R as a foundation.

Easy to use like DUSt3R/MASt3R: from an uncalibrated RGB video, it recovers accurate, globally consistent poses & a dense map.

With @ericdexheimer.bsky.social* @ajdavison.bsky.social (*Equal Contribution)

February 25, 2025 at 5:23 PM

Reposted by Sergio Izquierdo

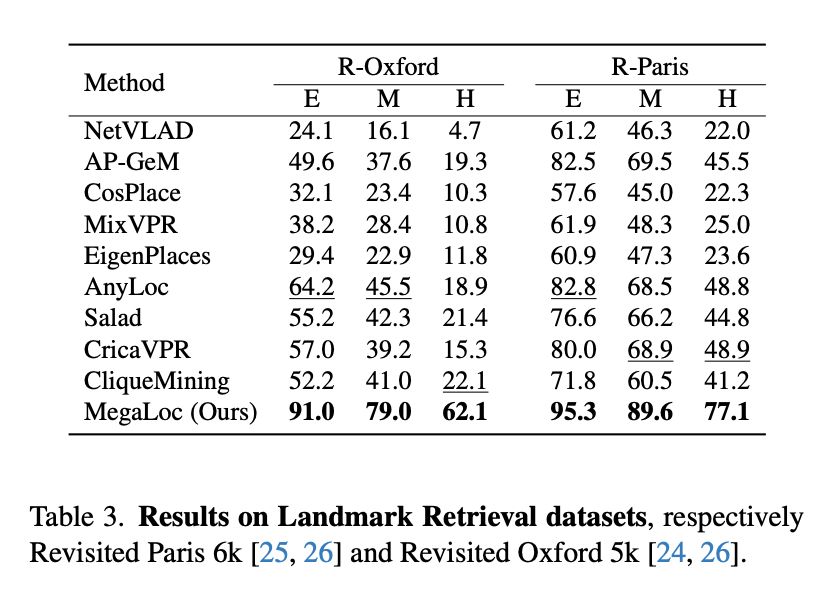

MegaLoc: One Retrieval to Place Them All

@berton-gabri.bsky.social Carlo Masone

tl;dr: DINOv2-SALAD, trained on all available VPR datasets, works very well.

Code should be at github.com/gmberton/Meg..., but is not there yet

arxiv.org/abs/2502.17237

February 25, 2025 at 10:03 AM
