Anything multiscale, iterative, nonlinear

Bluesky has a much better research feed than X, yet I have to scroll through so much random material to get each piece 😓

And I can't block people, as the best writers repost tons of this stuff

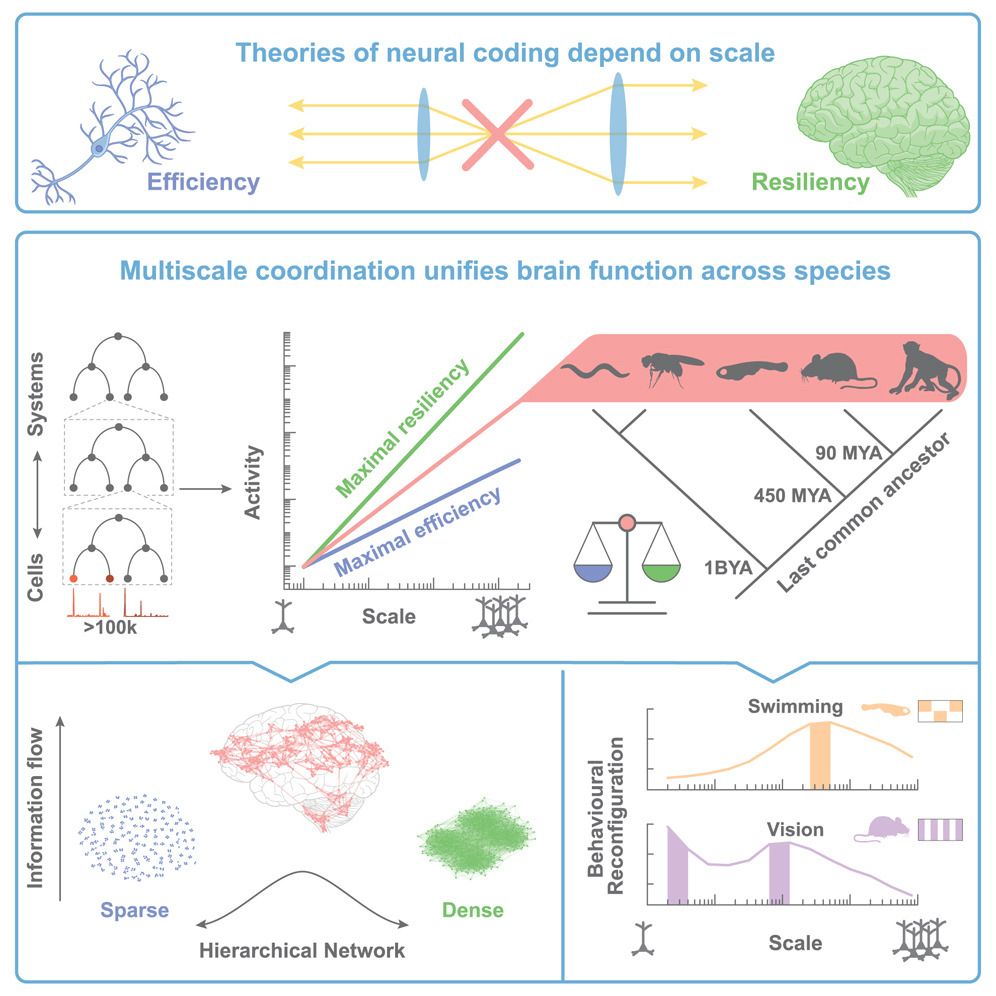

That's a simplification of a really elegant theory, but the principles shown can be implemented in multiple ways (as shown), some even more elegant (to me): not example-based but latent-based

So many possibilities in this approach

We may have an answer: integration of learned priors through feedback. New paper with @kenmiller.bsky.social! 🧵

It was so bad my brain nearly froze, and the only moment shown as engaging was due to me tripping over the cable in disgust 🥲

Gemini 2.5 is quite good at interpreting visualisations like this (vid)

*BIS-BAS

We show that voxel responses during comprehension are organized along 2 main axes: processing difficulty & meaning abstractness—revealing an interpretable, topographic representational basis for language processing shared across individuals

Valve is going toward Neuropixels: 2x4 mm & 18 kHz

www.roadtovr.com/valve-founde...

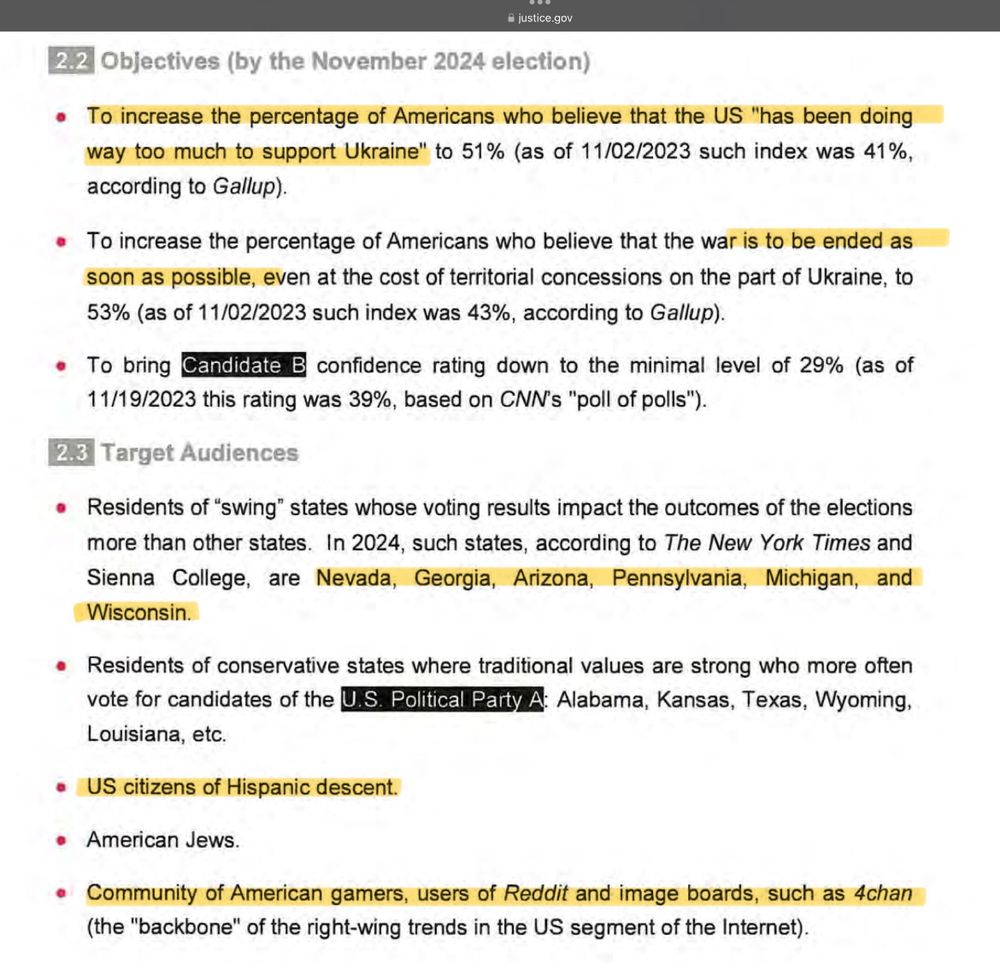

The most expensive part would be the code

Then the AI API

but even at US scale that's pennies, as even the smallest models can match the quality of this campaign

Worst is that it could be escalated, improved and hidden better

Meet project "Good Old USA" the now unsealed DoJ file on the Russian influence in the US to sway opinion on the war in Ukraine.

Something Trump has bought into hook, line and sinker.

It was held under seal, because we got the literal playbook revealing their methods

Main conclusion?

Read books!

Preferably hard, demanding ones written before social media

Regularly, as a detox from simplified, skipped thinking and decision-making

...

Honey 🍯 for my brain 🧠🙏

This has been my most favourite (and toughest) work to date.

Please help share around!!

www.cell.com/cell/abstrac...

(Reach out if you can’t access)

leehanchung.github.io/blogs/2024/1...

I'm starting to think that we are reinventing beam search in a more complex way, deluding ourselves that there is a deeper theory behind it 🙃

But there is so much more...
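For comparison, plain beam search fits in a few lines: at every step, expand each kept sequence, score the extensions, and keep only the top `beam_width`. A minimal sketch, assuming sequences are scored by summed log-probabilities; `beam_search`, `toy_expand` and the toy distribution are hypothetical and not taken from the linked post.

```python
import heapq
import math
from typing import Callable, List, Tuple

Seq = Tuple[str, ...]


def beam_search(
    start: Seq,
    expand: Callable[[Seq], List[Tuple[str, float]]],
    beam_width: int,
    steps: int,
) -> List[Tuple[float, Seq]]:
    """Keep the `beam_width` highest-scoring partial sequences at each step.

    `expand` returns candidate next tokens with their log-probabilities;
    a sequence's score is the sum of its tokens' log-probabilities.
    """
    beam: List[Tuple[float, Seq]] = [(0.0, start)]
    for _ in range(steps):
        # Extend every kept sequence with every candidate token.
        candidates = [
            (score + logp, seq + (tok,))
            for score, seq in beam
            for tok, logp in expand(seq)
        ]
        if not candidates:
            break
        # Prune: keep only the top-k candidates by cumulative score.
        beam = heapq.nlargest(beam_width, candidates, key=lambda c: c[0])
    return beam


def toy_expand(seq: Seq) -> List[Tuple[str, float]]:
    # Hypothetical fixed next-token distribution that ignores context.
    return [("a", math.log(0.6)), ("b", math.log(0.3)), ("c", math.log(0.1))]


best = beam_search((), toy_expand, beam_width=2, steps=3)
```

Tree-of-thought style reasoning searches mostly swap `expand` for an LLM proposing steps and the log-probability for a learned or self-evaluated score; the pruning loop stays the same.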

They not only need to see the text, they need to concentrate on its meaning,

The ability to read longer texts is dying

but because we measure ~emotions, we can see what people react to

So the answer is a recommender system for txt

The sample matches the participant's reading speed and reactions.

Colours represent the standardized BIS-BAS reactions of a single person.

The hardest thing is keeping participants interested in these materials 😅

txt in Polish
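One common way to standardize a single person's signal is within-person z-scoring, so the colours compare moments for that participant rather than across people. A minimal sketch, assuming the reactions arrive as a plain list of numeric samples; the function name and the sample values are hypothetical, and this is not necessarily the exact procedure used here.

```python
from statistics import mean, pstdev
from typing import List


def standardize_within_person(signal: List[float]) -> List[float]:
    """Z-score one participant's samples against their own mean and SD."""
    mu = mean(signal)
    sd = pstdev(signal)
    if sd == 0:
        # A flat signal carries no relative information; map it to zeros.
        return [0.0 for _ in signal]
    return [(x - mu) / sd for x in signal]


# Hypothetical BAS-like readings for one participant over consecutive text segments.
raw = [0.2, 0.5, 0.9, 0.4, 0.5]
z = standardize_within_person(raw)
```

After this step, 0 means "this person's average reaction" and ±1 is one of their own standard deviations, which is what makes a single colour scale readable per person.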