RJ Antonello

@rjantonello.bsky.social

Postdoc in the Mesgarani Lab. Studying how we can use AI to understand language processing in the brain.

Reposted by RJ Antonello

Excited to share our work on mechanisms of naturalistic audiovisual processing in the human brain 🧠🎬!!

www.biorxiv.org/content/10.1...

November 7, 2025 at 4:01 PM

Reposted by RJ Antonello

Introducing CorText: a framework that fuses brain data directly into a large language model, allowing for interactive neural readout using natural language.

tl;dr: you can now chat with a brain scan 🧠💬

1/n

November 3, 2025 at 3:17 PM

Reposted by RJ Antonello

🧠 New at #NeurIPS2025!

🎵 We're far from the shallow now🎵

TL;DR: We introduce the first "reasoning embedding" and uncover its unique spatio-temporal pattern in the brain.

🔗 arxiv.org/abs/2510.228...

October 30, 2025 at 10:25 PM

Reposted by RJ Antonello

As our lab started to build encoding 🧠 models, we were trying to figure out best practices in the field. So @neurotaha.bsky.social built a library to easily compare design choices & model features across datasets!

We hope it will be useful to the community & plan to keep expanding it!

1/

🚨 Paper alert:

To appear in the DBM NeurIPS Workshop

LITcoder: A General-Purpose Library for Building and Comparing Encoding Models

📄 arxiv: arxiv.org/abs/2509.091...

🔗 project: litcoder-brain.github.io

September 29, 2025 at 5:33 PM

In our new paper, we explore how we can build encoding models that are both powerful and understandable. Our model uses an LLM to answer 35 questions about a sentence's content. The answers linearly contribute to our prediction of how the brain will respond to that sentence. 1/6

August 18, 2025 at 9:42 AM
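
The model described in this post is a linear encoding model whose features are the LLM's answers. Here is a minimal sketch under several assumptions. The answers are treated as binary yes/no values, the weights are fit with ridge regression, and the data are synthetic, with no real LLM, fMRI, or hemodynamic delays. `fit_ridge` and every variable name are hypothetical and are not taken from the paper's code.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha*I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

rng = np.random.default_rng(0)
n_sentences, n_questions, n_voxels = 500, 35, 10

# Hypothetical LLM answers per sentence: 1 = "yes", 0 = "no" for each of 35 questions
X = rng.integers(0, 2, size=(n_sentences, n_questions)).astype(float)

# Synthetic voxel responses generated from known weights plus a little noise
true_W = rng.normal(size=(n_questions, n_voxels))
Y = X @ true_W + 0.1 * rng.normal(size=(n_sentences, n_voxels))

W = fit_ridge(X, Y, alpha=1.0)  # shape: (questions, voxels)
Y_hat = X @ W                   # prediction = weighted sum of the answers
```

Each column of `W` holds one weight per question. A voxel's weights can therefore be read directly as "how much a yes to question j shifts this voxel's predicted response." That direct reading is what makes this kind of model interpretable.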

Reposted by RJ Antonello

New paper with @mujianing.bsky.social & @prestonlab.bsky.social! We propose a simple model for human memory of narratives: we uniformly sample incoming information at a constant rate. This explains behavioral data much better than variable-rate sampling triggered by event segmentation or surprisal.

Efficient uniform sampling explains non-uniform memory of narrative stories https://www.biorxiv.org/content/10.1101/2025.07.31.667952v1

August 1, 2025 at 4:45 PM

Reposted by RJ Antonello

🚨Paper alert!🚨

TL;DR first: We used a pre-trained deep neural network to model fMRI data and to generate images predicted to elicit a large response for each of many different parts of the brain. We aggregate these into an awesome interactive brain viewer: piecesofmind.psyc.unr.edu/activation_m...

Cortex Feature Visualization

June 12, 2025 at 4:34 PM
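
Generating an input that a model predicts will drive a brain region can be illustrated with generic activation maximization. This toy sketch makes several assumptions. A tiny random one-layer tanh network with a linear voxel readout stands in for the pre-trained DNN, and the input is optimized by projected gradient ascent. None of the names or sizes come from the paper, which may generate images differently.

```python
import numpy as np

rng = np.random.default_rng(2)
n_pixels, n_features = 64, 16

# Hypothetical toy encoder: tanh hidden layer followed by a linear readout for one voxel
A = rng.normal(size=(n_features, n_pixels)) / np.sqrt(n_pixels)
w_voxel = rng.normal(size=n_features)

def predicted_response(x):
    """Predicted voxel response to a flattened 'image' x."""
    return w_voxel @ np.tanh(A @ x)

def grad_response(x):
    """Gradient of w . tanh(Ax) with respect to x: A^T (w * (1 - tanh(Ax)^2))."""
    h = np.tanh(A @ x)
    return A.T @ (w_voxel * (1.0 - h**2))

def maximize_response(steps=200, lr=0.1, max_norm=1.0):
    """Projected gradient ascent on the input, keeping ||x|| <= max_norm."""
    x = 0.01 * rng.normal(size=n_pixels)
    for _ in range(steps):
        x = x + lr * grad_response(x)
        norm = np.linalg.norm(x)
        if norm > max_norm:
            x = x * (max_norm / norm)  # project back onto the norm ball
    return x

x_star = maximize_response()
print(predicted_response(x_star))
```

The norm constraint matters. Without it, the toy objective could be pushed up by inputs of ever-larger magnitude rather than by a better-shaped pattern.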

Reposted by RJ Antonello

What are the organizing dimensions of language processing?

We show that voxel responses during comprehension are organized along 2 main axes: processing difficulty & meaning abstractness—revealing an interpretable, topographic representational basis for language processing shared across individuals

May 23, 2025 at 5:00 PM
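
One generic way to look for a small number of organizing axes in voxel responses is principal component analysis. This sketch assumes PCA via SVD on a voxels-by-stimuli response matrix, using synthetic data built with two latent dimensions. The paper's actual method may differ, and `top_axes` is a hypothetical helper.

```python
import numpy as np

def top_axes(R, k=2):
    """PCA via SVD on R, a (n_voxels, n_stimuli) response matrix.
    Returns each voxel's coordinates on the top-k axes, the axes themselves
    (as stimulus profiles), and the fraction of variance each axis explains."""
    Rc = R - R.mean(axis=0, keepdims=True)  # center each stimulus column across voxels
    U, S, Vt = np.linalg.svd(Rc, full_matrices=False)
    explained = S**2 / np.sum(S**2)
    scores = U[:, :k] * S[:k]  # where each voxel sits in the k-D space
    return scores, Vt[:k], explained[:k]

rng = np.random.default_rng(1)
n_voxels, n_stimuli = 2000, 200

# Synthetic responses driven by two random latent stimulus dimensions plus noise
latent = rng.normal(size=(2, n_stimuli))
loadings = rng.normal(size=(n_voxels, 2))
R = loadings @ latent + 0.2 * rng.normal(size=(n_voxels, n_stimuli))

scores, axes, explained = top_axes(R, k=2)
```

In the post, the two axes are interpreted as processing difficulty and meaning abstractness. In this toy, the latent dimensions are random and carry no such meaning. Plotting `scores` on the cortical surface is what would reveal a topography.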

Reposted by RJ Antonello

Stimulus dependencies---rather than next-word prediction---can explain pre-onset brain encoding during natural listening https://www.biorxiv.org/content/10.1101/2025.03.08.642140v1

March 11, 2025 at 7:15 AM

Reposted by RJ Antonello

I’m hiring a full-time lab tech for two years starting May/June. Strong coding skills required, ML a plus. Our research on the human brain uses fMRI, ANNs, intracranial recording, and behavior. A great stepping stone to grad school. Apply here:

careers.peopleclick.com/careerscp/cl...

Technical Associate I, Kanwisher Lab

MIT - Technical Associate I, Kanwisher Lab - Cambridge MA 02139

March 26, 2025 at 3:09 PM

Reposted by RJ Antonello

🚨 New Preprint!!

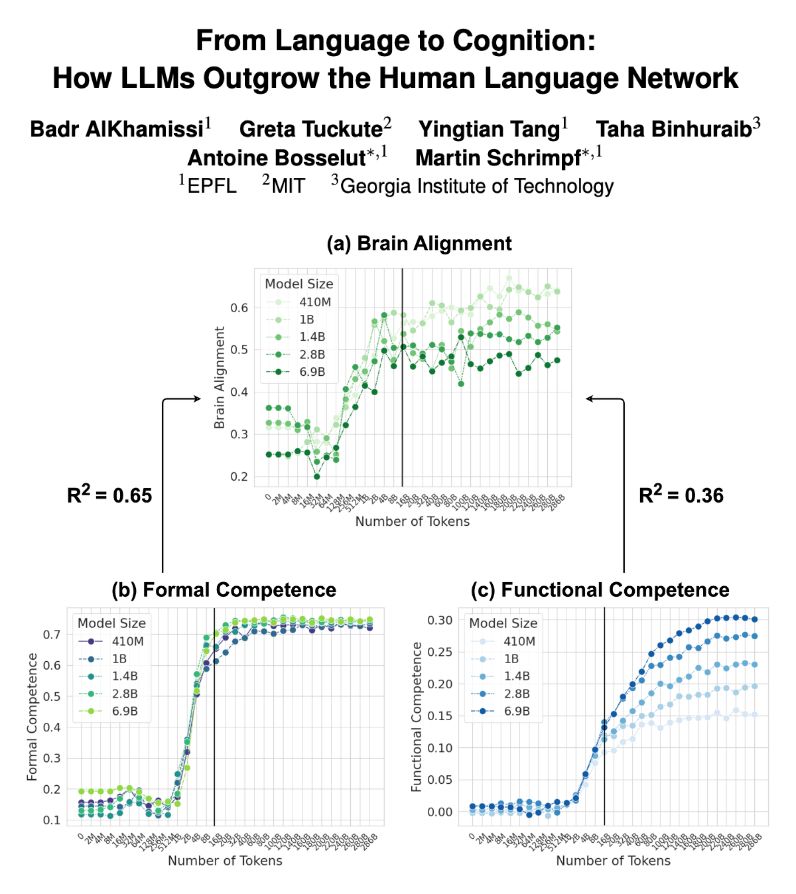

LLMs trained on next-word prediction (NWP) show high alignment with brain recordings. But what drives this alignment—linguistic structure or world knowledge? And how does this alignment evolve during training? Our new paper explores these questions. 👇🧵

March 5, 2025 at 3:58 PM

Reposted by RJ Antonello

Just in time for the holidays! Some cool new evidence from @eghbal_hosseini for the idea of universal representations shared by high-performing ANNs and brains in two domains: language and vision! Go Eghbal!

Why do diverse ANNs resemble brain representations? Check out our new paper with Colton Casto, @nogazs.bsky.social, Colin Conwell, Mark Richardson, & @evfedorenko.bsky.social on “Universality of representation in biological and artificial neural networks.” 🧠🤖

tinyurl.com/yckndmjt

Universality of representation in biological and artificial neural networks

Many artificial neural networks (ANNs) trained with ecologically plausible objectives on naturalistic data align with behavior and neural representations in biological systems. Here, we show that this...

December 27, 2024 at 8:40 PM

Really excited to be at NeurIPS this week presenting our new encoding model scaling laws work! Be sure to check out our poster (#402) on Tuesday afternoon and our new code and model release, and feel free to DM me to chat!

At NeurIPS this week, @rjantonello.bsky.social is presenting his work on scaling fMRI language encoding models. We're also sharing code, extracted features, and estimated model weights for some of our best models: github.com/HuthLab/enco...

Paper: arxiv.org/abs/2305.11863

GitHub - HuthLab/encoding-model-scaling-laws: Repository for the 2023 NeurIPS paper "Scaling laws for language encoding models in fMRI"

December 8, 2023 at 5:15 PM
