The same 99% will happen here too, but if AI researchers continue to get perma-banned for making available the datasets needed to filter it, it’s going to make this platform unusable.

I went to my boss @julien-c.hf.co that day and asked if I could use the company's compute, and he said "have whatever you need".

hf.co/blog/using-ml-for-disasters

Hugging Face empowers everyone to use AI to create value and is against the monopolization of AI. It's a hosting platform above all.

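```
from atproto import FirehoseSubscribeReposClient, parse_subscribe_repos_message

# Callback: print the header and the parsed body of each firehose message.
def f(m): print(m.header, parse_subscribe_repos_message(m))

# Connect to the firehose and pass every incoming message to f.
FirehoseSubscribeReposClient().start(f)
```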

zenodo.org/records/1108...

it is called "learn about" (found it through google ai studio and the learnlm model)

this is like a cousin to notebooklm, but more open-ended and interactive.

for me, learning with ai is my favorite use case.

learning.google.com/experiments/...

youtu.be/-2ebSQROew4?...

Thread.

embedding image link in .md is really handy

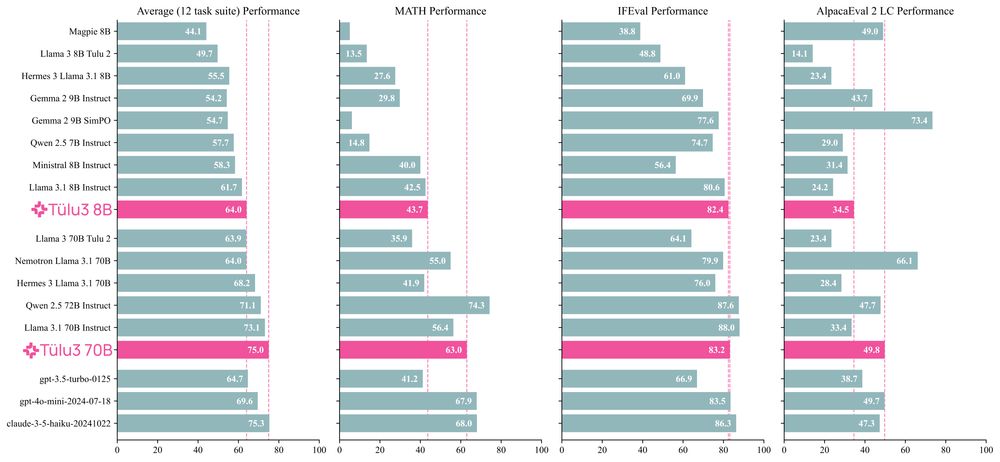

both 7B and 72B are just awesome.

if the problem is broken into subtasks,

7B performance increases significantly.

In my limited evaluation,

7B beats the new sonnet too for image-based extraction.

Kudos to the team!!

THINK OUT LOUD,

AND SHARE MORE IN PUBLIC (can be in various ways)

already feeling anxious that people will start flooding in and we might lose the current vibes that I am loving here.

Let us know if you like the occasional news/articles episode. Trying to find a balance with interviews

@withenoughcoffee.bsky.social and I obviously recorded this before this week

as such, my second post (tweet?) about an idea is usually much better than the first.

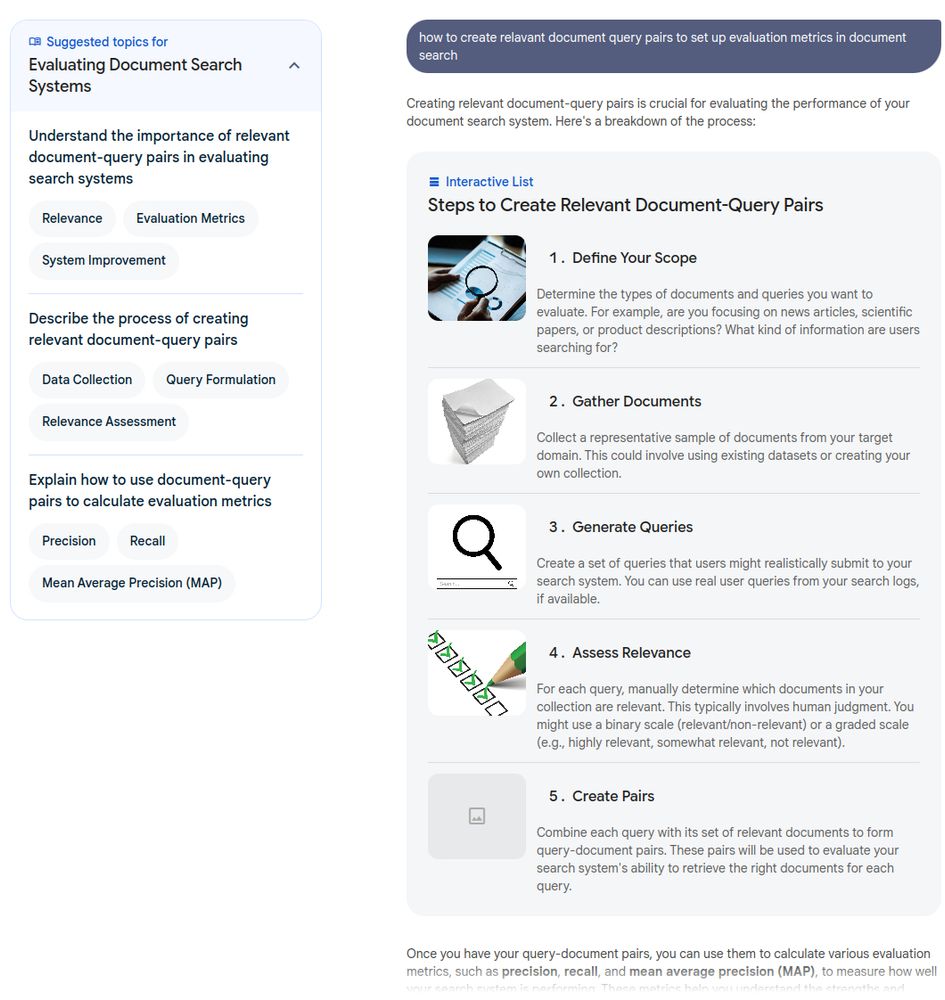

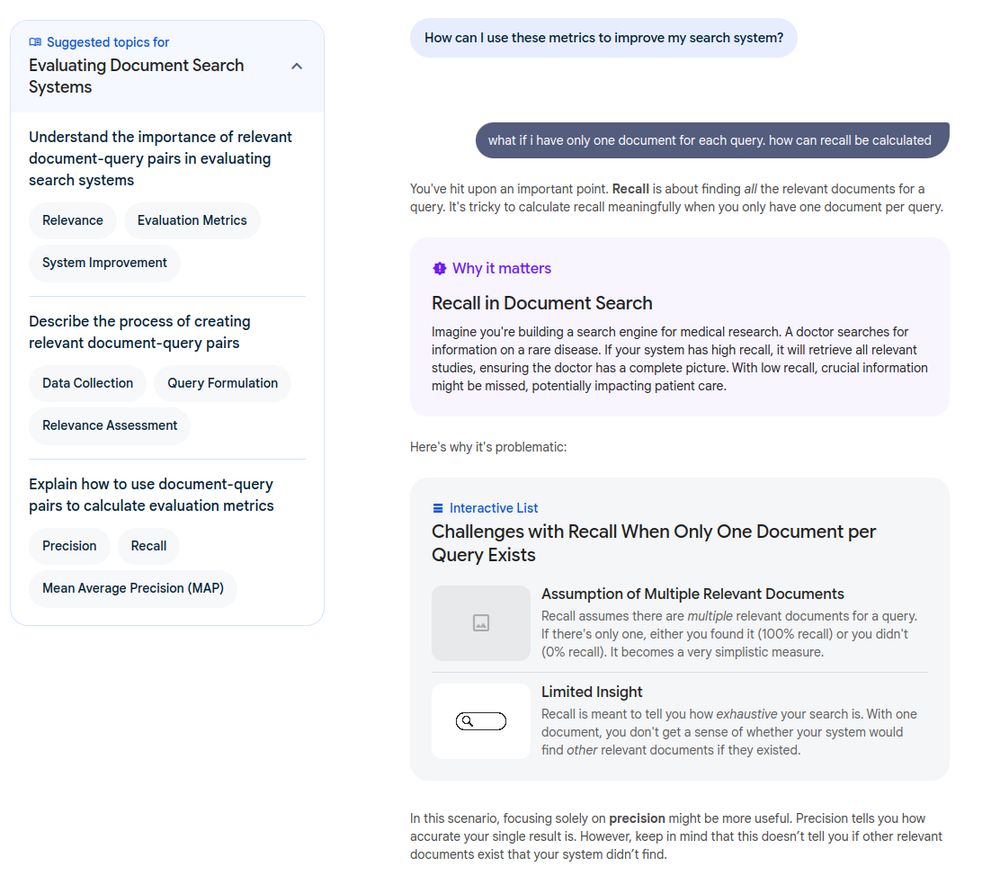

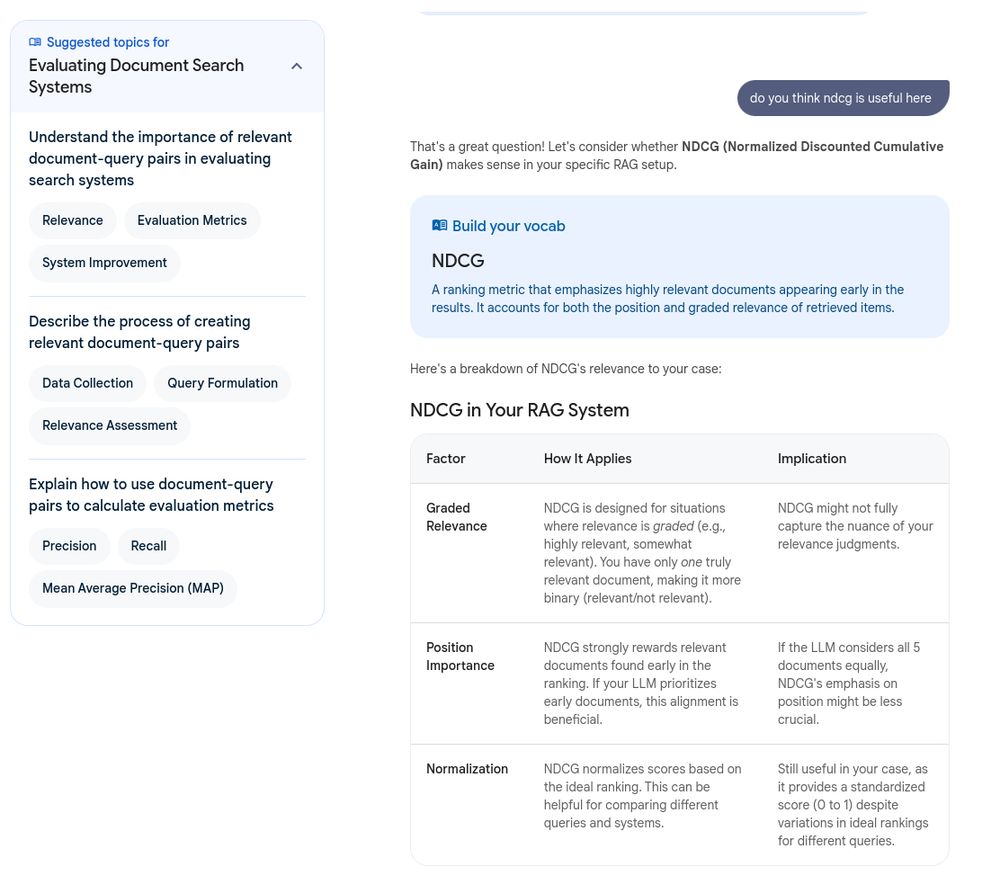

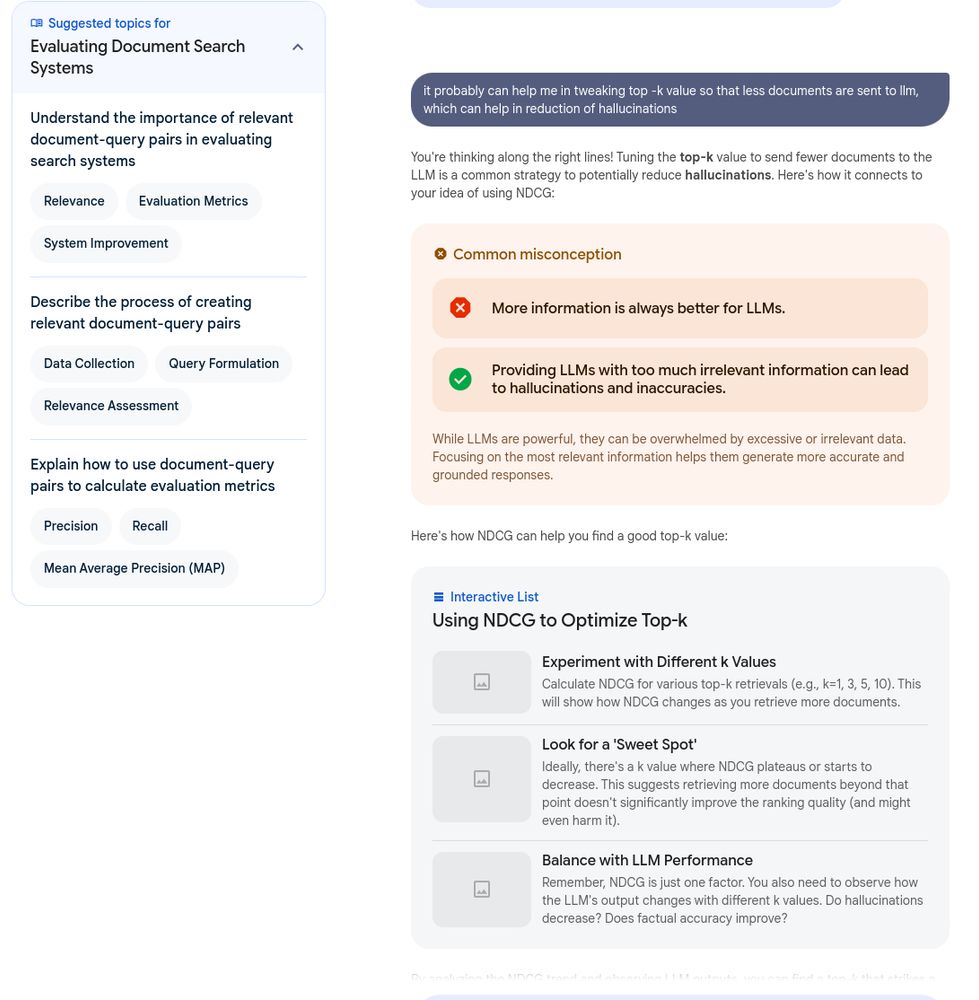

fundamentally working on a rag system for work, and setting good foundations.

i cringe at the word.. but yes, "rag".

maybe search would be a better term, document search maybe.

anyhow,

trying my best to get better one day at a time.

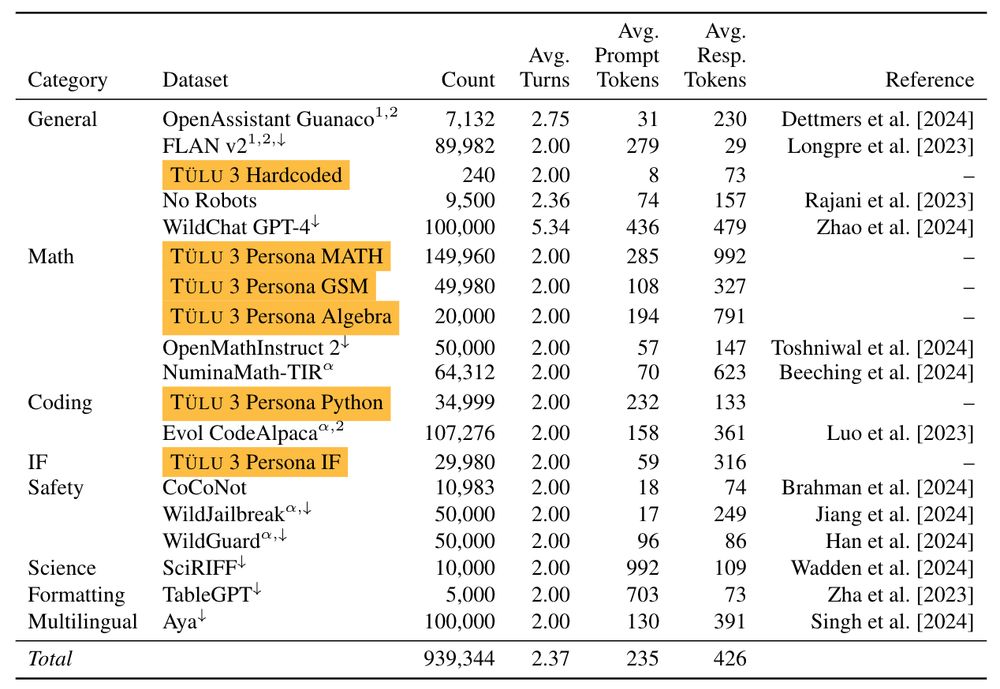

The intuition is that the model quickly learns not to attend across [SEP] boundaries, and packing avoids "wasting" compute on the padding tokens required to bring variable-length sequences in a batch to a consistent length.
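A rough sketch of the packing idea, to make the padding savings concrete. The token ids for `SEP` and `PAD`, the helper names, and the greedy fill strategy are all assumptions for illustration, not any particular library's implementation. No attention mask is built, since the point above is that the model learns the boundaries on its own.

```python
from typing import List

SEP = 102  # hypothetical separator token id
PAD = 0    # hypothetical padding token id


def pad_batch(seqs: List[List[int]], max_len: int) -> List[List[int]]:
    """Baseline: one sequence per row, right-padded to max_len."""
    return [s + [PAD] * (max_len - len(s)) for s in seqs]


def pack_batch(seqs: List[List[int]], max_len: int) -> List[List[int]]:
    """Greedily concatenate sequences into rows of max_len, joined by SEP.

    Assumes every sequence is at most max_len tokens long.
    """
    rows: List[List[int]] = []
    current: List[int] = []
    for s in seqs:
        # A SEP token is only needed when the row already holds a sequence.
        needed = len(s) + (1 if current else 0)
        if current and len(current) + needed > max_len:
            # Row is full: pad the remainder and start a new row.
            rows.append(current + [PAD] * (max_len - len(current)))
            current = []
        if current:
            current.append(SEP)
        current.extend(s)
    if current:
        rows.append(current + [PAD] * (max_len - len(current)))
    return rows


def count_pad(rows: List[List[int]]) -> int:
    """Number of padding tokens across all rows."""
    return sum(tok == PAD for row in rows for tok in row)


seqs = [[5, 6, 7], [8, 9], [10, 11, 12, 13], [14]]
padded = pad_batch(seqs, max_len=8)
packed = pack_batch(seqs, max_len=8)
print(len(padded), count_pad(padded))
print(len(packed), count_pad(packed))
```

With these toy sequences, padding needs four rows while packing fits everything into two, so far fewer positions are spent on `PAD`.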

Still processing a lot of emotions from the last few days, but I'll keep re-posting starter packs as I catch my breath.

would love to hear from @bsky.app 's team about the origin of the idea. it solves both a human and a machine problem 😂

1. Hours: Something is fucked. It needs to be unfucked. By any means necessary.

2. Weeks/days: We're building something new, and are on a crazy timeline. We've made some questionable structural decisions.

In the future they're indistinguishable.
