for your research to be relevant in AI, you might wanna pivot every 1-2 years

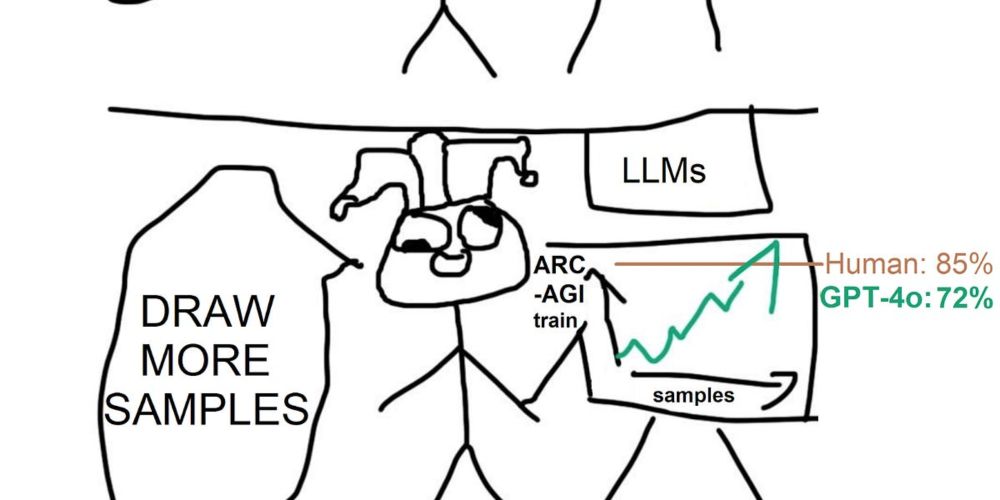

o3 is probably a more principled search technique...

Website: github.com/gfnorg/diffu...

I have anecdotal evidence from a friend who works at a client company for a popular insurance firm. They are using shitty “AI models”, which are basically just CatBoost, to mass-process claims. They know the models are shit, but that’s also the point. Truly sickening.

My guess is GANs are a dark horse and the latents carry important abstract features. But we haven’t explored this much since they are hard to train.

1) RNNs will be back for long-sequence modeling (the latent bottleneck acts as long-term memory), but attention will be used for local, short-term context

2) Hierarchical VAEs will be back (with trainable encoders, not like diffusion models)

Hope the hype doesn’t fizzle out, turning this app into another “Threads”
