assistant professor @ Uni Bonn & Lamarr Institute

interested in self-learning & autonomous robots, likes all the messy hardware problems of real-world experiments

https://rpl.uni-bonn.de/

https://hermannblum.net/

16.30 - 17.00: @sattlertorsten.bsky.social on Vision Localization Across Modalities

full schedule: localizoo.com/workshop

Contributed works cover Visual Localization, Visual Place Recognition, Room Layout Estimation, Novel View Synthesis, 3D Reconstruction

14.15 - 14.45: @ayoungk.bsky.social on Bridging heterogeneous sensors for robust and generalizable localization

full schedule: localizoo.com/workshop/

13.15 - 13.45: @gabrielacsurka.bsky.social on Privacy Preserving Visual Localization

full schedule: buff.ly/kM1Ompf

localizoo.com/workshop

Speakers: @gabrielacsurka.bsky.social, @ayoungk.bsky.social, David Caruso, @sattlertorsten.bsky.social

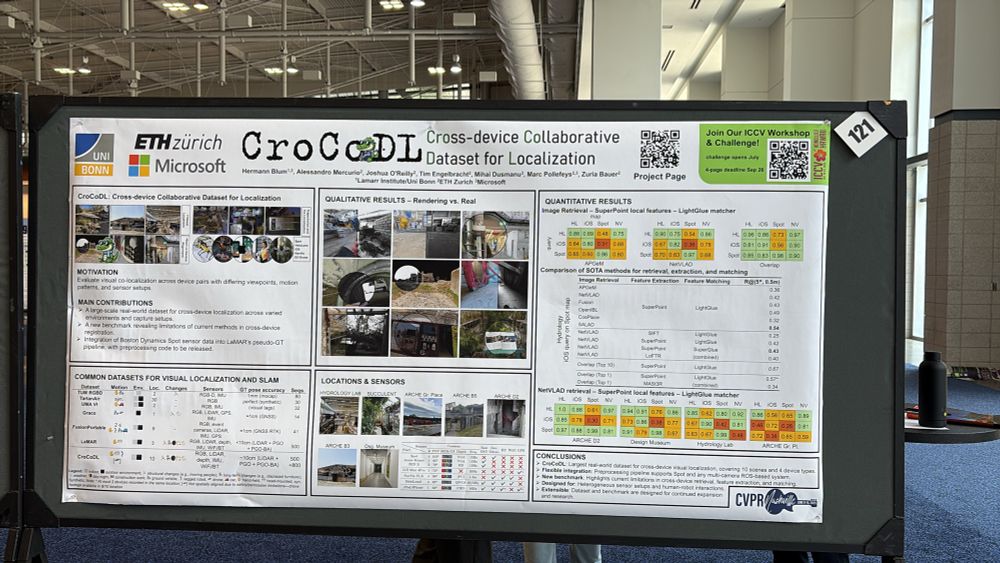

w/ @zbauer.bsky.social @mihaidusmanu.bsky.social @linfeipan.bsky.social @marcpollefeys.bsky.social

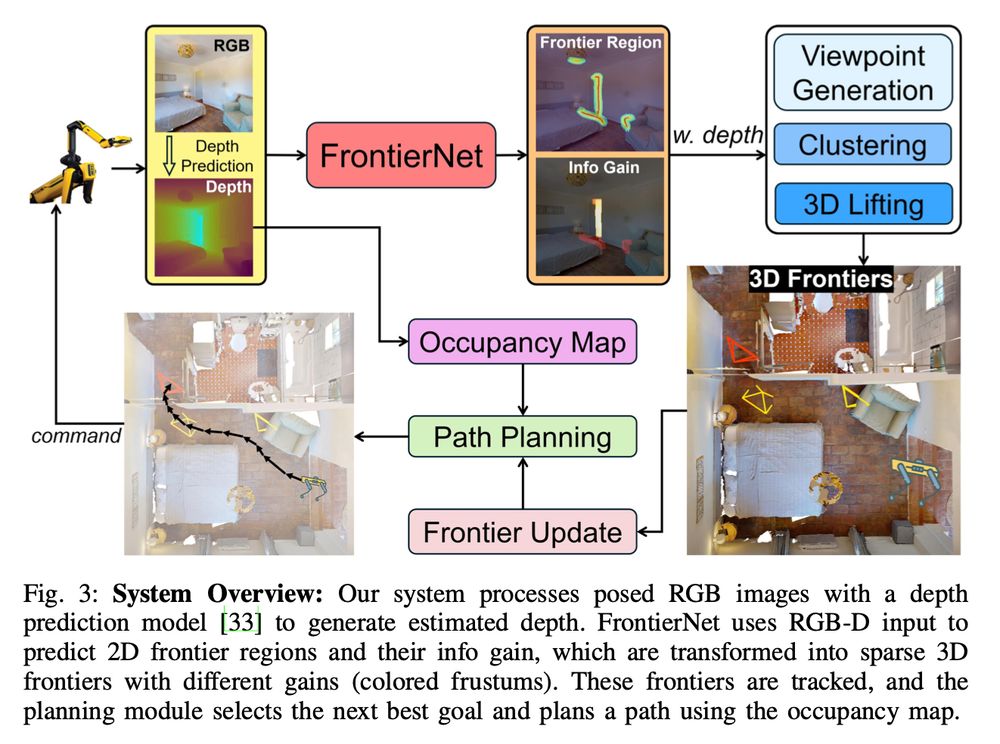

Key idea 💡 Instead of detecting frontiers in a map, we predict them directly from images. This lets FrontierNet implicitly learn visual semantic priors to estimate information gain, which speeds up exploration compared to geometric heuristics.

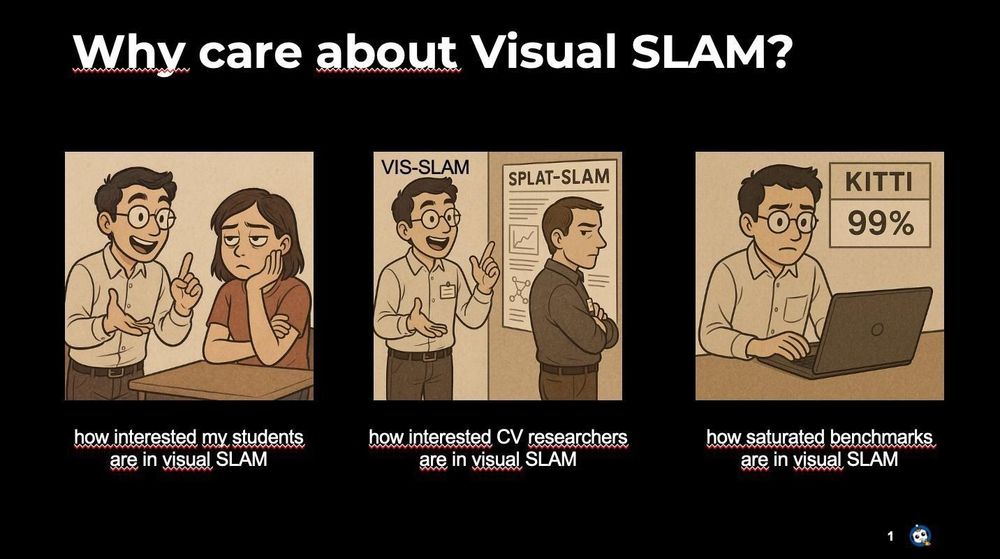

I'll be up later today at the Visual SLAM workshop at @roboticsscisys.bsky.social

buff.ly/ADHxPsX

cc @marcpollefeys.bsky.social @hermannblum.bsky.social @mihaidusmanu.bsky.social @cvprconference.bsky.social @ethz.ch

Accepted submissions will appear in the ICCV workshop proceedings 📄

Tackling real-world localization across smartphones, AR/VR, and robots with invited talks, paper track & competition on a novel dataset.

🔗 localizoo.com/workshop

🧵 1/2

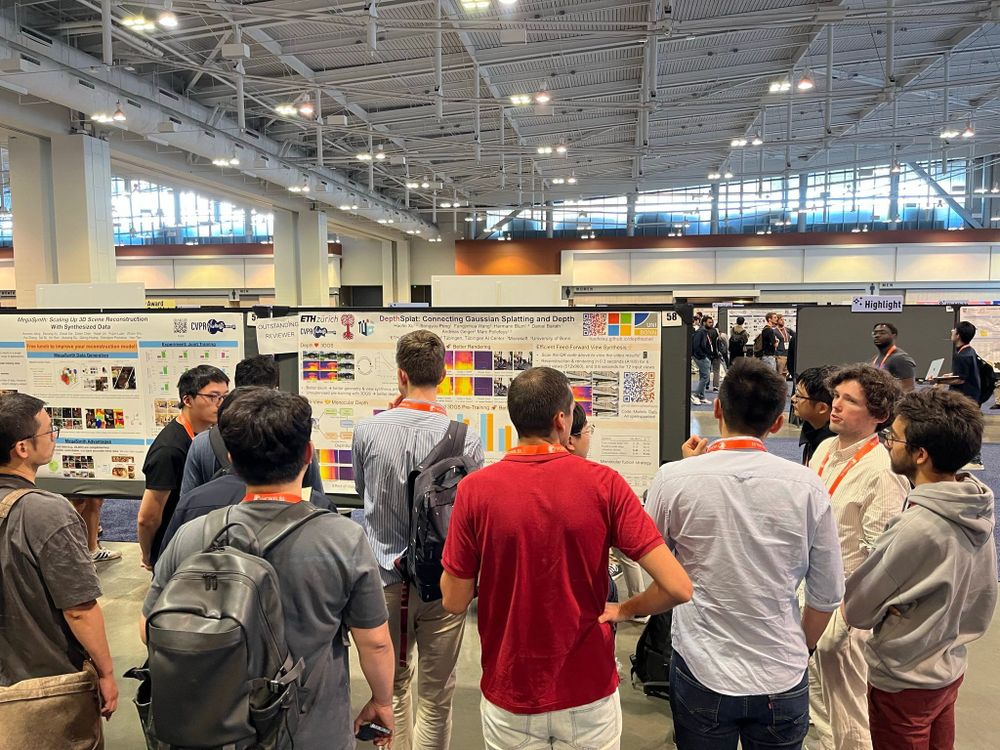

DepthSplat is a feed-forward model that achieves high-quality Gaussian reconstruction and view synthesis in just 0.6 seconds.

Looking forward to great conversations at the conference!

🔗 haofeixu.github.io/depthsplat/

Really cool to see something I got to work with during my PhD featured as a Swiss highlight 🤖

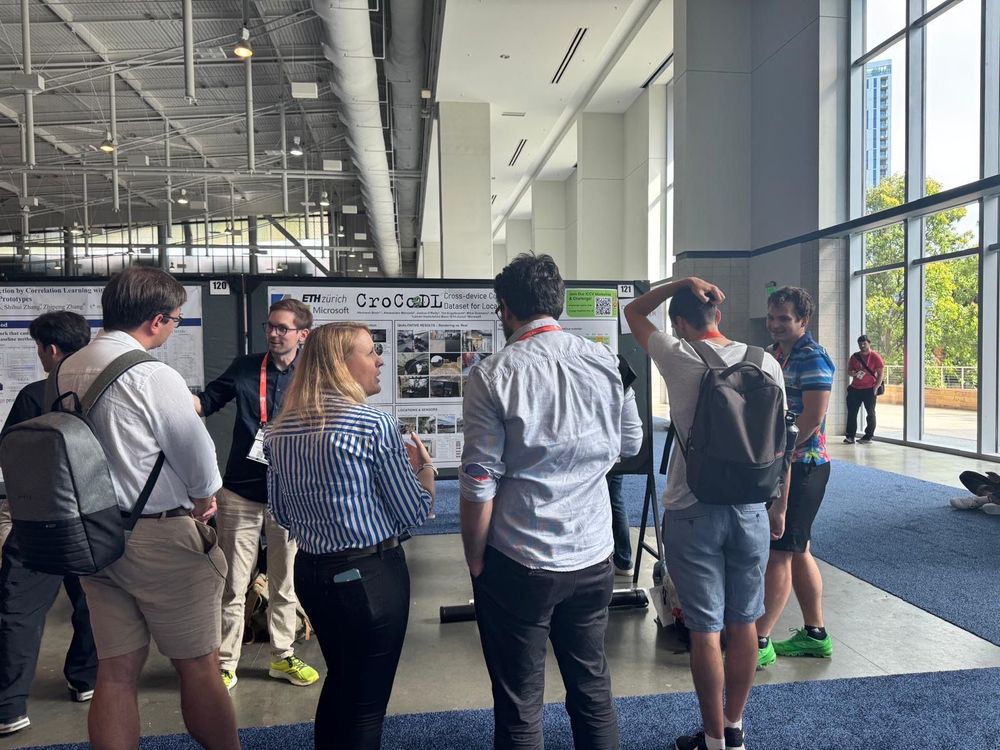

Localizing multiple phones, headsets, and robots in a common reference frame is still a real problem in mixed-reality applications. Our new challenge will track progress on this issue.

⏰ paper deadline: June 6

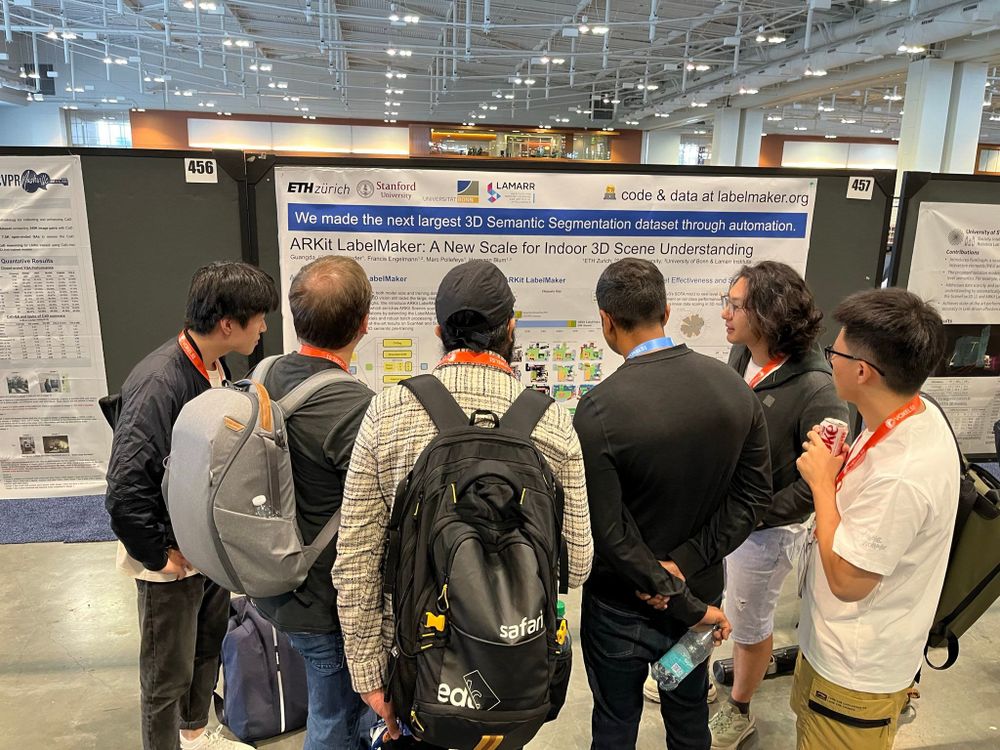

1️⃣ ARKitLabelMaker, the largest annotated 3D dataset, was accepted to CVPR 2025! This was an amazing effort by Guangda Ji 👏

🔗 labelmaker.org

📄 arxiv.org/abs/2410.13924

2️⃣ Mahta Moshkelgosha extended the pipeline to generate 3D scene graphs:

👩‍💻 github.com/cvg/LabelMak...

github.com/rmurai0610/M...

Try it out on videos or with a live camera

As easy to use as DUSt3R/MASt3R: from an uncalibrated RGB video, it recovers accurate, globally consistent poses and a dense map.

With @ericdexheimer.bsky.social* @ajdavison.bsky.social (*Equal Contribution)

The application deadline is the end of March.

www.uni-bonn.de/en/universit...

The gist is that exploration has always been treated as a geometric problem, but we show that visual cues are really helpful for detecting frontiers and predicting their information gain.

With FrontierNet, you get RGB-only exploration, object search, and more.

Boyang Sun, Hanzhi Chen, Stefan Leutenegger, Cesar Cadena, @marcpollefeys.bsky.social, Hermann Blum

tl;dr: predict frontiers (regions we haven't visited yet) from RGBD first and build the map afterwards, not the other way around.

arxiv.org/abs/2501.04597

🔗 behretj.github.io/LostAndFound/

📄 arxiv.org/abs/2411.19162

📺 youtu.be/xxMsaBSeMXo

www.google.com/about/career...