https://davidecortinovis-droid.github.io/

www.biorxiv.org/content/10.1...

🧵 1/n

www.biorxiv.org/content/10.1...

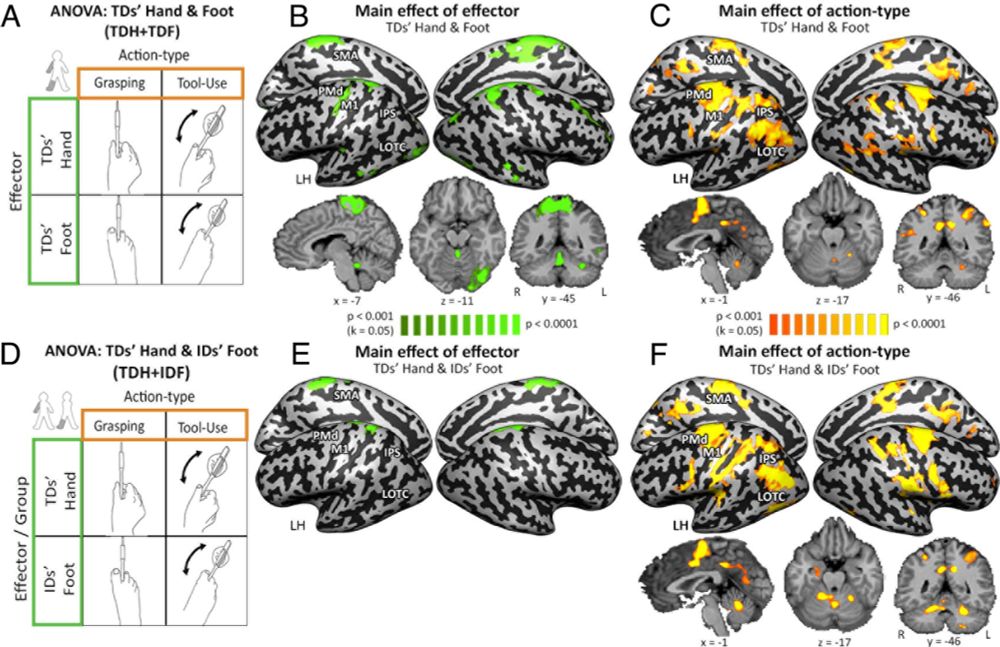

New work led by Andre Bockes and Angelika Lingnau - with some small support from me - on dimensions underlying the mental representation of dynamic human actions.

www.nature.com/articles/s44...

1/8: How do human neurons encode meaning?

In this work, led by Katharina Karkowski, we recorded hundreds of human MTL neurons to study semantic coding in the human brain:

doi.org/10.1101/2025...

Paper: arxiv.org/abs/2510.03684

🧵

@tlmnhut.bsky.social show: supervised pruning of a DNN’s feature space better aligns with human category representations, selects distinct subspaces for different categories, and more accurately predicts people’s preferences for GenAI images.

doi.org/10.1145/3768...

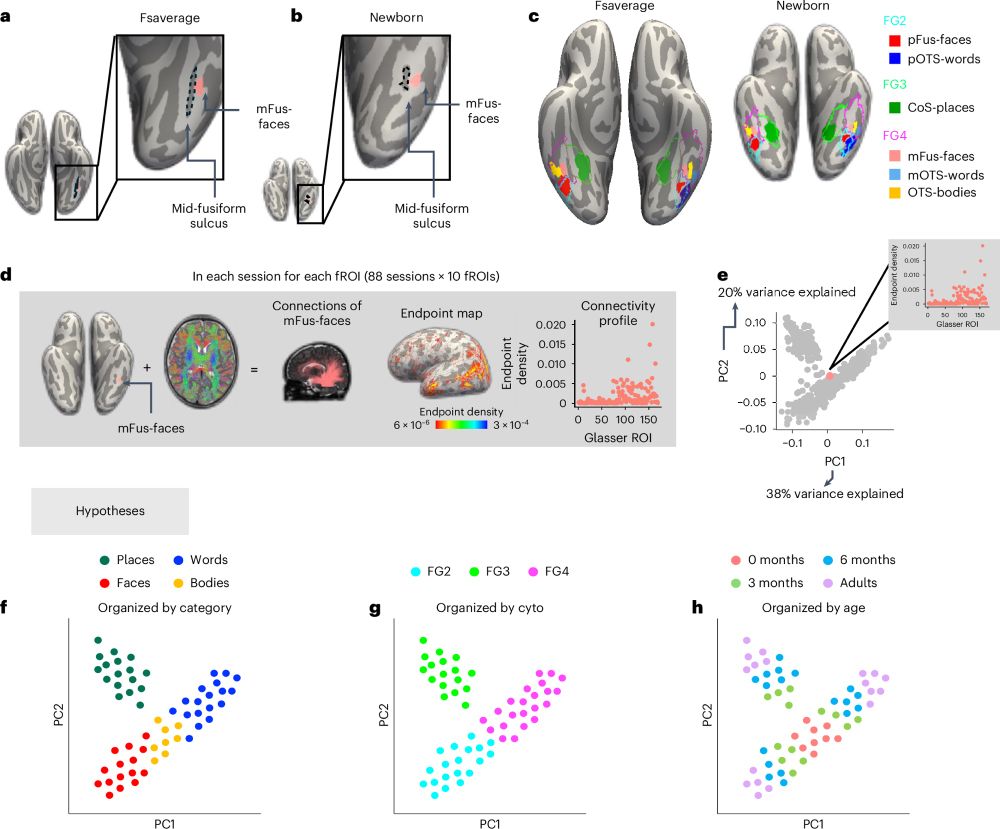

Led by: @dongwonoh.bsky.social

Paper: www.nature.com/articles/s41...

(1/6)

We find a striking dissociation: it’s not shared object recognition. Alignment is driven by sensitivity to texture-like local statistics.

📊 Study: n=57, 624k trials, 5 models doi.org/10.1101/2025...

We asked: How does the motor cortex account for arm posture when generating movement?

Paper 👉 www.biorxiv.org/content/10.1...

1/10

Our latest study, led by Josephine Raugel (FAIR, ENS), is now out:

📄 arxiv.org/pdf/2508.18226

🧵 thread below

What happens to the brain’s body map when a body-part is removed?

Scanning patients before and up to 5 yrs after arm amputation, we discovered the brain’s body map is strikingly preserved despite amputation

www.nature.com/articles/s41593-025-02037-7

🧵1/18

www.jneurosci.org/content/earl...

In @currentbiology.bsky.social, @chazfirestone.bsky.social & I show how these images—known as “visual anagrams”—can help solve a longstanding problem in cognitive science. bit.ly/45BVnCZ

#VisionScience

#ObjectPerception

#VisionNeuroscience #CognitiveNeuroscience #VisionScience

See you there!

arxiv.org/abs/2508.00043
