I’m relying on these tools for STEM-related tasks, analysis, etc., but becoming very cynical about their use in (non-technical) writing.

www.linkedin.com/pulse/how-co...

Prompt engineering with o1:

Interesting to compare this o1 strategy with the CLEAR or RFTC framework (role, format, task, constraints).

I currently find myself relying less on “role” and more on “context dump”

(from this post explaining the new o3 model and the ARC benchmark: arcprize.org/blog/oai-o3-...)

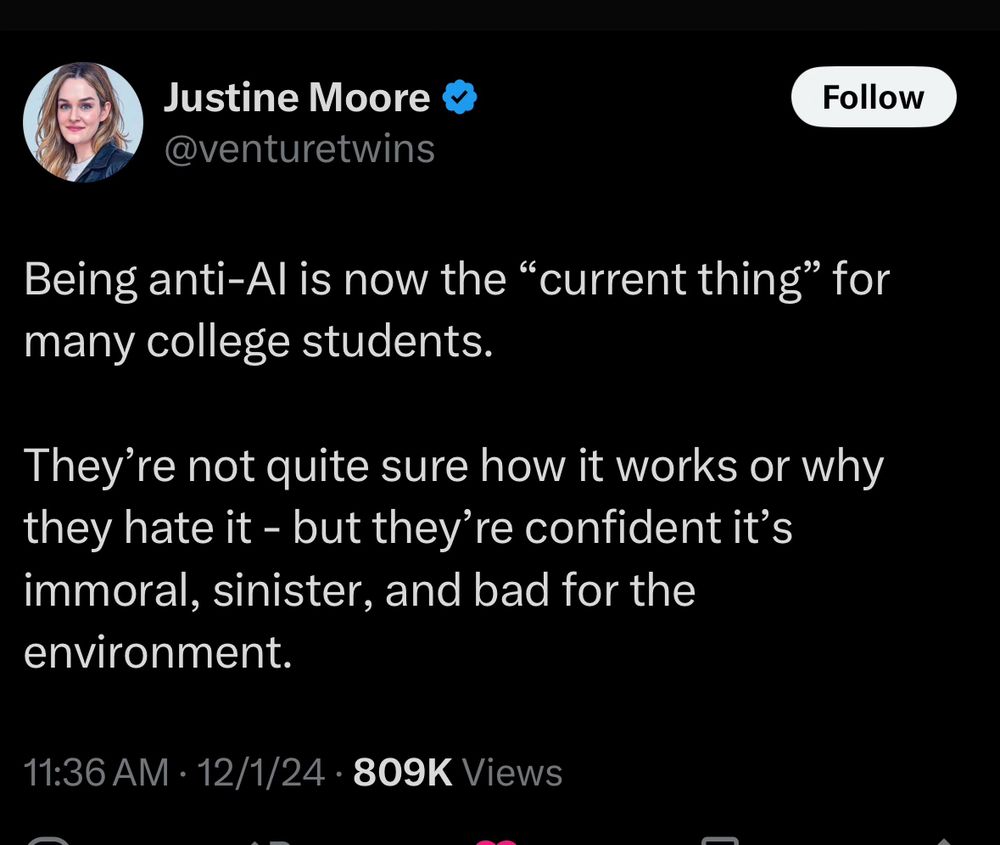

Most students, OTOH, say they’re uncomfortable with faculty using AI to evaluate their work, but they’re comfortable with AI in ed otherwise.

It won't bear much weight as a literal claim about historical causality.

www.ft.com/content/37bb...

these models internalize not just the style but the whole vibe - the writer's voice, epistemic stance, worldview, etc. "write like x" tightens everything up so nicely

It's odd that "preparing for the workplace" now means both NOT using GenAI for some things and being really savvy with it for other tasks.

Anyone publishing on this?

article here: maxread.substack.com/p/hawk-tuah-...

perplexity's summary here: www.perplexity.ai/search/zynte...

There are now multiple controlled experiments showing that using AI to get answers to problems hurts students' learning (even though they think they are learning), but that students who use well-prompted LLMs as a tutor perform better on tests.

You may discover that the real finding here is the huge gulf between your own taste and that of non-expert readers ... a gulf that has likely existed at least since, oh, IA Richards?

Nice to see you.
