With EVALUESTEER, we find even the best RMs we tested exhibit their own value/style biases, and are unable to align with a user >25% of the time. 🧵

@kghate.bsky.social, @monadiab77.bsky.social, @daniel-fried.bsky.social, @atoosakz.bsky.social

Preprint: www.arxiv.org/abs/2509.25369

(📷 xkcd)

"It was hard to seriously entertain both [doomer and AI-as-normal tech] views at the same time."

www.vox.com/future-perfe...

I agree with the basic idea that the current speed & trajectory of AI progress is incredibly dangerous!

But I don't buy his general worldview. Here's why:

www.vox.com/future-perfe...

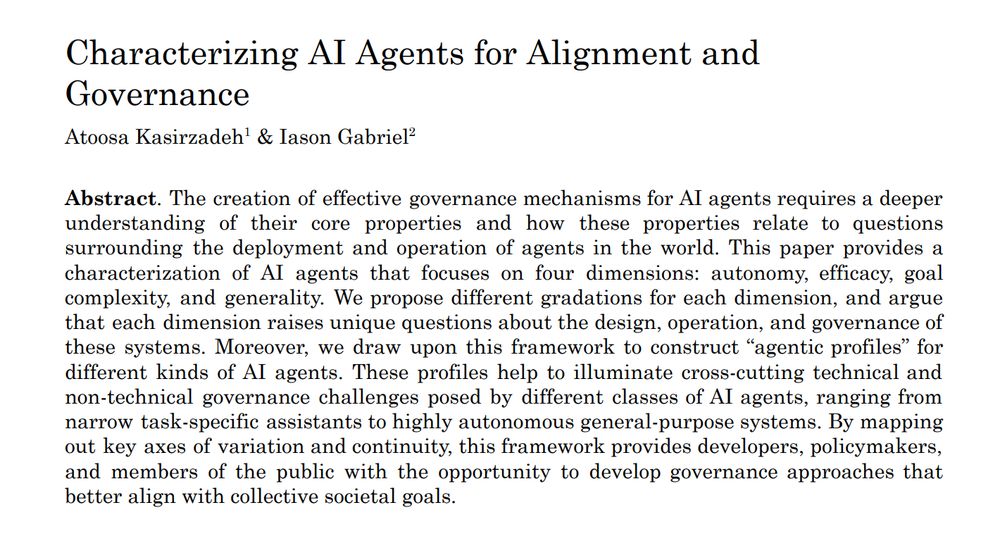

Paper at https://arxiv.org/pdf/2509.17878

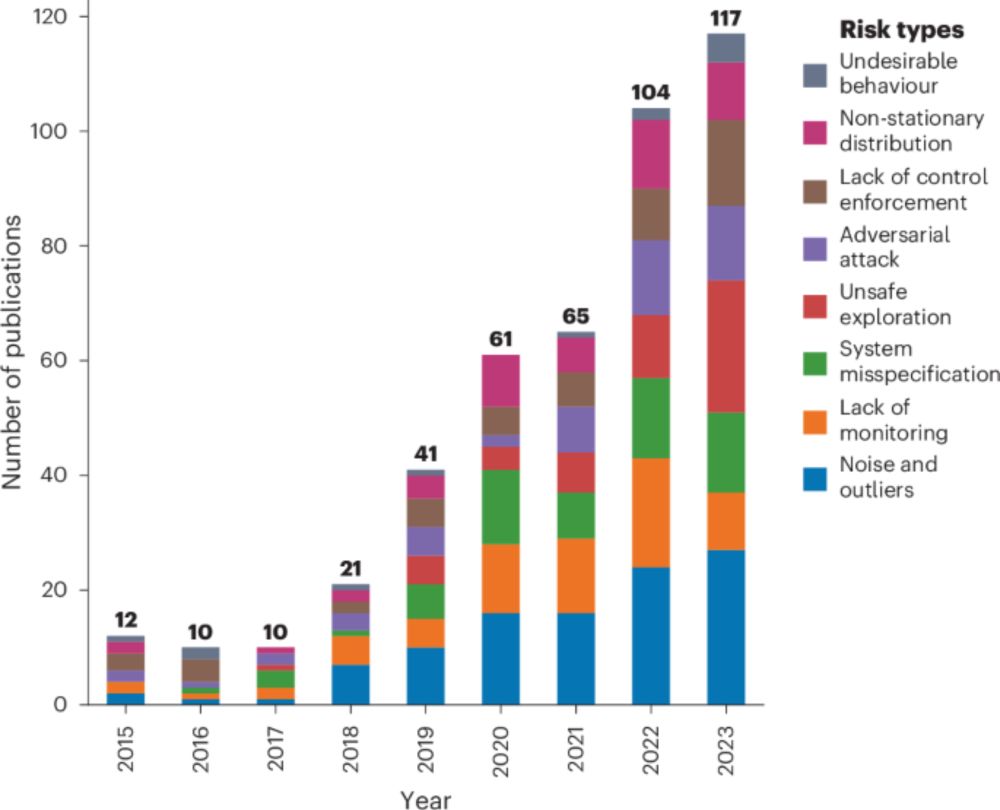

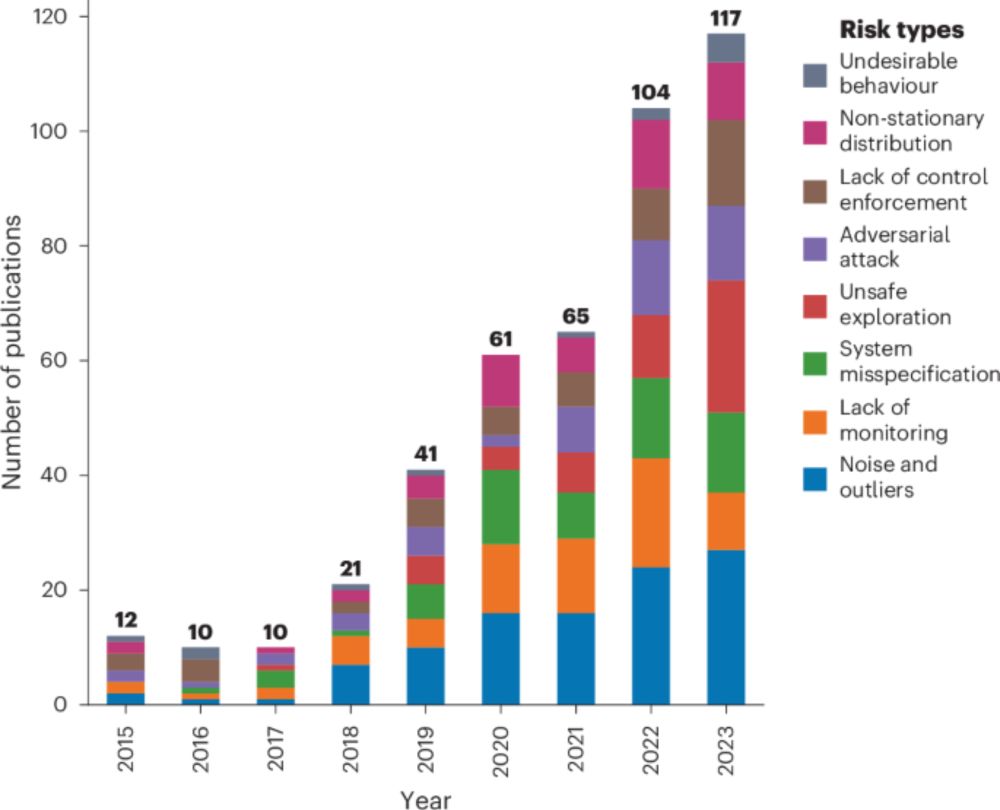

A warning that suggests AI safetyists should take AI ethics & sociology much more seriously! arxiv.org/pdf/2401.07836

We look at 4 ways AI scientists can go wrong, design experiments showing how these failures manifest in 2 open-source AI scientists, and recommend detection strategies.

A research program dedicated to co-aligning AI systems *and* institutions with what people value.

It's the most ambitious project I've ever undertaken.

Here's what we're doing: 🧵

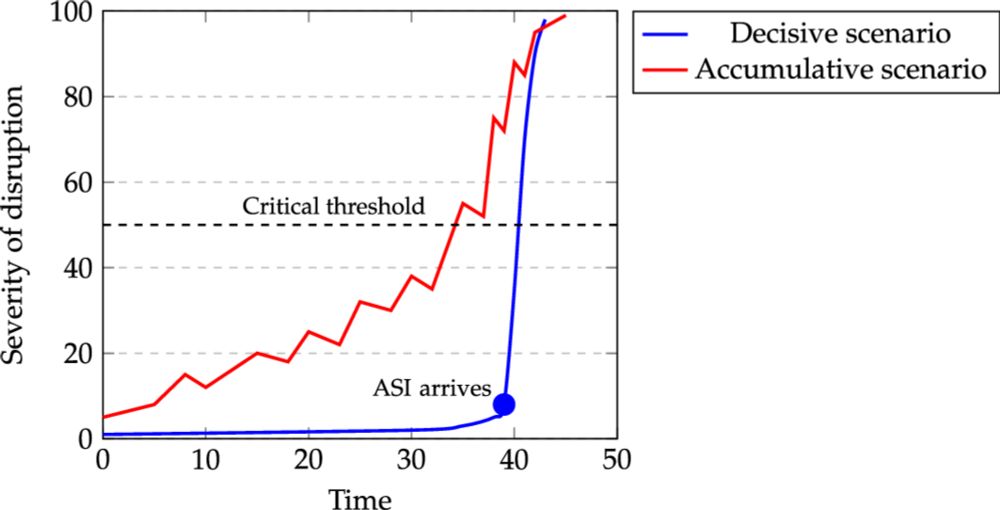

Great paper helping us understand the different possibilities

We challenge the narrative that AI safety is primarily about minimizing existential risks from AI. Why does this matter?

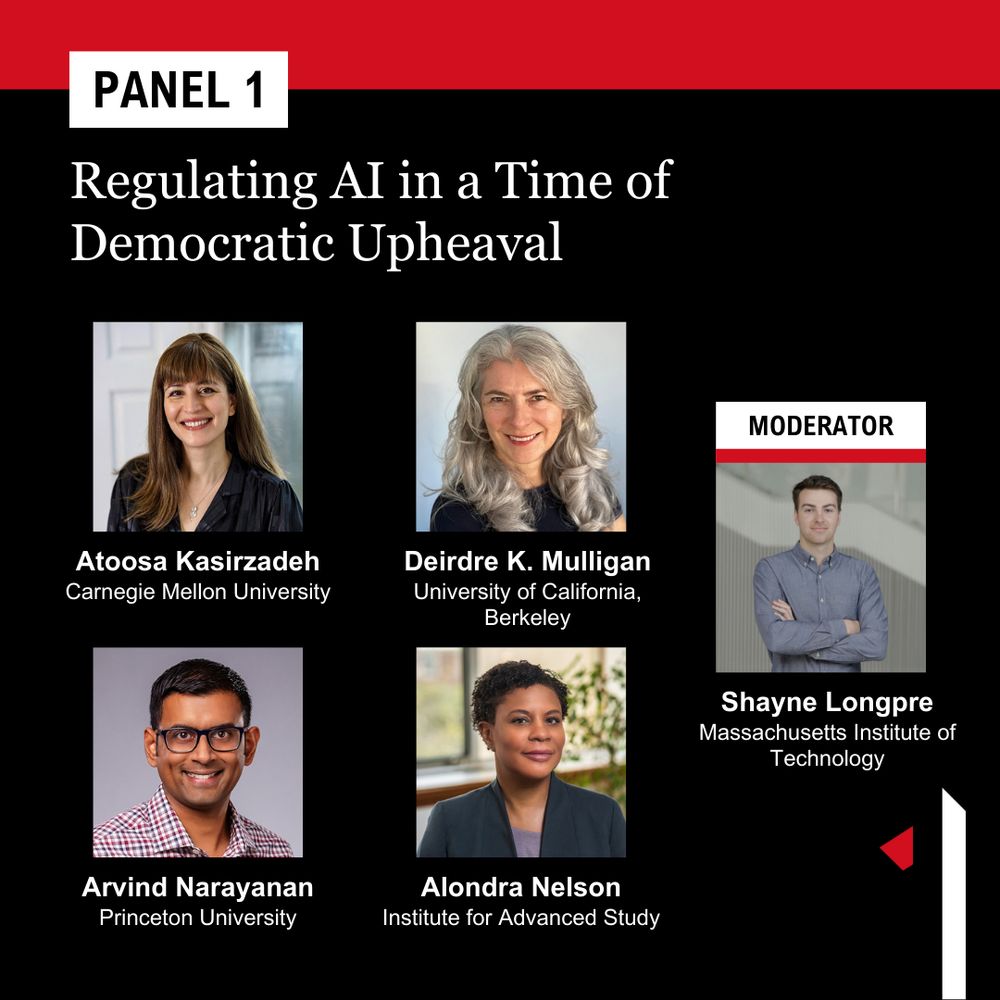

Panelists: @atoosakz.bsky.social, @randomwalker.bsky.social, @alondra.bsky.social, and Deirdre K. Mulligan.

Moderator: @shaynelongpre.bsky.social.

#AIDemocraticFreedoms

@shaynelongpre.bsky.social to kick it off. RSVP: www.eventbrite.com/e/artificial...

Most AI x-risk discussions focus on a cataclysmic moment—a decisive superintelligent takeover. But what if existential risk doesn’t arrive like a bomb, but seeps in like a leak?
